**Lead-in** | In today’s audio and video era, WebRTC is used in numerous products and business scenarios. As we explore how browsers capture device audio and video data and how WebRTC transmits real-time audio over the network, it’s crucial to reinforce our understanding of network transmission protocols. This foundation will enhance our comprehension of WebRTC. It is advisable to read this in conjunction with articles in the frontend audio and video series.

1. Transport Layer Protocols: TCP vs. UDP

We all know the HTTP protocol, which runs on top of the TCP protocol and serves as the foundation of the World Wide Web’s operations. As a front-end developer, it seems natural for us to be familiar with HTTP and TCP protocols, so much so that HTTP status codes, message structures, TCP three-way handshake, and four-way termination have become standard basic interview questions. However, when it comes to other protocols, we might feel somewhat unfamiliar.

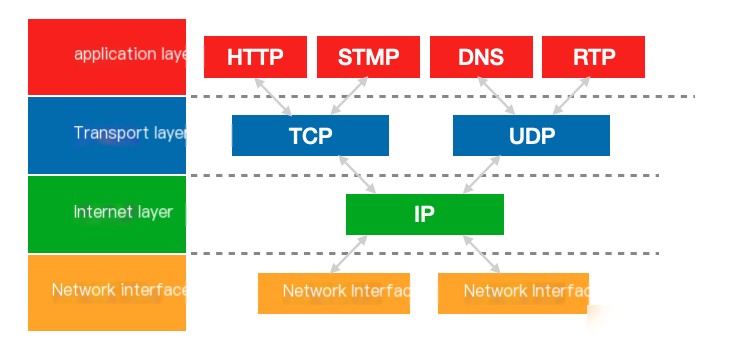

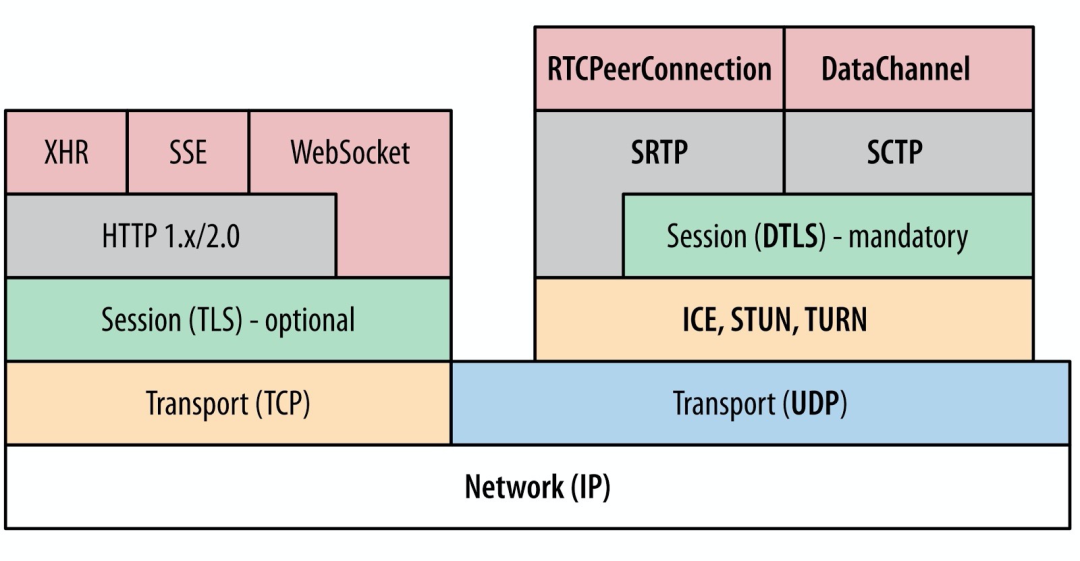

The diagram shows the 4-layer structure of the TCP/IP protocol suite. The transport layer, which is built on the internet layer, provides data transmission services between nodes. Its best-known protocols are the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP).

The two protocols themselves involve a vast amount of content, but when it comes to selecting one for use, we might as well directly learn and understand them by comparing TCP and UDP.

1.1. Comparison of TCP and UDP

Overall, there are three main differences:

- TCP is connection-oriented, while UDP is connectionless.

- TCP is stream-oriented, meaning it treats data as a continuous sequence of unstructured bytes; UDP is message-oriented.

- TCP provides reliable transmission, meaning data transmitted over a TCP connection will not be lost, duplicated, or arrive out of order, whereas UDP provides unreliable transmission.

1.1.1. UDP is connectionless, TCP is connection-oriented

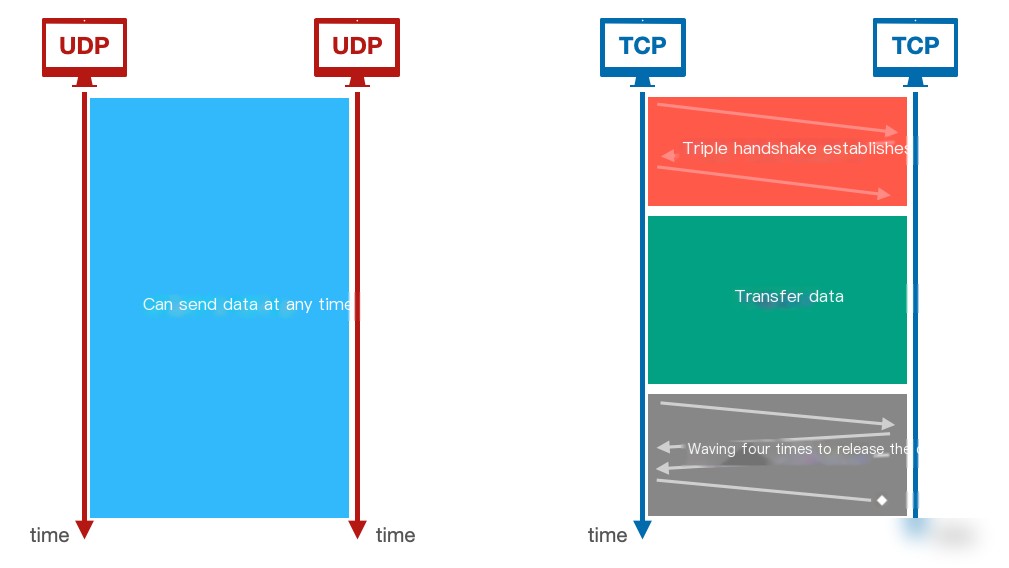

UDP does not require establishing a connection before transmitting data, so either party can send data at any time; hence, UDP is connectionless. In contrast, TCP establishes a connection through a three-way handshake before transmitting data and releases it with a four-way termination afterwards. The details are not covered here.

1.1.2. UDP is message-oriented, TCP is stream-oriented

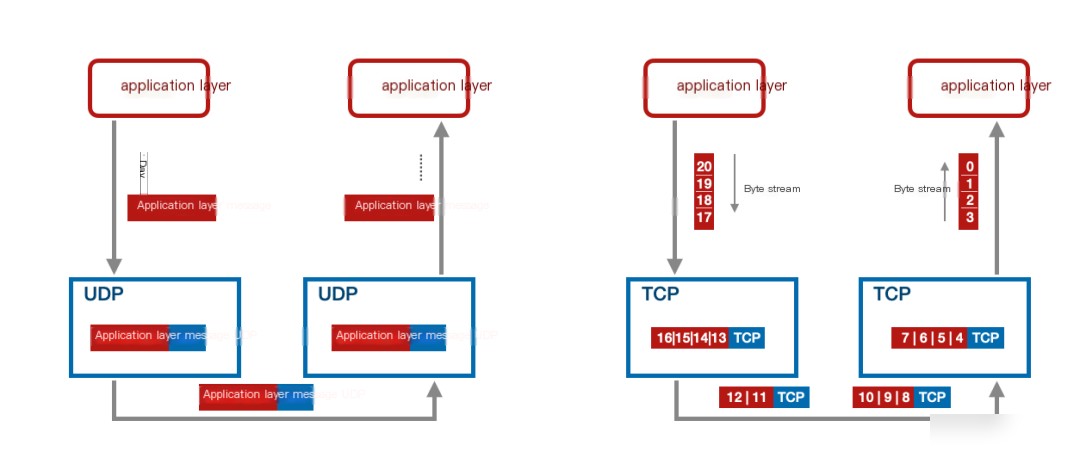

With UDP, the application layer on each side simply hands whole messages to, or receives whole messages from, the UDP transport layer. Apart from transmission, UDP only adds or removes the UDP header, leaving the application-layer message intact. Hence, UDP is said to be message-oriented.

TCP, by contrast, treats the data handed down from the application layer as a stream of unstructured bytes. It stores them in a buffer and builds TCP segments for transmission according to its own strategy. The receiving TCP extracts only the payload, stores it in a buffer, and hands the buffered byte stream over to the application layer. TCP does not guarantee that the chunks the receiving application reads match the sizes of the chunks the sending application wrote. Therefore, it is referred to as byte-stream oriented, which also forms the basis for TCP's flow control and congestion control mechanisms.
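The difference between the two models can be sketched in a few lines of Python. This is only an illustration, not part of WebRTC: `udp_roundtrip`, `frame`, and `deframe` are hypothetical helper names, and the 4-byte length prefix is just one common way an application restores message boundaries on top of a byte stream such as TCP.

```python
import socket
import struct

def udp_roundtrip(messages):
    """Send each message as one datagram over loopback and return what arrives.

    No handshake is needed, and each recvfrom() yields exactly one message.
    UDP itself does not guarantee delivery or ordering; loopback is just
    usually well behaved.
    """
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        for message in messages:
            sender.sendto(message, receiver.getsockname())
        return [receiver.recvfrom(2048)[0] for _ in messages]
    finally:
        sender.close()
        receiver.close()

def frame(message: bytes) -> bytes:
    """Prefix a message with its length so it can travel over a byte stream."""
    return struct.pack("!I", len(message)) + message

def deframe(stream: bytes):
    """Split buffered stream bytes into complete messages plus leftover bytes."""
    messages, offset = [], 0
    while offset + 4 <= len(stream):
        (length,) = struct.unpack_from("!I", stream, offset)
        if offset + 4 + length > len(stream):
            break  # the rest of this message has not arrived yet
        messages.append(stream[offset + 4:offset + 4 + length])
        offset += 4 + length
    return messages, stream[offset:]
```

With UDP, two `sendto` calls arrive as two separate messages. With a byte stream, the receiver may see any split of the bytes, so `deframe` has to keep the incomplete tail until more data arrives.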

1.1.3. UDP is unreliable, TCP is reliable

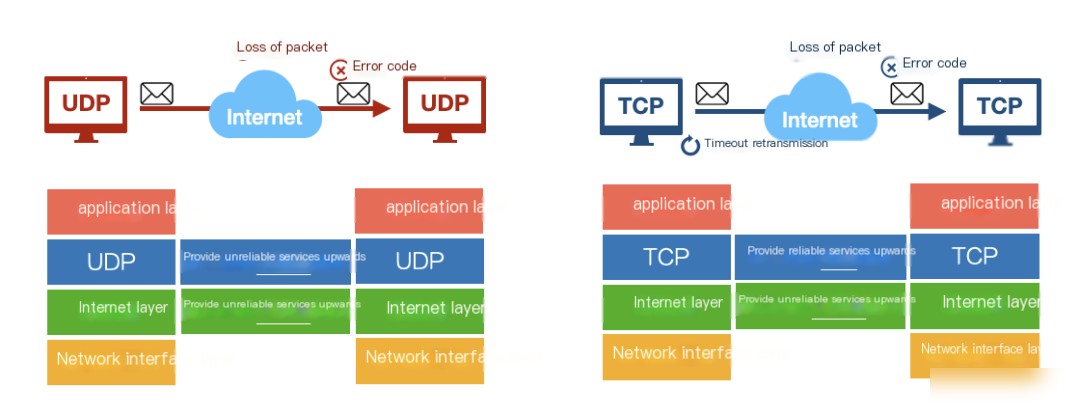

When transmitting data using UDP, packets may be lost along the way, and the sender takes no action. The receiver verifies the checksum in the header and, if it detects an error, discards the datagram without taking any corrective action either. Therefore, UDP provides a connectionless and unreliable service to the upper layers.

When TCP transmits data, if a packet is lost or the receiver detects an error (in which case it discards the packet), the receiver does not send an acknowledgment, which triggers a timeout retransmission from the sender. Through strategies like this, TCP ensures that data is eventually received correctly for as long as the connection stays alive. Thus, TCP is said to provide a connection-oriented reliable service.

1.2. Why Choose UDP

Since TCP has so many advantageous features, why is UDP used in real-time audio and video transmission?

The reason is that real-time audio and video are particularly sensitive to latency, and TCP cannot achieve sufficiently low latency. Consider packet loss: under TCP's timeout retransmission mechanism, the retransmission timeout (RTO) grows exponentially with each failed attempt. If 7 retransmissions still fail, the theoretical total wait can reach 2 minutes! With latency this high, normal real-time communication is obviously impossible, and at this point TCP's reliability becomes a burden instead.
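The 2-minute figure can be reproduced with a little arithmetic. The sketch below assumes an initial timeout of 1 second that doubles after every failed attempt; both the starting value and the helper name `cumulative_backoff_wait` are illustrative, and real TCP stacks tune these values differently.

```python
def cumulative_backoff_wait(initial_timeout_s: float, retransmissions: int) -> float:
    """Total time spent waiting if the timeout doubles after every failed attempt."""
    return sum(initial_timeout_s * 2 ** attempt for attempt in range(retransmissions))

if __name__ == "__main__":
    # 1 + 2 + 4 + 8 + 16 + 32 + 64 seconds of waiting across 7 attempts
    for attempts in range(1, 8):
        print(attempts, cumulative_backoff_wait(1.0, attempts))
```

Under these assumptions, seven failed attempts add up to 127 seconds, which is where "about 2 minutes" comes from.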

In practice, however, losing a small number of real-time audio and video packets during transmission usually does not significantly affect the receiver. Because UDP is connectionless, it sends data without waiting for confirmation of receipt, which gives it advantages such as low resource consumption and fast processing.

Therefore, UDP is highly efficient and offers low latency, making it the preferred transport layer protocol in real-time audio and video transmission.

WebRTC still uses reliable TCP for signaling control. However, for audio and video data transmission, UDP is used as the transport layer protocol. (As shown in the top right of the image.)

2. Application Layer Protocols: RTP and RTCP

Reliance solely on UDP for real-time audio and video communication is evidently insufficient. It also necessitates an application layer protocol based on UDP to provide additional assurances tailored specifically for audio and video communication.

2.1. RTP Protocol

In audio and video, the data volume of a single video frame requires multiple packets for transmission, which are then assembled into the corresponding frame at the receiving end to accurately reconstruct the video signal. Thus, it is essential to achieve at least two objectives:

- Detect out-of-order packets and keep sampling and playback synchronized.

- Detect packet loss at the receiving end.

However, UDP does not have this capability, so in audio and video transmission, UDP is not directly used. Instead, RTP is required as the application layer protocol in real-time audio and video.

RTP stands for Real-time Transport Protocol and is primarily used for real-time data transmission. What capabilities does the RTP protocol provide?

It provides the following four:

- Real-time data end-to-end transmission.

- Sequence Number (used for packet loss and reordering check)

- Timestamp (used for time synchronization and for monitoring delay distribution)

- Payload type definition (used to specify the data encoding format)

But it does not guarantee:

- Timely delivery

- Quality of service

- Delivery (packets may be lost)

- Ordering (packets may arrive out of sequence)

Next, let’s take a brief look at the RTP protocol specification[1].

RTP packets are composed of two parts: the header and the payload.

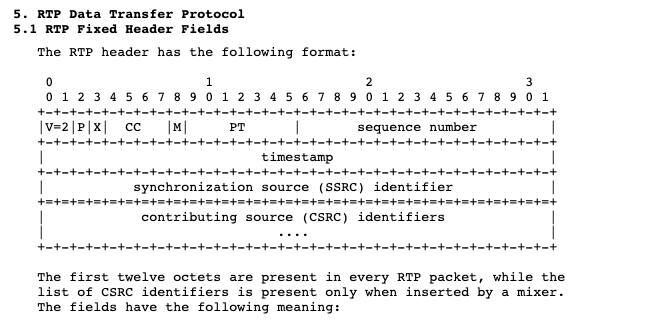

Below is an explanation of the RTP protocol header: the first 12 bytes are fixed, and the CSRC can have multiple entries or none.

- V: The RTP protocol version number, allocated with 2 bits, is presently version number 2.

- P: Padding flag, occupies 1 bit. If P=1, then one or more additional octets are padded at the end of the payload, which are not part of the payload.

- X: Extension flag, occupying 1 bit. If X=1, an extension header follows the RTP header.

- CC: The CSRC counter, which occupies 4 bits, indicates the number of CSRC identifiers.

- M: Marker, occupies 1 bit. Its meaning depends on the payload: for video, it marks the end of a frame; for audio, it marks the start of a talkspurt.

- PT (Payload Type): occupies 7 bits and records the payload type/codec carried in the RTP packet. In streaming, it is mostly used to differentiate between audio streams and video streams, making it easier for the receiver to pick the appropriate decoder.

- Sequence Number: occupies 16 bits and identifies the RTP packets sent by the sender. With each packet transmitted, the sequence number increases by one. This field can be used to check for packet loss when the underlying transport protocol is UDP and network conditions are poor. It can also be used to reorder data when network jitter occurs. The initial value of the sequence number is random, and the sequences for audio packets and video packets are counted separately.

- Timestamp: occupies 32 bits and reflects the sampling instant of the first octet in the RTP packet. Its unit depends on the clock rate of the payload: video typically uses a 90 kHz clock (90000 in the program), while audio usually uses its sampling rate. The receiver uses the timestamp to compute delay and delay jitter, as well as for synchronization control. The timing of packets can be determined based on the RTP packet’s timestamp.

- Synchronization Source (SSRC) Identifier: Occupying 32 bits, it is used to identify the synchronization source. A synchronization source refers to the origin of the media stream, identified by a 32-bit number SSRC identifier in the RTP header, rather than relying on a network address. Receivers utilize the SSRC identifier to distinguish between different sources, enabling the grouping of RTP packets.

- Contributing Source (CSRC) Identifier: each CSRC identifier occupies 32 bits, and there can be 0 to 15 of them. Each CSRC identifies one of the contributing sources whose data is included in the RTP packet payload.
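To make the field layout concrete, here is a minimal Python sketch that decodes the fixed 12-byte header and any CSRC entries as described above. The names `RtpHeader` and `parse_rtp_header` are hypothetical, and the sketch deliberately ignores the extension header and padding handling.

```python
import struct
from dataclasses import dataclass
from typing import List

@dataclass
class RtpHeader:
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int
    csrcs: List[int]

def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the fixed RTP header plus the CSRC list (no extension parsing)."""
    if len(packet) < 12:
        raise ValueError("RTP packet is shorter than the 12-byte fixed header")
    # Two flag bytes, 16-bit sequence number, 32-bit timestamp, 32-bit SSRC
    first, second, seq, timestamp, ssrc = struct.unpack_from("!BBHII", packet, 0)
    csrc_count = first & 0x0F
    if len(packet) < 12 + 4 * csrc_count:
        raise ValueError("RTP packet is too short for its CSRC list")
    csrcs = list(struct.unpack_from(f"!{csrc_count}I", packet, 12))
    return RtpHeader(
        version=first >> 6,              # V: top 2 bits
        padding=bool(first & 0x20),      # P: 1 bit
        extension=bool(first & 0x10),    # X: 1 bit
        csrc_count=csrc_count,           # CC: low 4 bits
        marker=bool(second & 0x80),      # M: 1 bit
        payload_type=second & 0x7F,      # PT: low 7 bits
        sequence_number=seq,
        timestamp=timestamp,
        ssrc=ssrc,
        csrcs=csrcs,
    )
```

Feeding it the first bytes of a captured UDP payload should yield the same version, payload type, sequence number, timestamp, and SSRC that a packet analyzer displays for that packet.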

2.2. RTCP Protocol

As previously mentioned, the RTP protocol is relatively simple and coarse overall, as it does not provide any built-in mechanisms for timely transmission or other Quality of Service (QoS) assurances. Therefore, RTP requires an accompanying protocol to ensure its service quality, and that is the RTCP protocol (full name: Real-time Transport Control Protocol).

The RTP standard defines two sub-protocols: RTP and RTCP.

For example, when transmitting audio and video, issues like packet loss, out-of-order delivery, and jitter are handled by WebRTC with appropriate strategies at the underlying level. However, telling the other party about this “network quality information” in real time during transmission is the job of RTCP. Compared to RTP, RTCP occupies a very small portion of bandwidth, usually only 5%.

Next, let’s briefly review the RTCP protocol specification: First, RTCP packets come in several types:

- SR (Sender Report): transmission and reception statistics from a currently active sender. PT=200

- RR (Receiver Report): reception statistics from a participant that is not an active sender. PT=201

- SDES (Source Description): source description items, including CNAME. PT=202

- BYE: a participant ends the session. PT=203

- APP: application-defined messages. PT=204

- IJ: extended inter-arrival jitter report. PT=195

- RTPFB: transport-layer feedback. PT=205

- PSFB: payload-specific feedback. PT=206

There are other types as well. Here we can focus on two important messages, SR and RR, which let both the sender and receiver know about the network quality status.

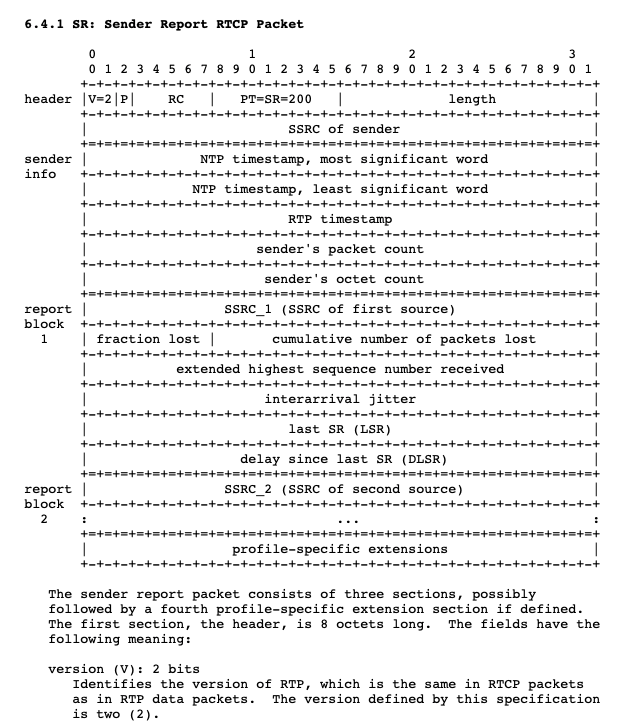

Above is the protocol specification for SR:

- The Header section is used to identify the type of message, for example, whether it is SR (200) or RR (201).

- The “Sender info” section is used to indicate exactly how many packets were sent as the sender.

- The Report block section specifies the status of packet reception from various SSRCs when the sender acts as a receiver.

By reporting the above information, each end can adjust their transmission strategies after receiving feedback data over the network. Naturally, the protocol’s content is not limited to the brief segment above; in practice, it also involves various methods for calculating feedback data. This is not expanded upon here due to limited space.
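As a rough illustration of the SR layout (header, sender info, report blocks), the following Python sketch decodes a Sender Report. The function name `parse_sender_report` and the dictionary keys are hypothetical, and the field sizes follow the standard SR format; profile-specific extensions are ignored.

```python
import struct

def parse_sender_report(packet: bytes) -> dict:
    """Decode an RTCP Sender Report: header, sender info, and report blocks."""
    if len(packet) < 28:
        raise ValueError("packet is too short for an RTCP Sender Report")
    first, packet_type, length_words = struct.unpack_from("!BBH", packet, 0)
    if packet_type != 200:
        raise ValueError("not a Sender Report (PT=200)")
    if (length_words + 1) * 4 > len(packet):
        raise ValueError("length field exceeds the data available")
    report_count = first & 0x1F  # RC: low 5 bits of the first byte
    ssrc, ntp_hi, ntp_lo, rtp_ts, packets_sent, octets_sent = struct.unpack_from(
        "!6I", packet, 4
    )
    blocks = []
    offset = 28  # 4-byte header + sender SSRC + 20 bytes of sender info
    for _ in range(report_count):
        source, lost_word, highest_seq, jitter, lsr, dlsr = struct.unpack_from(
            "!6I", packet, offset
        )
        cumulative_lost = lost_word & 0xFFFFFF
        if cumulative_lost & 0x800000:  # 24-bit signed value
            cumulative_lost -= 1 << 24
        blocks.append({
            "ssrc": source,
            "fraction_lost": (lost_word >> 24) / 256,  # 8-bit fixed-point fraction
            "cumulative_lost": cumulative_lost,
            "highest_seq": highest_seq,
            "jitter": jitter,
            "last_sr": lsr,
            "delay_since_last_sr": dlsr,
        })
        offset += 24
    return {
        "version": first >> 6,
        "ssrc": ssrc,
        "ntp_timestamp": (ntp_hi, ntp_lo),
        "rtp_timestamp": rtp_ts,
        "packets_sent": packets_sent,
        "octets_sent": octets_sent,
        "report_blocks": blocks,
    }
```

The `fraction_lost` and `jitter` values in each report block are the kind of feedback an endpoint can use to adapt its sending strategy.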

2.3. Overview of the RTP Session Process

Having grasped why UDP is chosen and what the RTP/RTCP protocols accomplish, let’s briefly summarize the entire process at the transmission protocol layer:

When the application sets up an RTP session, it determines a pair of destination transport addresses. A destination transport address consists of a network address and a pair of ports: one for RTP packets and the other for RTCP packets. RTP data is sent to an even-numbered UDP port, while the corresponding control data, RTCP, is sent to the adjacent odd-numbered UDP port (even-numbered UDP port +1), thus forming a UDP port pair. The general process is as follows:

- RTP protocol receives the streaming media information stream from the upper layer and encapsulates it into RTP packets.

- RTCP receives control information from the upper layer and encapsulates it into an RTCP control packet.

- RTP sends RTP packets to the even-numbered port in the UDP port pair; RTCP sends RTCP control packets to the odd-numbered port in the UDP port pair.

2.4. Quick Start with Wireshark for Capturing RTP and RTCP Packets

Knowledge gained from reading is ultimately shallow; to truly understand, one must practice it firsthand. Next, let us proceed with actual streaming using WebRTC and capture the packets to reveal the true nature of RTP packets and RTCP messages.

Wireshark is a powerful network packet analysis software capable of displaying the detailed exchange process of network packets, making it an invaluable tool for monitoring network requests and identifying network issues. This powerful packet capture tool encompasses numerous features. As this is not a Wireshark tutorial, you can search and explore additional features on your own. Here, I will only outline a general process:

- Download and install Wireshark (it’s very simple).

- Open your browser and navigate to Tencent Classroom, selecting a free course that is currently live streaming. Typically, WebRTC is used for playback in these scenarios. (Open a new tab to launch the WebRTC debugging tool, which will display the real-time network statistics of WebRTC streaming media on the page.)

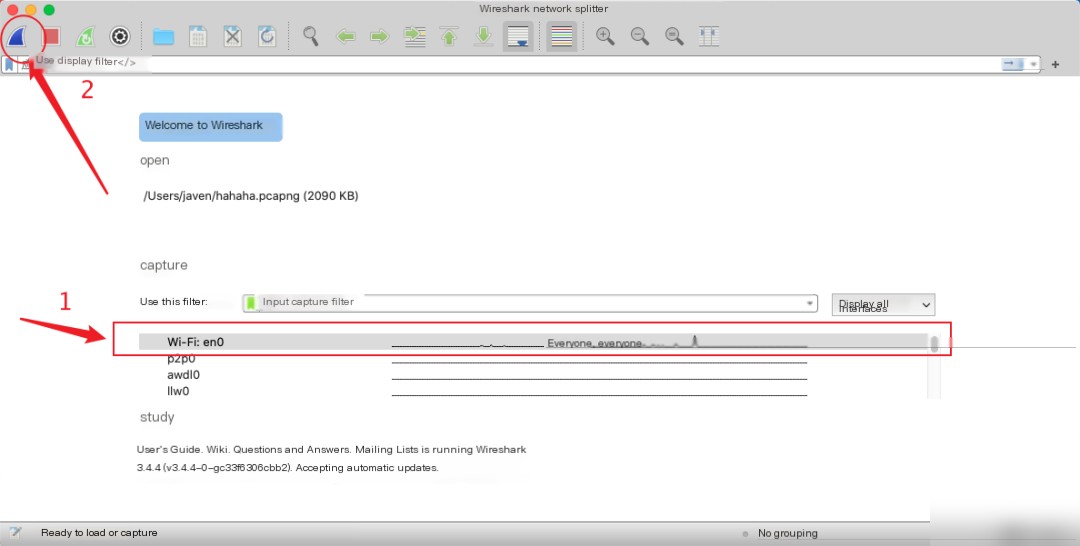

- Open Wireshark and select the network interface to capture packets on. If your machine has multiple network cards, choose the one that carries the traffic. Here, I selected my WiFi interface and clicked the blue button in the top left corner to begin packet capturing.

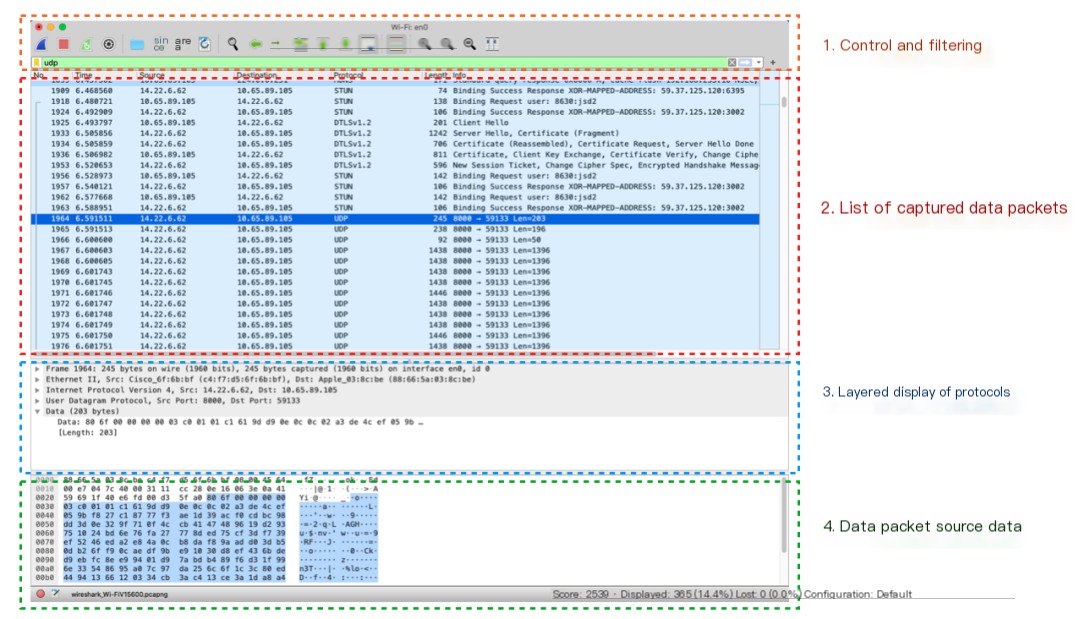

- Once you start packet capturing, a vast number of packets for various protocols will instantly appear (the image below shows what each section of Wireshark does). By entering “udp” in the filter bar labeled ①, you can filter and display only UDP packets in the packet list marked ②. Wireshark also parses packets of certain protocols, and you will see them identified in the Protocol column.

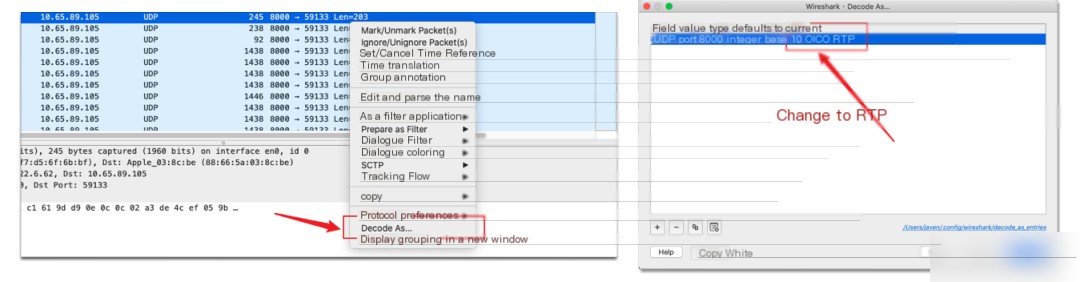

- Since Wireshark does not automatically recognize RTP packets within UDP data streams, you need to right-click on the UDP packet and select “Decode As” to interpret it as an RTP packet.

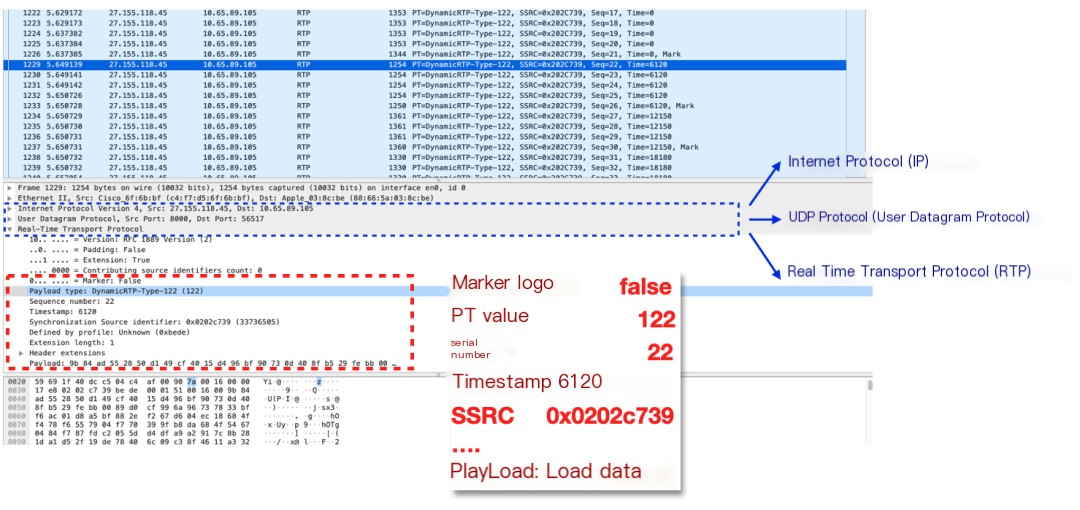

- At this point, Wireshark can identify RTP protocol packets, and within the layered protocol, you can see a clear structure, ordered from top to bottom as: IP => UDP => RTP. When we expand the RTP layer, you can observe that it matches the aforementioned RTP message protocol, indicating version number, padding flag, and so on.

Key fields we should focus on:

- Payload type (PT value): represents the type of payload. Here it is 122, which the SDP negotiated by WebRTC maps to the H264 video payload type.

- Timestamp: the sampling instant recorded here is 6120. It is expressed in clock ticks, so it still has to be converted according to the sampling rate.

- SSRC: the synchronization source identifier is 0x0202c729.

All of the above are RTP header fields; only the payload carries the media data.
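The timestamp conversion mentioned above is a simple division. A minimal sketch follows. The helper name `rtp_elapsed_seconds` and the second timestamp used in the usage note are made up for illustration, and the clock rate has to come from the negotiated SDP. Because the initial RTP timestamp is arbitrary, only the difference between two timestamps is meaningful.

```python
def rtp_elapsed_seconds(earlier_ts: int, later_ts: int, clock_rate_hz: int) -> float:
    """Media time between two RTP timestamps, tolerating 32-bit wraparound."""
    ticks = (later_ts - earlier_ts) & 0xFFFFFFFF
    return ticks / clock_rate_hz
```

For example, if a later video packet carried timestamp 9120 on a 90 kHz clock, `rtp_elapsed_seconds(6120, 9120, 90000)` would give 3000 / 90000 of a second, that is, one frame interval at 30 frames per second.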

The characteristics of RTP aren’t limited to support for being transported over UDP, which benefits the low-latency transmission of audio and video data. It also allows endpoints to negotiate the encapsulation and codec format of audio and video data through other protocols. The `payload type` field is quite flexible, supporting a wide variety of audio and video data types. For more information, you can refer to: RTP payload formats.

Let’s take a closer look at the audio and video frames of the RTP packet:

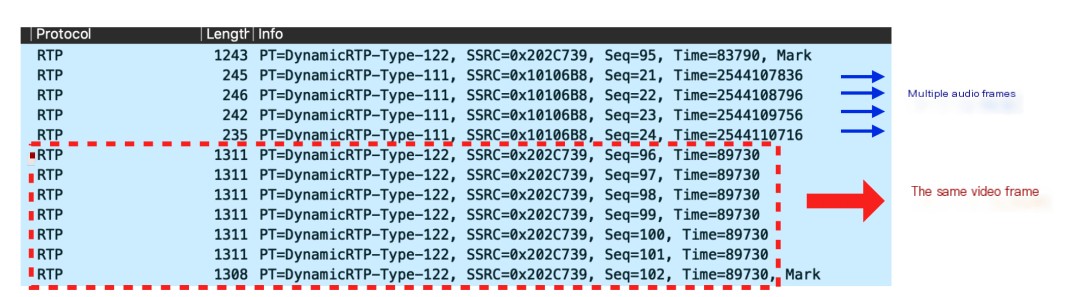

Among the packets with seq=21 to seq=24, each one is an individual audio frame, so the timestamps are different. In contrast, the packets within the red box from seq=96 to seq=102 collectively form a video frame with PT=122, resulting in identical timestamps for these packets. This occurs because a video frame contains a larger amount of data, necessitating division into multiple packets for transmission. In comparison, audio frames are smaller and can be sent in a single packet, as can be seen from the packet length, where the video packets are significantly larger than the audio packets. Furthermore, the packet with seq=102 has the mark field set to true, indicating it is the last packet of a video frame. By checking the sequence, it can be determined whether there is any disorder or packet loss in receiving the audio-video data.
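The two observations above (same timestamp means same frame, gaps in the sequence mean loss) can be sketched as follows. The function names and the `(seq, timestamp, marker)` tuple layout are illustrative assumptions, and the loss check assumes the first packet in the list is the earliest one and that fewer than 65536 packets are examined at a time.

```python
def missing_sequence_numbers(received):
    """Sequence numbers absent between the first and the furthest packet seen.

    Offsets are taken modulo 2**16 because the RTP sequence number is a
    16-bit field that wraps around.
    """
    if not received:
        return []
    first = received[0]
    offsets = {(seq - first) & 0xFFFF for seq in received}
    return [(first + off) & 0xFFFF for off in range(max(offsets) + 1)
            if off not in offsets]

def group_into_frames(packets):
    """Group (seq, timestamp, marker) tuples by timestamp.

    Packets sharing a timestamp belong to the same frame; the marker bit
    flags the last packet of a video frame.
    """
    frames = {}
    for seq, timestamp, marker in packets:
        frame = frames.setdefault(timestamp, {"seqs": [], "marker_seen": False})
        frame["seqs"].append(seq)
        frame["marker_seen"] = frame["marker_seen"] or marker
    return frames
```

A frame is ready for decoding only when its marker has been seen and `missing_sequence_numbers` reports no gaps among its packets.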

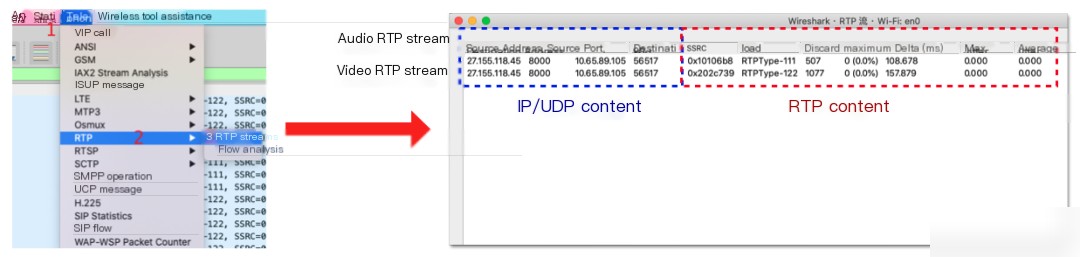

- In the top menu bar, go to ‘Telephony’ => ‘RTP’ to open the information panel, where you’ll see there is currently one audio RTP stream and one video RTP stream. On the left, it analyzes and displays the source address and port, as well as the destination address and port of the stream. On the right, you’ll find RTP-related details, including the stream’s SSRC, payload type, and packet loss information.

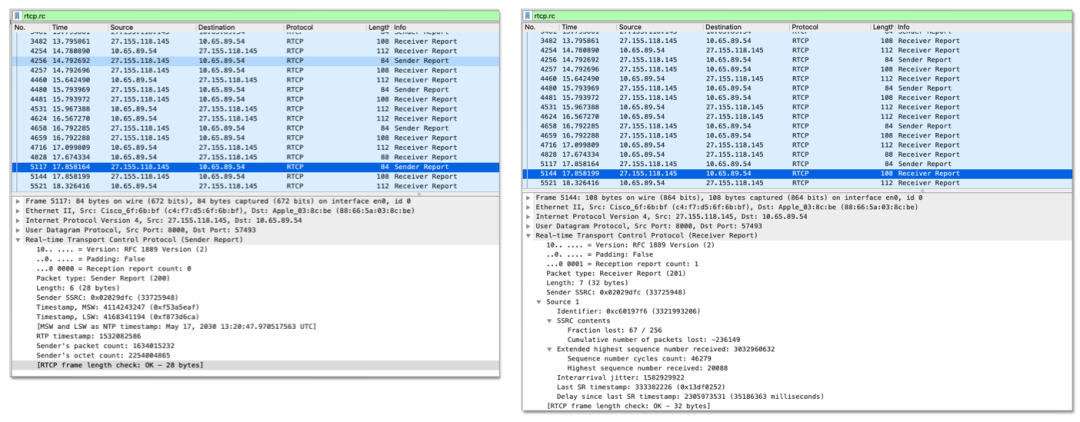

- Finally, let’s take a brief look at RTCP. By entering “rtcp” in the filter bar, you can filter to see the Sender Report and Receiver Report. Their PT (packet type) values are 200 and 201, respectively, with the report SSRC being 0x02029dfc. Additionally, you can observe detailed information regarding the sent and received packets. For an in-depth understanding, refer to the RTCP specification for further analysis.

3. Summary

Many people believe that when a developer is learning WebRTC, it is enough to get hands-on quickly and apply it in business scenarios, and they question whether it is necessary to understand these transmission protocols any further. However, knowing how to use a technology does not necessarily mean you can leverage its full potential. I believe that the extent to which you can utilize a technology is often determined by how deeply you understand its underlying mechanisms.

This article gave a brief overview of why real-time audio and video choose UDP as the transport layer protocol, along with a simple introduction to two significant protocols in WebRTC: RTP and RTCP. WebRTC involves and integrates various technologies such as audio and video processing, transmission, and security encryption, and each module involves protocols that could warrant an article of their own. Due to space limitations and the limits of my current knowledge, this article cannot cover more. If you are looking to learn and practice WebRTC, it will only give you a preliminary understanding at the transport protocol level. Since protocols sit in the lower layers and are typically overlooked in everyday use, I have also shown how to quickly capture packets to aid understanding. For deeper study, additional resources should be consulted.