0x01 Overview

This section gives a brief overview of open-source solutions and why they matter. Open-source solutions play an important role in today’s technology landscape, offering transparency, flexibility, and community-driven development.

Traffic monitoring is a common defense mechanism when building an information security system. From traffic capture to logging, and from log analysis to threat alerting, products built on a traffic analysis model share similar processing logic at the top level. Whether the engine is Suricata or Snort, the flow is much the same; at the most basic level, these systems can be understood as “large-scale string processing and filtering systems.”

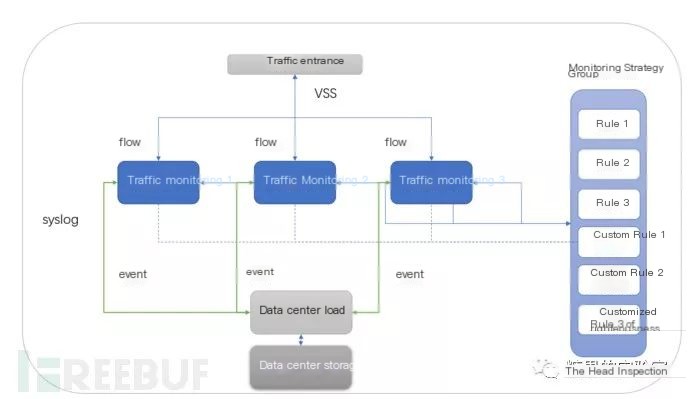

In real production environments, products from multiple vendors are often used alongside open-source solutions or self-developed systems. Whichever approach is adopted, a common top-level model for traffic data processing can be abstracted: a typical flow of traffic filtering followed by log analysis. Our practical experience is with Tenable products, but the lessons apply broadly to similar products, open-source solutions, and self-developed systems.

The difference between closed-source commercial software and open-source community software extends beyond the products themselves to their ecosystems and ongoing support. When using open-source software, we received significant help from peers and the community. When working with Tenable, we received expert-level guidance that greatly helped us understand and apply the product. We chose Tenable for illustration because of its distinctive design concepts and classic functionality, and we use it here to explain the elements common to traffic analysis systems.

0x02 Basic Processing Model

From our perspective, a traffic analysis security threat system has 5 basic tasks to fulfill:

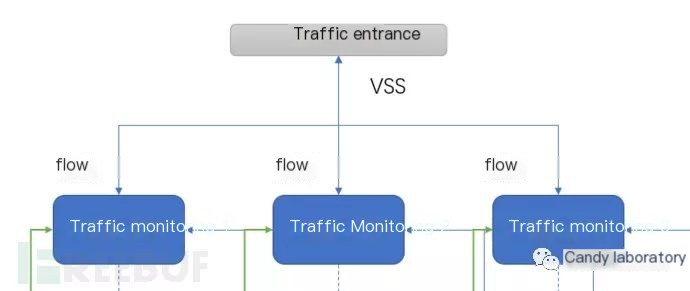

1. Traffic Acquisition: Capturing network traffic is the foundational function. Threat event data can only be derived by applying filtering rules to traffic that has actually been captured. (Note that the image shows traffic distribution equipment in use.)

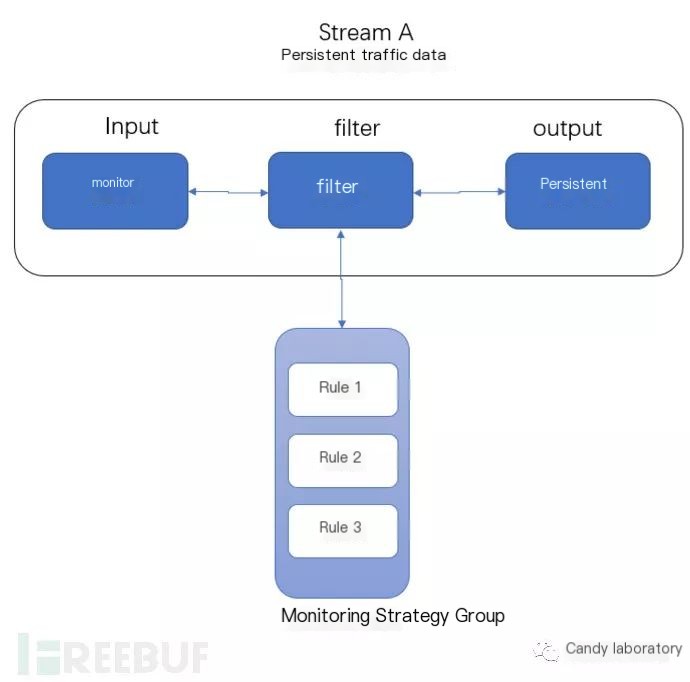

2. Traffic Filtering: Filtering data during the acquisition phase is resource-intensive. Filter conditions are defined, parsed into rules, then deployed and executed, so that data filtering and anomaly detection happen as traffic is read. However, the more filtering rules there are, the lower the system’s efficiency, to the point of creating bottlenecks or processing delays.

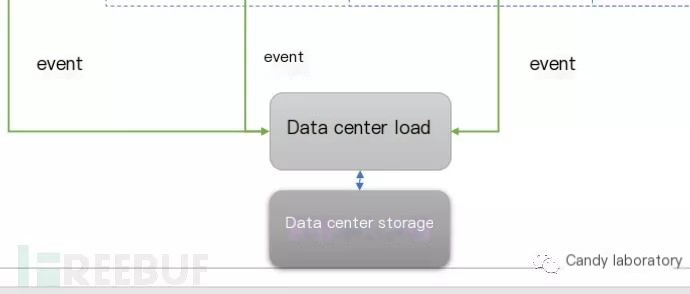

3. Data Storage: Analyzing data during the acquisition phase costs both time and performance. Caching the data and writing it to logs lets analysis run in near real time instead, which eases the load on the system. Stored data also makes it possible to apply data analysis models and to aggregate and correlate records, enabling more precise algorithmic anomaly detection. For this reason, data must be persisted and retained for a defined period within its lifecycle.

4. Data Analysis: Storing data is not the end goal; the point is to mine log data for the characteristics of threat events. If traffic monitoring is treated as early-warning processing, risks can be flagged and mitigated before they materialize. Where filtering is real-time rule matching against network traffic, data analysis is the deeper mining of information after the traffic logs have been stored.

5. Threat Alerts: Traffic data is the system’s input and does not generate value by itself. The system demonstrates its threat awareness value when it produces effective alerts that notify the responsible parties as early as possible so the threat can be prevented. Host-based detection pinpoints a threat at the stage where the threat action is already taking place; alerts raised earlier, during rule filtering of monitored traffic or during predictive data analysis, are what make the awareness proactive.
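The five tasks above can be sketched as a chain of small functions. This is a minimal illustration only, not how Tenable, Suricata, or Snort implement them: the `Record` type, the two substring rules in `RULES`, the tab-separated input format, and the alert threshold are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    src_ip: str
    payload: str


# Hypothetical rules: rule name -> lowercase substring that marks a payload as suspicious.
RULES = {
    "sql_injection": "union select",
    "path_traversal": "../",
}


def acquire(raw_lines):
    """Task 1: turn raw 'ip<TAB>payload' lines into records."""
    for line in raw_lines:
        src_ip, _, payload = line.partition("\t")
        yield Record(src_ip, payload)


def filter_traffic(records, rules):
    """Task 2: substring matching as traffic is read; yields (record, rule_name) hits."""
    for record in records:
        lowered = record.payload.lower()
        for name, needle in rules.items():
            if needle in lowered:
                yield record, name


def store(hits):
    """Task 3: persist hits; an in-memory list stands in for a log store."""
    return list(hits)


def analyze(stored_hits):
    """Task 4: aggregate stored hits per source address."""
    return Counter(record.src_ip for record, _ in stored_hits)


def alert(hit_counts, threshold):
    """Task 5: report sources whose hit count reaches the threshold."""
    return sorted(ip for ip, count in hit_counts.items() if count >= threshold)


raw = [
    "10.0.0.5\tGET /item?id=1 UNION SELECT password FROM users",
    "10.0.0.5\tGET /static/../../etc/passwd",
    "10.0.0.9\tGET /index.html",
]
stored = store(filter_traffic(acquire(raw), RULES))
print(alert(analyze(stored), threshold=2))  # ['10.0.0.5']
```

Note that `filter_traffic` checks every rule against every record, so its cost grows with the number of rules, which is the efficiency trade-off described under Traffic Filtering.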

0x03 “Flow” Classic Design Processing Model

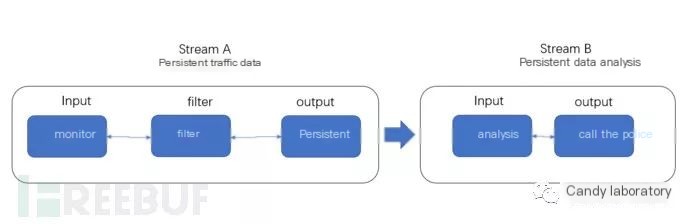

We use the notion of a “flow” to explain how a traffic threat intelligence system works. Two flows, Stream A and Stream B, together cover the system’s five components.

Core metrics of a traffic threat system: False Negative Rate and False Positive Rate.
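For reference, both metrics can be computed from labeled evaluation counts using their standard definitions: the false negative rate is the share of real threats that were missed, and the false positive rate is the share of benign events that were flagged. The function and parameter names below are illustrative, and the counts in the usage lines are made-up numbers, not measurements from any system.

```python
def false_negative_rate(missed_threats, detected_threats):
    """FNR = FN / (FN + TP): fraction of real threats that raised no alert."""
    total = missed_threats + detected_threats
    return missed_threats / total if total else 0.0


def false_positive_rate(false_alerts, benign_ignored):
    """FPR = FP / (FP + TN): fraction of benign events that raised an alert."""
    total = false_alerts + benign_ignored
    return false_alerts / total if total else 0.0


# Made-up counts for illustration only.
print(false_negative_rate(missed_threats=5, detected_threats=95))   # 0.05
print(false_positive_rate(false_alerts=20, benign_ignored=980))     # 0.02
```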

False Negative Rate: In the Stream A phase, traffic filtering can run without reference to accumulated historical data. Leaving aside blacklist/whitelist mechanisms and immediate correlation statistics, filtering depends on traffic matching the anomaly rules. Because a system without self-learning relies on manually defined anomaly rules, the completeness of those rules determines the false negative rate.

False Positive Rate: In the Stream B phase, the number of preliminary threat judgments produced by traffic rule filtering grows in proportion to the number of defined rules. Even assuming an ideally complete rule set with no false negatives, false positives can still occur. Methods to reduce false positives include:

1: Cross-checking alerts from multiple threat systems.

2: Utilizing aggregate mathematical statistical models for auxiliary judgments.

3: Scoring based on characteristic tags and accumulating scores, with alerts triggered above a certain threshold.

4: Re-confirming threat payloads with third-party libraries.

There are likely other effective ways to identify and confirm false positives among existing alerts. Rule strategy is a dynamic process.
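Method 3 in the list above, tag-based scoring with a threshold, might look like the following sketch. The tag names, weights, and threshold are hypothetical values chosen for illustration; a real deployment would tune them against its own alert history.

```python
# Hypothetical tag weights; none of these values come from a real product.
TAG_SCORES = {
    "known_bad_ip": 50,
    "payload_signature": 40,
    "suspicious_user_agent": 20,
    "rare_port": 15,
}
ALERT_THRESHOLD = 60  # assumed cut-off


def score_event(tags, tag_scores=TAG_SCORES):
    """Sum the weights of all tags attached to an event; unknown tags score 0."""
    return sum(tag_scores.get(tag, 0) for tag in tags)


def should_alert(tags, threshold=ALERT_THRESHOLD):
    """Alert only when the accumulated score is above the threshold."""
    return score_event(tags) > threshold


print(should_alert({"rare_port", "suspicious_user_agent"}))     # False (score 35)
print(should_alert({"known_bad_ip", "suspicious_user_agent"}))  # True (score 70)
```

A single weak indicator never triggers an alert by itself here, which is how accumulation helps suppress false positives.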

Some friends have found their systems suddenly breached; in such cases, deploying multiple security systems in the same environment is a workable response. Systems A and B may each have basic rules for analyzing threat anomalies, but some rules may not be publicly visible, and some systems support custom plugins while others do not. Whether or not the systems’ rule sets overlap, comparing their alert results is important: an alert raised by several systems typically indicates a high threat level. Diverse, interconnected data sources are common; honeypots, firewalls, and traffic analysis acting on one network environment at the same time examine identical input data from different threat perspectives.
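Comparing alerts across systems can be sketched as a set-overlap check. The example assumes each system's alerts have already been normalized to a common `(source IP, destination IP)` key, which is a simplification; the system names and addresses are invented for illustration.

```python
from collections import defaultdict


def systems_per_alert(alerts_by_system):
    """Map each normalized alert key to the set of systems that reported it."""
    seen = defaultdict(set)
    for system, alerts in alerts_by_system.items():
        for key in alerts:
            seen[key].add(system)
    return seen


def corroborated(alerts_by_system, min_systems=2):
    """Return alert keys reported by at least `min_systems` different systems."""
    seen = systems_per_alert(alerts_by_system)
    return sorted(key for key, systems in seen.items() if len(systems) >= min_systems)


# Invented sample data: (source IP, destination IP) pairs per system.
alerts = {
    "system_a": [("10.0.0.5", "192.168.1.10"), ("10.0.0.7", "192.168.1.20")],
    "system_b": [("10.0.0.5", "192.168.1.10")],
    "honeypot": [("10.0.0.5", "192.168.1.10"), ("10.0.0.8", "192.168.1.30")],
}
print(corroborated(alerts))  # [('10.0.0.5', '192.168.1.10')]
```

Alerts seen by only one system are not discarded in practice; they are simply lower priority than the corroborated ones.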

0x04 Similarities and Differences Between Traffic and Honeypot Monitoring

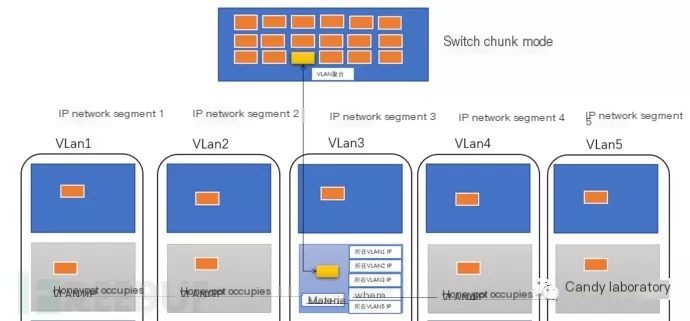

Honeypots monitor traffic from abnormal network behavior using a “wait and see” approach. They are deployed using the switch’s port aggregation (trunk) mode, so that one IP address from each of several VLAN segments is brought together on a single machine’s ingress port.

With multiple IP addresses and VLAN tagging configured, the honeypot machine receives traffic from different VLANs, much like ARP-level handling or the DR (direct routing) mode of load balancing. Traffic monitoring works the other way around: aggregated traffic is directed to multiple monitoring machines, forming many-to-one or many-to-many relationships. Each monitoring machine uses the same filtering rules, and each rule corresponds one-to-one with an anomalous behavior threat. In Tenable systems, these roles are categorized as SC and NC, codes for the tasks of monitoring and of storage and analysis.

0x05 Practice and Problem Solving

As mentioned, a threat analysis system is essentially a “large-scale string processing system.” Once traffic has been converted into string data, threat matching comes down to searching for characteristic substrings according to “rule conditions.” Tenable is an excellent product, notable for its distinctive design philosophy and expert support. Its rule system is user-friendly and allows custom plugins, additional rules, and extensions to system functionality. As with other systems, multi-machine deployment raises the question of how to distribute policies across machines; we solved this by using Ansible for automated provisioning. If you face a similar situation with Suricata, the same approach may be worth exploring.

Auxiliary analysis tools are indispensable for traffic monitoring: Wireshark, tcpdump, and WinDump help with device debugging and with inspecting real traffic. Wireshark is a classic tool with plentiful learning resources, including books with titles along the lines of “Wireshark Network Analysis: From Beginner to Practice.” A good book can significantly help day-to-day traffic analysis work.

Persisting log data has been covered extensively before. One noteworthy milestone is the release of Graylog 3, the third major version of Graylog. Many threat analysis systems use Elasticsearch (ES) for data persistence; Graylog also stores security data through ES and has its own distinctive features as an ES-based management information system.

For friends whose production systems may have been affected by recent breaches, here is a list of open-source security systems for initial hardening: Graylog, Snort, Suricata, Wazuh, Moloch, Ansible, OpenVAS, etc. Hopefully this provides timely help.

0x06 Summary

The questions addressed here, namely how to deploy multiple threat detection systems in a shared network environment, how to abstract their core processing model, and how to summarize their key processing tasks, grew out of recent network intrusion incidents experienced by friends outside the security industry. When the unexpected happens, what matters most is quickly selecting and deploying security systems to harden the production environment and protect assets. This article draws on production practice with Tenable and represents personal views, offered for reference.