Introduction

Juejin recently put out a call for technical feature articles, so I took the opportunity to write about performance optimization and performance evaluation of network protocols. This article covers three main areas: performance indicators of network protocols, performance optimization strategies, and performance evaluation methods. I have analyzed each of them in depth and hope to exchange ideas with everyone.

1. Performance Indicators of Network Protocols

1. Latency

Latency is a metric that measures the time required for data to travel from the source to the destination. For real-time applications like VoIP (voice calls) and online gaming, reducing latency is crucial because it directly affects user experience.

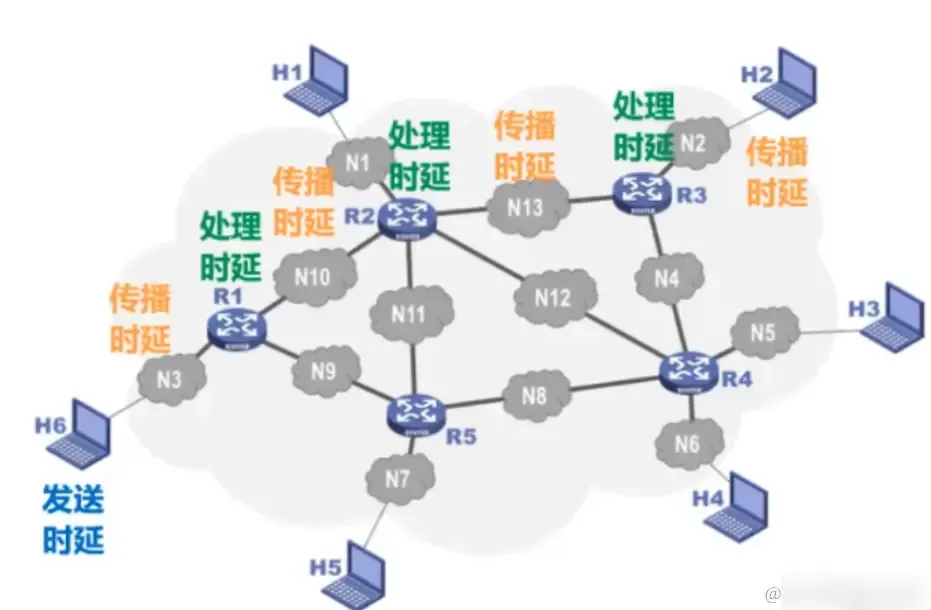

The path between source and destination hosts is made up of multiple links and routers. Thus, network latency is primarily composed of:

- Transmission delay
- Propagation delay
- Processing delay

The figure below shows the calculation formula:

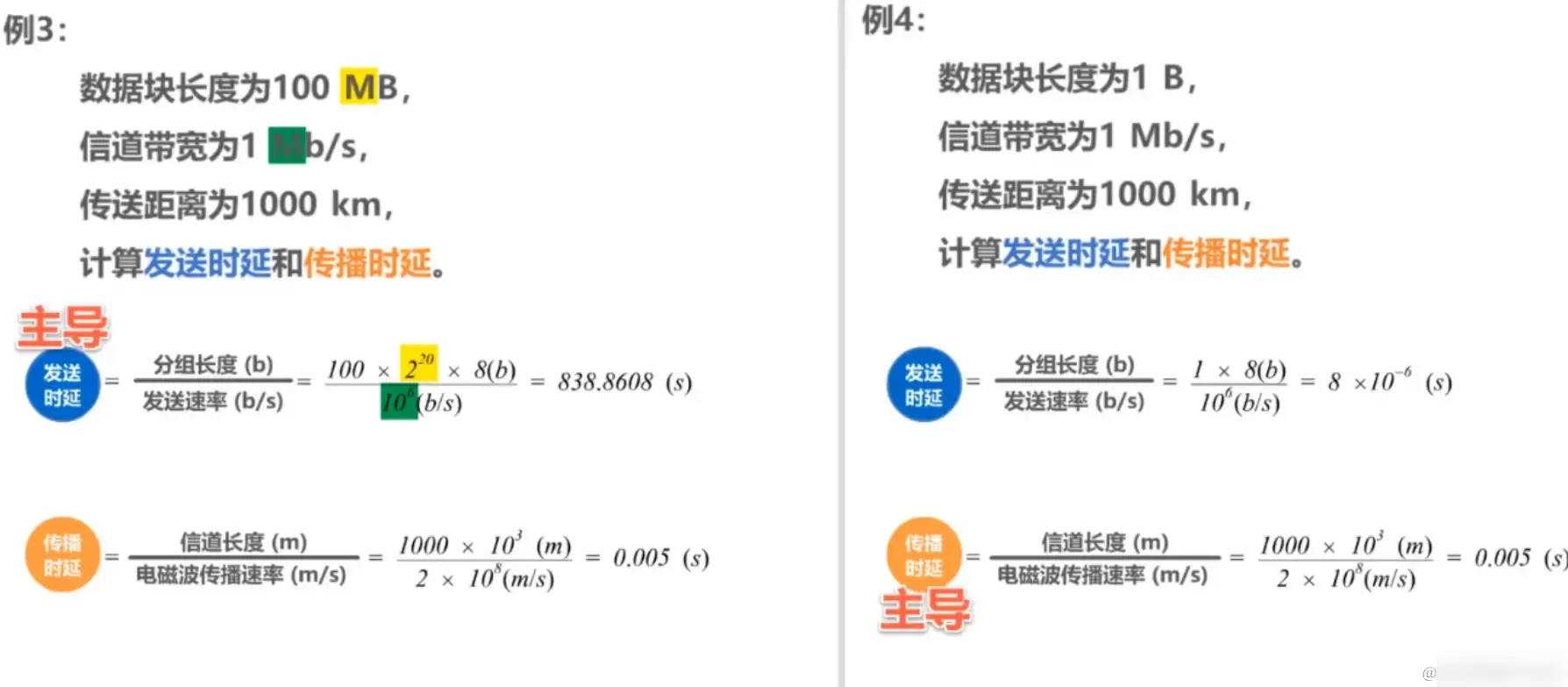

Processing delay is generally negligible. Which of the remaining delays dominates the total network delay depends on the **specific situation**, as the example in the figure below illustrates:

When the amount of data to send is large relative to the path length, transmission delay is the dominant factor. When the path is very long relative to the amount of data, propagation delay dominates (e.g., sending data from Earth into space).

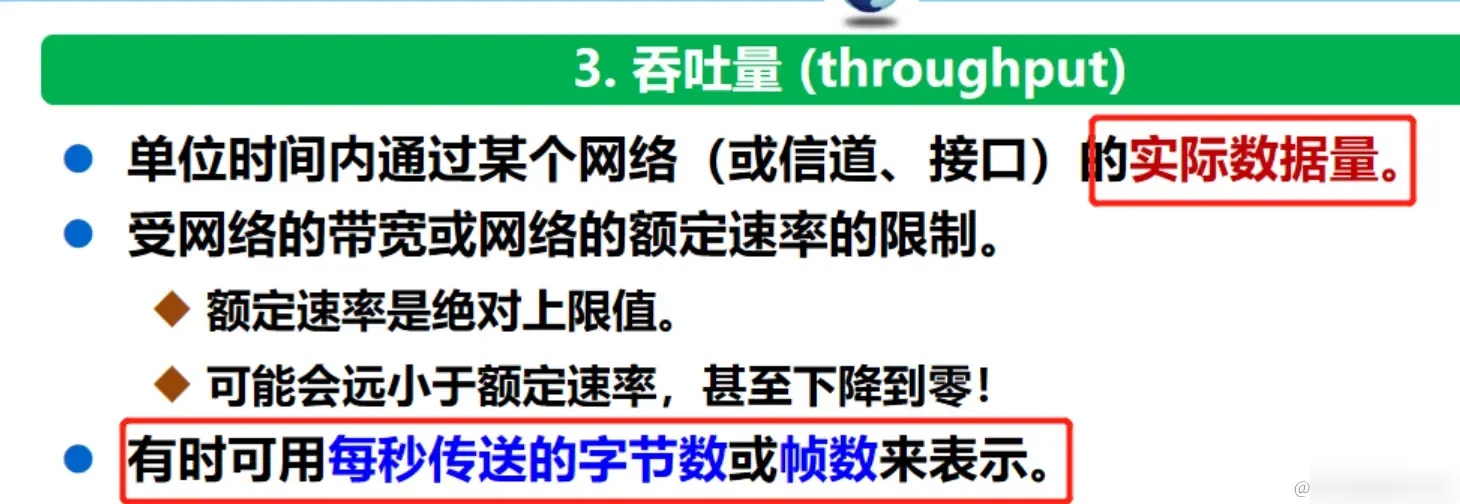
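To make the comparison concrete, here is a minimal sketch that assumes the standard definitions: transmission delay is data size divided by link rate, and propagation delay is distance divided by signal speed. The function names and the default signal speed of 2×10^8 m/s are assumptions chosen for illustration, not values taken from the figures.

```python
def transmission_delay(size_bits: float, rate_bps: float) -> float:
    """Time to push all bits onto the link, in seconds."""
    return size_bits / rate_bps


def propagation_delay(distance_m: float, speed_mps: float = 2.0e8) -> float:
    """Time for a signal to travel the link, in seconds.

    The default speed is an assumed typical value for cable media.
    """
    return distance_m / speed_mps


def dominant_delay(size_bits: float, rate_bps: float, distance_m: float) -> str:
    """Report which of the two delays is larger for the given scenario."""
    t = transmission_delay(size_bits, rate_bps)
    p = propagation_delay(distance_m)
    return "transmission" if t >= p else "propagation"


# Large file over a short, slow link: 100 MB over 1 km at 1 Mbps.
print(dominant_delay(size_bits=8e8, rate_bps=1e6, distance_m=1_000))

# One byte over a long, fast link: 8 bits over 1000 km at 1 Gbps.
print(dominant_delay(size_bits=8, rate_bps=1e9, distance_m=1_000_000))
```

In the first case the transmission delay is 800 seconds against a few microseconds of propagation; in the second, propagation takes about 5 ms while transmission takes nanoseconds.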

2. Throughput

Throughput refers to the amount of data processed by the network per unit of time. For video streaming and large data transfers, high throughput ensures a smoother experience and faster data processing rates.

Throughput represents the amount of data passing through a network (or channel, interface) per unit of time. It is often used to measure real-world networks to understand how much data can actually pass through. Throughput is limited by the network’s bandwidth or rated speed.

Bandwidth is a fixed property of the link, whereas throughput is what is actually achieved: it can never exceed the available bandwidth and is usually lower.

Take a firewall as an example. Its throughput is determined mainly by its network interface cards and by the efficiency of its processing algorithms. Algorithms that involve heavy computation, in particular, can push the real traffic volume far below the rated figure. This is why many firewalls advertised as 100 Mbps devices, but built on software algorithms, handle only about 10 to 20 Mbps in practice. A purely hardware firewall performs these computations in hardware, can reach a line-rate throughput of 90 to 95 Mbps, and is a true 100 Mbps firewall.

3. Bandwidth

Bandwidth utilization reflects the efficient use of network resources. By optimizing bandwidth utilization, network congestion can be reduced, and data transmission efficiency can be improved.

Bandwidth represents the ability of a network's communication line to transmit data: the maximum data rate achievable from one point to another in the network per unit of time, measured in bits per second (bps).

For example, a home connection advertised as "800M" actually means 800 Mbps (800 Mb/s). Dividing by 8 converts bits to bytes: 800 Mbps / 8 = 100 MB/s. This is the ideal case; the actual speed should be estimated from throughput.

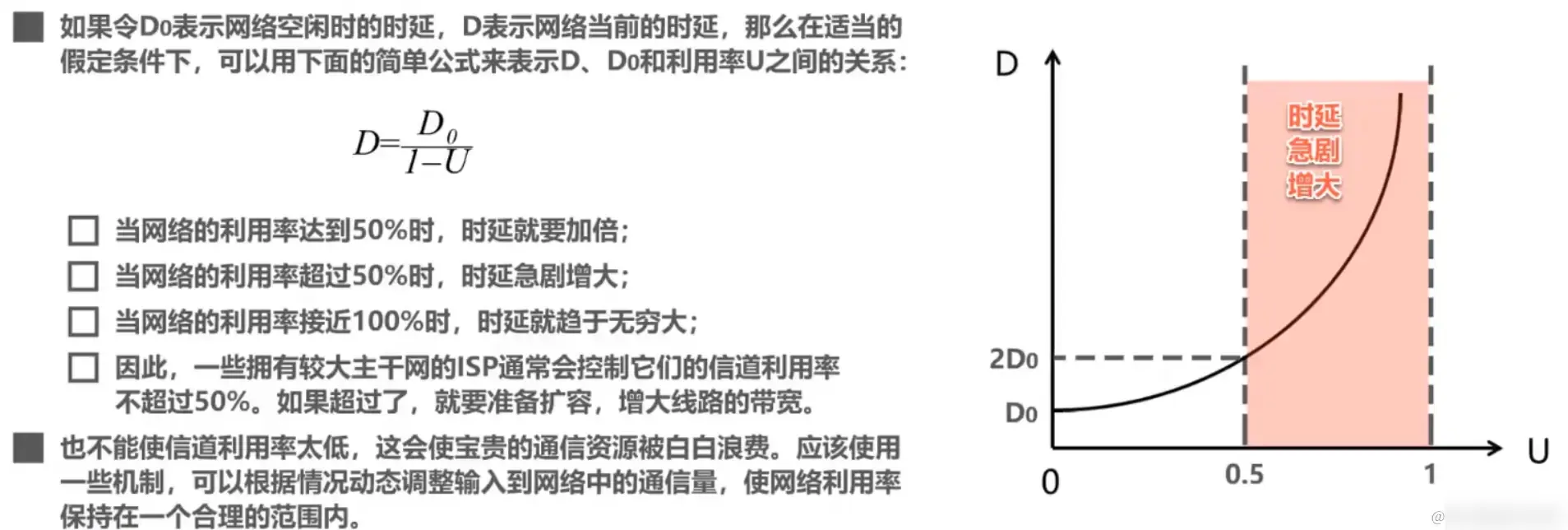
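The unit conversion above can be sketched as follows. The helper names and the `efficiency` parameter (a rough stand-in for the gap between bandwidth and real throughput) are assumptions for illustration only.

```python
def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


def transfer_time_seconds(file_megabytes: float, bandwidth_mbps: float,
                          efficiency: float = 1.0) -> float:
    """Estimate download time; efficiency < 1.0 models throughput below bandwidth."""
    rate = mbps_to_megabytes_per_second(bandwidth_mbps) * efficiency
    return file_megabytes / rate


print(mbps_to_megabytes_per_second(800))        # ideal rate in MB/s
print(transfer_time_seconds(1000, 800))         # 1000 MB at the ideal rate
print(transfer_time_seconds(1000, 800, 0.8))    # same file at an assumed 80% efficiency
```

With an assumed 80% efficiency, the same 1000 MB file takes about 12.5 seconds instead of 10, which is why the rated bandwidth alone overstates real download speed.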

4. Utilization Rate

Utilization is divided into:

- Channel utilization rate: the percentage of time during which a particular channel has data passing through it
- Network utilization rate: the weighted average of the channel utilization rates across the entire network

According to queuing theory, as the utilization rate of a channel increases, so does the delay it introduces. Higher channel utilization is therefore not always better, and as a rule of thumb it is kept below 50%.
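A commonly used textbook approximation for this relationship is D = D0 / (1 - U), where D0 is the delay when the network is idle and U is the utilization between 0 and 1. The sketch below assumes that approximation; the symbol and function names are chosen here for illustration and do not come from the article.

```python
def network_delay(idle_delay: float, utilization: float) -> float:
    """Approximate delay D = D0 / (1 - U) for utilization U in [0, 1)."""
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in the range [0, 1)")
    return idle_delay / (1.0 - utilization)


# Relative delay (idle delay = 1) at increasing utilization levels.
for u in (0.0, 0.5, 0.8, 0.9, 0.99):
    print(f"U = {u:.2f} -> delay is about {network_delay(1.0, u):.1f}x the idle delay")
```

Under this model the delay has already doubled at 50% utilization and grows without bound as utilization approaches 100%, which is consistent with keeping utilization moderate.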

To summarize, utilization is divided into channel utilization rate and network utilization rate:

- Channel utilization rate
  - Describes the percentage of time a **channel** is in use (**has data passing through it**)
  - A **completely idle** channel has a utilization rate of **zero**
- Network utilization rate
  - The weighted average of the channel utilization rates across the entire network
- Higher channel utilization is not always better
  - Too low, and resources are wasted
  - Too high, and the **delay caused by that channel increases rapidly**

2. Performance Optimization Strategies

1. Reduce Latency

- Optimize Routing Algorithms: Choose shorter or faster paths to reduce packet transmission time. Questions on this topic come up often in interviews, so it is worth knowing well.

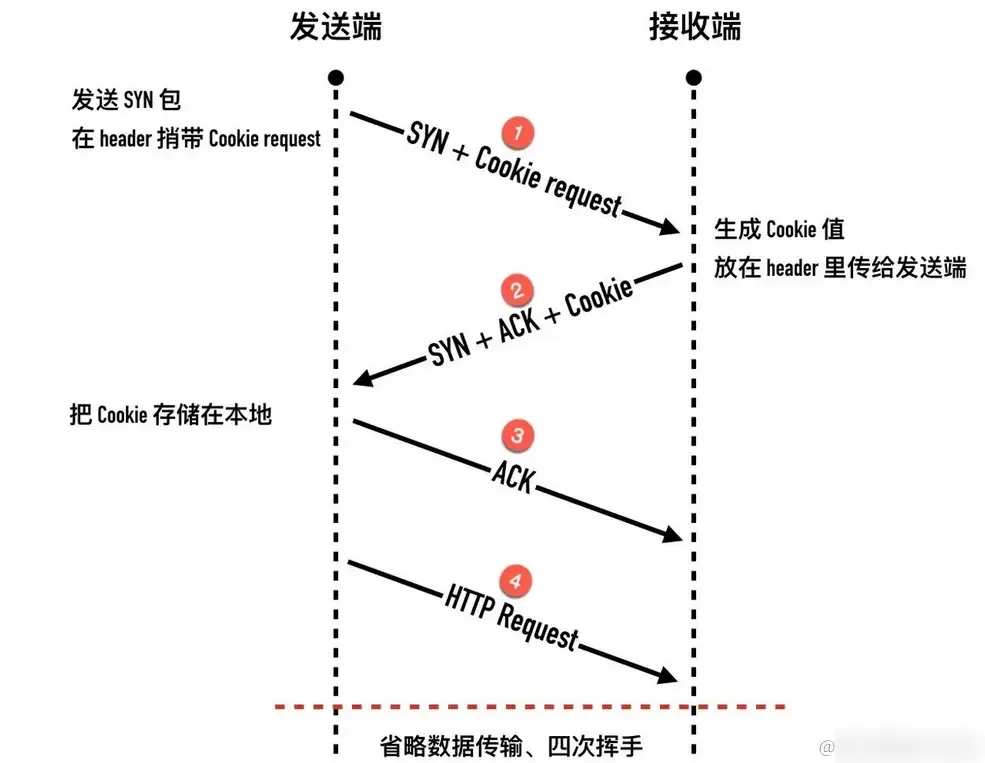

- Enable TCP Fast Open: TCP Fast Open speeds up TCP connection establishment. On repeat connections to the same server, the client can carry data in the first packet (the SYN) of the three-way handshake, using a cookie obtained from an earlier connection, which saves one round trip before data starts flowing.

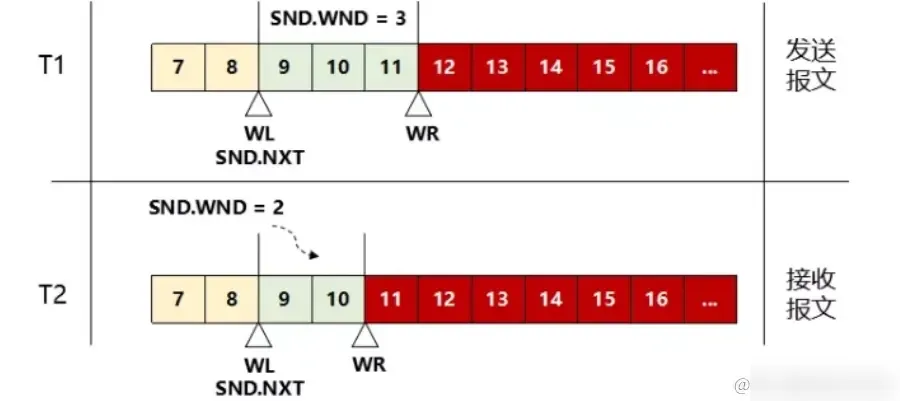

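- Enable TCP Window Scaling: Window scaling lets the TCP receive window grow beyond the 64 KB limit of the original header field. This raises the achievable throughput on high-bandwidth, high-latency paths and so shortens total transfer time.

- Enable TCP SACK: With selective acknowledgment, the receiver tells the sender exactly which segments have arrived, so only the missing segments are retransmitted. This shortens recovery from packet loss during congestion.

- Enable TCP BBR: BBR is a newer congestion control algorithm that estimates the bottleneck bandwidth and round-trip time of the path instead of treating packet loss as the main congestion signal. This helps keep queues short and latency low.

- Use Faster Transmission Media: For example, replace copper wires with optical fiber. Signals propagate at a similar speed in both media, so the gain comes mainly from fiber's much higher bandwidth, which lowers transmission delay, rather than from lower propagation delay.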
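As a rough sketch of how two of the TCP options above can be requested from application code, the example below uses Python's `socket` module. It assumes a Linux host: `socket.TCP_FASTOPEN` and `socket.TCP_CONGESTION` are only defined on platforms that support them, and the kernel may still reject either request (for instance if the BBR module is not loaded or Fast Open is disabled system-wide). Window scaling and SACK are system-wide kernel settings on Linux (`net.ipv4.tcp_window_scaling`, `net.ipv4.tcp_sack`) rather than per-socket options, so they are not shown. The helper names and the queue length of 16 are hypothetical.

```python
import socket


def try_setsockopt(sock: socket.socket, option_name: str, value) -> bool:
    """Set a TCP-level option if this platform exposes it; report success."""
    option = getattr(socket, option_name, None)
    if option is None:
        # The constant is not defined on this OS or Python build.
        return False
    try:
        sock.setsockopt(socket.IPPROTO_TCP, option, value)
        return True
    except OSError:
        # The kernel rejected the request (unsupported or disabled).
        return False


def make_listener(host: str = "127.0.0.1", port: int = 0):
    """Create a listening socket and opportunistically enable TFO and BBR."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, port))
    sock.listen()
    applied = {
        # On Linux the value is the maximum number of pending Fast Open requests.
        "TCP_FASTOPEN": try_setsockopt(sock, "TCP_FASTOPEN", 16),
        # Ask the kernel to use the BBR congestion control algorithm.
        "TCP_CONGESTION": try_setsockopt(sock, "TCP_CONGESTION", b"bbr"),
    }
    return sock, applied
```

Calling `make_listener()` returns the socket together with a dictionary showing which options the kernel accepted, so the server can log what is actually in effect instead of assuming it.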

1. **Use CDN (Content Delivery Network)**: A CDN is a set of geographically distributed servers that cache website content and serve it to users from a nearby node.

2. **Use Compression**: Compression can reduce file size, thus reducing download time. Common compression formats include **Gzip** and **Brotli**.

3. **Use HTTP/2**: HTTP/2 is a major revision of the HTTP protocol (it has since been followed by HTTP/3). It uses multiplexing, which allows multiple requests and responses to share one connection at the same time, reducing network latency and improving performance.

4. **Use WebP Format**: WebP is a modern image format. WebP images are generally smaller than JPEG and PNG images of comparable quality, so they download and load faster.

5. **Use Video and Audio Streaming**: For large media files, video and audio streaming can reduce load times. Users can start watching videos or listening to audio while the file is downloading.

6. **Use Preloading**: Preloading speeds up page loads by fetching resources before the user requests them. It can be implemented in HTML with link tags that carry rel="preload".

1. **Reduce the Number of Network Requests**: Merging requests reduces the number of round trips and therefore network latency. For example, multiple small files can be combined into one larger file for transmission, or CSS Sprites can be used to combine multiple small images into one large image.

2. **Reduce the Number of DNS Queries**: DNS lookups also take time, so minimizing them lowers network latency. For example, keep the number of distinct domain names a page depends on small (every extra domain, such as a separate static-resource domain, costs its own lookup) and let DNS caching absorb repeat queries.

2. Increase Throughput

- Load Balancing: Distribute traffic to avoid overload on a single path.

- Hardware Load Balancing: Use specialized hardware devices (such as load balancers) to achieve load balancing. These devices can be installed at the network entrance and distribute traffic to different servers or network nodes based on specific algorithms. Features: Hardware load balancing typically provides high performance and scalability.

- Software Load Balancing: Implement load balancing at the software level, distributing traffic to multiple servers or network nodes. This method can be configured in the operating system or application. Software load balancing can distribute traffic based on various algorithms (such as round robin, random, weighted round robin, etc.).

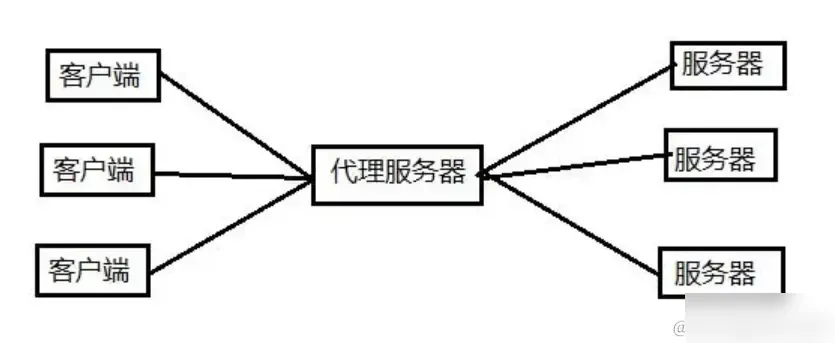
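The round robin and weighted round robin algorithms mentioned above can be sketched in a few lines. This is a simplified illustration, not how any particular load balancer implements them: the weighted version simply repeats each server according to its weight, and the server names are placeholders.

```python
import itertools
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in order, wrapping around forever."""
    return itertools.cycle(servers)


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield each server `weight` times per cycle (naive expansion)."""
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)


rr = round_robin(["server-a", "server-b", "server-c"])
print([next(rr) for _ in range(5)])

wrr = weighted_round_robin({"server-a": 2, "server-b": 1})
print([next(wrr) for _ in range(6)])
```

The naive expansion sends consecutive requests to the heavier server; production balancers usually interleave the picks more smoothly, but the long-run proportions are the same.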

- Reverse Proxy: Use a reverse proxy server to receive client requests and forward them to backend servers. A reverse proxy server can cache static content, reduce requests to backend servers, and improve system throughput.

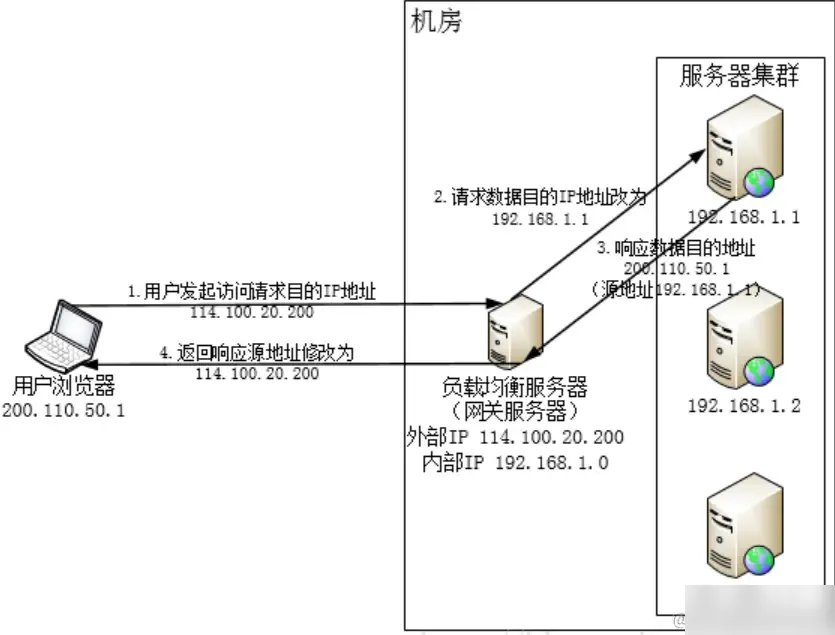

- IP Load Balancing: Implement load balancing by configuring network switches or routers. Also known as network-layer load balancing, its core principle is to rewrite the destination IP address of packets in a kernel driver so that traffic is spread across backend servers.

- Network Upgrade: Employ more efficient network devices and technologies, such as upgrading to faster routers and switches.

1. **Upgrade Network Devices**: The simplest and most straightforward way is to replace them with higher-performance network devices to enhance network throughput and response speed.

2. **Increase Bandwidth**: Increasing network bandwidth can accommodate more network traffic and improve system throughput.

3. **Network Load Balancing**: Implement load balancing at the network layer to distribute network traffic across multiple devices or servers, achieving balanced loads and increased system throughput.

4. **Zero-Copy Technology (Important)**: Adopting zero-copy during network transmission avoids unnecessary data copying and reduces system resource consumption, which improves network throughput. It is also a common interview topic, so a basic understanding is helpful. **Zero-copy** means that the CPU performs no data copies through user space during I/O; it does not mean that no copies happen at all. By eliminating unnecessary CPU copies between kernel buffers and user-process buffers, and by reducing the number of context switches between user mode and kernel mode, it lowers CPU overhead, frees the CPU for other work, and reduces memory usage, which improves transmission efficiency and application performance.

- Traffic Management: These technologies identify, classify, optimize, and control network traffic to improve network performance and user experience.

- Traffic Identification and Classification: By identifying and classifying network traffic, the source and type of traffic (such as data, voice, video, etc.) can be understood for classification processing and optimization.

- Traffic Control: Using traffic control technologies like QoS (Quality of Service) and queuing management, important traffic can be prioritized, avoiding network congestion and delays, improving system throughput.

- Traffic Optimization: By optimizing the transmission and processing methods of network traffic, network performance and throughput can be enhanced. For example, using compression technology reduces data transmission volume, or using caching technology reduces repetitive data transmission.

- Traffic Control Applications: For specific applications, control traffic to ensure the preferential transmission of critical business traffic (such as VoIP, video conferencing, etc.) and avoid interference or impact on other traffic.
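Returning to the zero-copy technique from the throughput list above, here is a minimal sketch using Python's `socket.sendfile()`. That method hands the file to the operating system's `sendfile` call where one is available, so the file contents do not pass through a user-space buffer, and falls back to ordinary `send()` calls otherwise. The helper names and the local socket pair in the demo are assumptions made so the example can run on its own.

```python
import os
import socket
import tempfile


def send_file_zero_copy(sock: socket.socket, path: str) -> int:
    """Send a whole file over a connected stream socket; return bytes sent."""
    with open(path, "rb") as f:   # sendfile() requires a binary-mode file
        return sock.sendfile(f)


def demo(payload: bytes = b"x" * 1024):
    """Round-trip a small temporary file through a local socket pair."""
    sender, receiver = socket.socketpair()
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(payload)
        path = tmp.name
    try:
        sent = send_file_zero_copy(sender, path)
        sender.close()            # signal end of stream to the receiver
        chunks = []
        while True:
            chunk = receiver.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
        return sent, b"".join(chunks)
    finally:
        receiver.close()
        os.remove(path)
```

Compared with a loop of `read()` and `send()`, the application never touches the file contents, which is where the savings in CPU copies and context switches come from.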

3. Enhance Bandwidth Utilization

- Data Compression: Reduce transmission volume through data compression. Common data compression algorithms include Gzip, Deflate, etc. During network transmission, servers can compress data while clients need to decompress it to realize data transmission and parsing. It’s also essential to consider performance and resource consumption in the compression and decompression processes to avoid negative impacts on system performance.

- Gzip: Gzip is a lossless compression format based on Deflate, which combines LZ77 and Huffman coding. The data is first compressed with a variant of the LZ77 algorithm, and the result is then compressed with Huffman coding (static or dynamic, depending on the data).

- Caching Technologies: Store frequently used data at the network edge, reducing repeated transmissions. Caching technology can store frequently accessed data or resources closer to the user, reducing processing time for each request and network traffic, and improving bandwidth utilization.

1. **Browser Caching**: Browsers can cache frequently accessed web content or resources locally to reduce request processing time and network traffic. HTTP header fields such as **Cache-Control** and **ETag** control browser caching behavior.

2. **Reverse Proxy Caching**: A reverse proxy server sits between users and the target servers and looks like the target server from the user's perspective. Users can obtain resources from the proxy's cache without hitting the target servers directly.

- Database Query Caching: Database query caching stores the results of frequently executed queries in memory, reducing how often the database is accessed. Common solutions include Redis, Memcached, and Ehcache. They are not covered in detail here; interested readers can refer to the Redis column in my profile.
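The idea behind query caching can be sketched with a small in-process cache that expires entries after a fixed time. This is only a stand-in to show the pattern: it is not the Redis, Memcached, or Ehcache API, and the class name, the `loader` callback, and the TTL value are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class QueryCache:
    """Cache query results in memory for a limited time (TTL)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # query -> (expires_at, result)

    def get_or_load(self, query: str, loader: Callable[[str], Any]) -> Any:
        """Return the cached result, or run `loader` and cache what it returns."""
        now = self._clock()
        entry = self._entries.get(query)
        if entry is not None and entry[0] > now:
            return entry[1]                      # cache hit: skip the database
        result = loader(query)                   # cache miss: query the database
        self._entries[query] = (now + self._ttl, result)
        return result


calls = []

def fake_database(query: str) -> str:
    """Placeholder for a real database call; records each invocation."""
    calls.append(query)
    return f"rows for {query}"

cache = QueryCache(ttl_seconds=60)
cache.get_or_load("SELECT * FROM users", fake_database)
cache.get_or_load("SELECT * FROM users", fake_database)
print(len(calls))  # the second call is served from the cache
```

The expiry time is the main design choice: a longer TTL saves more database work but serves stale data for longer after the underlying rows change.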