**I. Background**

The maxim “Know your enemy and know yourself, and you can fight a hundred battles without disaster” applies equally to cyberspace, where the contest between attackers and defenders is essentially a contest of information-gathering capability. Whoever obtains more comprehensive information can devise more effective attack or defense strategies and gain the advantage. For defenders, “knowing the enemy” means determining who attacked, where the attack landed, and which path it took; this is what attack attribution means. With attack attribution technology, defenders can locate the attack source or the intermediaries it passed through, reconstruct the corresponding attack paths, and then formulate targeted protective measures and countermeasures to achieve proactive defense. Attack attribution is therefore a crucial step in moving cyberspace defense systems from passive to active defense.

However, network-side alerts and endpoint-side logs are isolated records, whereas an attacker’s actions are causally connected. Attack attribution correlates attack-related information according to this causality to construct a provenance graph, from which attackers and attack paths can be identified. Ideally, causal correlation analysis would rely on a knowledge base to construct a set of rules, each defining the preconditions of an attack action and the results or impact of executing it. Current attack attribution research has not reached this level; the causal relationships used in mainstream work are dependencies among alerts or logs.
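The rule-based correlation described above can be illustrated with a minimal Python sketch. The `Rule` class, the rule names, and the alert names are all hypothetical and do not come from any of the cited systems; the sketch only shows the idea of linking two alerts when the effects of the earlier action satisfy a precondition of the later one.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    """Hypothetical causal rule: what an action requires and what it yields."""
    action: str
    preconditions: frozenset
    effects: frozenset

def correlate(alerts, rules):
    """Link a time-ordered list of alerts.

    An edge (earlier, later) is added when at least one effect of the
    earlier action is a precondition of the later action.
    """
    by_action = {r.action: r for r in rules}
    edges = []
    for i, earlier in enumerate(alerts):
        for later in alerts[i + 1:]:
            a, b = by_action.get(earlier), by_action.get(later)
            if a and b and a.effects & b.preconditions:
                edges.append((earlier, later))
    return edges

# Illustrative rule base (assumed, not taken from a real knowledge base).
RULES = [
    Rule("port_scan", frozenset(), frozenset({"service_known"})),
    Rule("exploit_service", frozenset({"service_known"}), frozenset({"shell_access"})),
    Rule("exfiltrate_file", frozenset({"shell_access"}), frozenset({"data_leaked"})),
]
ALERTS = ["port_scan", "exploit_service", "exfiltrate_file"]
EDGES = correlate(ALERTS, RULES)
```

With these assumed rules, the scan is linked to the exploit and the exploit to the exfiltration, but the scan is not linked directly to the exfiltration because none of its effects is a precondition of that action.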

The provenance graph is the foundation of attack attribution; all attribution techniques are built on analyzing and processing it. We introduce the construction of attack provenance graphs from three angles: (1) constructing attack provenance graphs on the endpoint side; (2) correlating system logs and application logs to build a provenance graph; (3) constructing a correlated provenance graph across the network and endpoint sides.

**II. Host-Side Provenance Graph Construction**

BackTracker[1] is a classic provenance graph construction method that laid the groundwork for host-side attack attribution; most subsequent work builds on it. BackTracker focuses on causal dependencies among processes, files, and filenames on a host, defining dependencies between processes, between processes and files, and between processes and filenames.

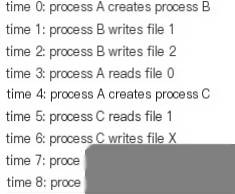

A direct dependency between processes means that one process directly affects another by creating it, sharing memory with it, or communicating with it. An indirect dependency arises when two processes operate on the same file or object. A dependency between a process and a file is a direct operation such as a read or a write. A dependency between a process and a filename is established by any system call that takes the filename as an argument. The paper illustrates host-side provenance graph construction with an example: Figure 1(a) shows the relevant system logs, and Figure 1(b) shows the provenance graph built from them.

(a) System Logs

(b) Provenance Graph

Figure 1 BackTracker Provenance Graph Construction
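To make the construction concrete, here is a minimal Python sketch of backward dependency tracking over a toy event log. The `Event` tuple, the object names, and the log itself are hypothetical; the time-threshold rule is a simplified reading of BackTracker's approach, not its actual implementation.

```python
from collections import namedtuple

# One log record: information flows from `source` to `sink` at `time`.
Event = namedtuple("Event", "time source sink op")

def backtrack(events, detection_point):
    """Walk the log backwards from a detection point.

    An event is kept if its sink is already in the graph and the event
    happened no later than the last time that sink could still have
    influenced the detection point.
    """
    thresholds = {detection_point: float("inf")}
    edges = []
    for ev in sorted(events, key=lambda e: e.time, reverse=True):
        limit = thresholds.get(ev.sink)
        if limit is not None and ev.time <= limit:
            edges.append((ev.source, ev.sink, ev.op))
            previous = thresholds.get(ev.source, float("-inf"))
            thresholds[ev.source] = max(previous, ev.time)
    return edges

# Toy log (assumed for illustration only).
LOG = [
    Event(1, "proc:sshd", "proc:bash", "fork"),
    Event(2, "proc:bash", "proc:wget", "fork"),
    Event(3, "proc:wget", "file:/tmp/x", "write"),
    Event(4, "file:/tmp/x", "proc:bash", "read"),
    Event(5, "proc:bash", "file:/etc/passwd", "write"),
    Event(6, "proc:cron", "file:/var/log/cron", "write"),
    Event(7, "file:/etc/passwd", "proc:cat", "read"),
]
GRAPH = backtrack(LOG, "file:/etc/passwd")
NODES = {n for src, dst, _ in GRAPH for n in (src, dst)}
```

Starting from the modified `/etc/passwd`, the sketch pulls in the shell, the downloaded file, the downloader, and the login daemon, while the unrelated `cron` activity and the later read by `cat` stay out of the graph.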

Constructing an attack provenance graph in this way links processes, files, and filenames through predefined rules, so the resulting dependencies essentially lack causal semantics. MCI[2] proposed an attack attribution method based on a causal inference model, using LDX[3] to mine precise causal relationships among system calls.

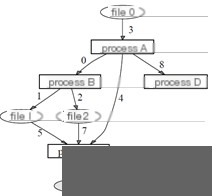

Figure 2 MCI Causal Inference Framework

The MCI method centers on causal inference over system audit logs. It is easy to deploy: it requires no kernel modifications and no additional work while the system is running. When a security event is detected, users only need to hand the system call logs and program binaries to security operations personnel.

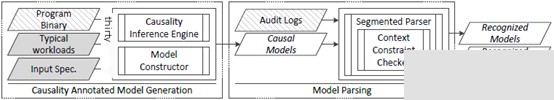

Offline forensic investigation is typically performed by security operations experts: MCI constructs causal models and parses the relevant system logs to recover precise causal relationships at the system call level. Figure 2 shows the MCI causal inference framework, which has two phases: causal model construction and model parsing. MCI uses the LDX[3] causal model to determine causal relationships among system call logs. LDX is a causal inference model based on dual execution: it infers causal relationships among system calls by altering inputs and observing changes in output state.

Figure 3 LDX Causal Inference
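The idea of inferring causality by perturbing inputs and comparing outputs can be shown with a small Python toy. This is only a conceptual sketch: `toy_program`, the syscall-like labels, and the `perturb` function are assumptions made for illustration, and real dual execution operates on program binaries rather than on a Python function.

```python
def infer_causality(program, inputs, perturb):
    """Run a baseline execution, then one perturbed execution per input.

    An output is reported as causally dependent on an input if changing
    only that input changes the output.
    """
    baseline = program(dict(inputs))
    deps = {}
    for src in inputs:
        mutated = dict(inputs)
        mutated[src] = perturb(mutated[src])
        shadow = program(mutated)
        deps[src] = sorted(k for k in baseline if baseline[k] != shadow.get(k))
    return deps

def toy_program(inp):
    """Stand-in for a traced program: two input events, two output events."""
    return {
        "write:/tmp/out": inp["read:/etc/conf"].upper(),
        "send:10.0.0.2": inp["recv:10.0.0.1"][::-1],
    }

INPUTS = {"read:/etc/conf": "abc", "recv:10.0.0.1": "hello"}
DEPS = infer_causality(toy_program, INPUTS, lambda s: s + "x")
```

Here the file write depends only on the configuration read and the network send depends only on the network receive, which is the kind of precise input-to-output relationship that log-level dependency rules cannot distinguish.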

**III. System Logs and Application Logs Integrated Provenance Graph**

Earlier attack attribution work mainly mines causal dependencies in system-level behavior and does not consider application-layer semantics, even though application logs provide substantial information for attack investigation. Literature [4,5,6] argues that unifying all forensically relevant events on a system into a single comprehensive log significantly improves attack investigation, and proposes OmegaLog, an end-to-end provenance tracking framework. OmegaLog integrates application logs and system logs to build a unified provenance graph (UPG). It addresses dependency explosion by recognizing event-handling loops in application event sequences, and addresses data isolation by integrating application logs.

However, correlating provenance across applications still faces challenges. First, the ecosystem of application-level logging frameworks is heterogeneous. Second, event logs from multiple application threads are multiplexed together, making it difficult to distinguish concurrent units of work. Finally, the event logs produced by each unit of work are not fixed, because which events occur and in what order depends on dynamic control flow. A deep understanding of the application’s logging behavior is therefore needed to determine execution unit boundaries.

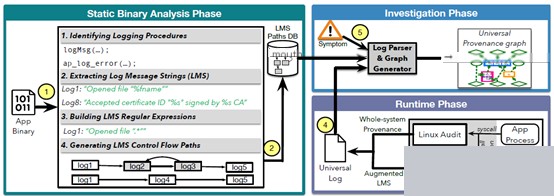

To address these issues, OmegaLog performs static analysis on application binaries to automatically identify the procedures that write log messages, using symbolic execution and emulation to extract a descriptive log message string (LMS) for each call site. OmegaLog then performs temporal analysis on the binary to identify the ordering relationships among LMSes, generating the set of all valid LMS control flow paths that may appear during execution. At runtime, OmegaLog uses a kernel module to intercept the write system call and capture every log event emitted by the application, associating each event with the correct PID/TID and timestamp so that concurrent log activity can be untangled. Finally, the processed application logs are merged with system-level logs into a global provenance log. During an attack investigation, OmegaLog parses the application event stream in this global log using the LMS control flow paths, partitions it into execution units, and incorporates them into the provenance graph according to their causal correlation.
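The partitioning step can be sketched in Python as follows. The LMS patterns, the `VALID_NEXT` transition table, and the log events are all hypothetical stand-ins for what static analysis and the kernel module would produce; the sketch only shows how PID/TID tagging plus valid LMS transitions let an interleaved log be split into execution units.

```python
import re
from collections import defaultdict

# Hypothetical log message strings (LMS), written here as regexes.
LMS = {
    "accept": re.compile(r"^Accepted connection from \S+$"),
    "serve": re.compile(r"^Serving file \S+$"),
    "close": re.compile(r"^Connection closed$"),
}
# Hypothetical valid LMS control flow: which LMS may follow which.
VALID_NEXT = {
    "accept": {"serve", "close"},
    "serve": {"serve", "close"},
    "close": set(),
}

def match_lms(message):
    """Return the name of the first LMS matching the message, or None."""
    for name, pattern in LMS.items():
        if pattern.match(message):
            return name
    return None

def partition(events):
    """Split (timestamp, pid, tid, message) events into execution units.

    Events are grouped per (pid, tid); a new unit starts whenever the
    next LMS is not a valid successor of the previous one.
    """
    units = defaultdict(list)
    last = {}
    for ts, pid, tid, msg in sorted(events):
        lms = match_lms(msg)
        if lms is None:
            continue  # not an application log line we know about
        key = (pid, tid)
        if key not in last or lms not in VALID_NEXT[last[key]]:
            units[key].append([])
        units[key][-1].append((ts, lms))
        last[key] = lms
    return dict(units)

EVENTS = [
    (1, 100, 1, "Accepted connection from 10.0.0.5"),
    (2, 100, 2, "Accepted connection from 10.0.0.9"),
    (3, 100, 1, "Serving file /index.html"),
    (4, 100, 2, "Serving file /secret.txt"),
    (5, 100, 1, "Connection closed"),
    (6, 100, 1, "Accepted connection from 10.0.0.7"),
    (7, 100, 2, "Connection closed"),
    (8, 100, 1, "Connection closed"),
]
UNITS = partition(EVENTS)
```

In this toy log, thread 1 handles two requests and thread 2 handles one, even though their messages are interleaved in time. Each resulting unit could then be attached to the system-level events of the same PID/TID and time window.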

Figure 4 shows OmegaLog’s system framework, which requires both system-level logging and application event logging to be enabled.

Figure 4 OmegaLog Structure

**IV. Network and Endpoint Integrated Provenance Graph**

The attack attribution methods described so far operate on a single host, yet a complete attack often spans multiple hosts. Correlating network-side and endpoint-side logs makes it possible to trace the entire attack process. Correlated provenance across network and endpoint is a difficult problem in attack attribution, and related research is scarce. Literature [7] extends BackTracker by tracking the sending and receiving of network packets through correlated log records. For example, if process 1 on host A sends a data packet to process 2 on host B, then processes 1 and 2 have a causal dependency. Many current works are based on packet marking, using source and destination addresses and sequence numbers to mark data. This approach is simple to implement but may produce a large number of erroneous correlations, which undermines effective attack tracing and incurs significant computational overhead. As a result, it is not widely used in engineering practice.
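A minimal Python sketch of this kind of cross-host linking is shown below. The record layout (`host`, `process`, `src`, `dst`, `seq`) is an assumption made for illustration and is not the log format of any cited system.

```python
def link_hosts(send_log, recv_log):
    """Add a cross-host edge for every send that matches a receive.

    Records are matched on source address, destination address, and
    sequence number, where addresses are (ip, port) tuples.
    """
    index = {}
    for s in send_log:
        index.setdefault((s["src"], s["dst"], s["seq"]), []).append(s)
    edges = []
    for r in recv_log:
        for s in index.get((r["src"], r["dst"], r["seq"]), []):
            edges.append((f'{s["host"]}:{s["process"]}', f'{r["host"]}:{r["process"]}'))
    return edges

# Hypothetical log records from two hosts.
SEND_LOG = [
    {"host": "A", "process": "process1", "src": ("10.0.0.1", 50000),
     "dst": ("10.0.0.2", 22), "seq": 1001},
]
RECV_LOG = [
    {"host": "B", "process": "process2", "src": ("10.0.0.1", 50000),
     "dst": ("10.0.0.2", 22), "seq": 1001},
    {"host": "B", "process": "process3", "src": ("10.0.0.9", 40000),
     "dst": ("10.0.0.2", 80), "seq": 7},
]
CROSS_EDGES = link_hosts(SEND_LOG, RECV_LOG)
```

Matching on addresses and sequence numbers alone is exactly what makes this approach prone to false links when keys collide, for instance behind NAT or when ports are reused.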

Literature [8] proposed a new open-source platform, zeek-osquery, which performs fine-grained causal mining over network-side and endpoint-side data for real-time intrusion detection. Its key technique is correlating operating system logs with network-side logs in real time. Zeek-osquery adapts to different detection scenarios because osquery hosts are managed directly from Zeek scripts, so the data can be processed within Zeek. For example, it can detect the execution of files downloaded from the internet, detect lateral movement by attackers through SSH hops[18], or provide TLS keys obtained from the host kernel to Zeek for decrypting and inspecting network traffic.

To improve intrusion detection accuracy, endpoint data must be correlated with network-side data: integrating host context into network monitoring increases the visibility of network information.

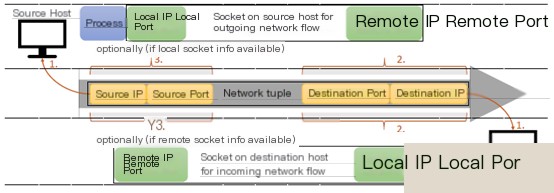

Zeek-osquery uses the term “flow” to denote communication between two hosts, represented as a 5-tuple of IP addresses, ports, and protocol. On the host, a flow is abstracted as a socket, which is identified by a unique ID (the combination of file descriptor and PID) together with its 5-tuple properties. Process and socket information can be obtained by monitoring kernel system calls (kernel auditing). An alternative is to examine the current kernel state, although the data retrieved by the two mechanisms have different properties. Kernel auditing can push new processes and sockets asynchronously as they appear, while the kernel state mechanism has to poll the kernel frequently and compare the result with the previous state to detect changes; this costs additional overhead but yields more reliable data than the audit mechanism.

Each mechanism has a blind spot. The audit mechanism can only record the actual arguments of the system calls it monitors. In a connect call, for instance, only the target IP address and port appear, and the local address and port cannot be determined even by combining multiple socket-related system calls. Relying solely on the state mechanism can introduce errors during data association, because short-lived processes or sockets whose whole lifetime falls between two state polls are never captured. The following describes how the audit and state mechanisms are combined to achieve complete and reliable association of network and endpoint data.
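One way to picture combining the two mechanisms is the Python sketch below. The record fields and the `seen_by` label are hypothetical and are not zeek-osquery's or osquery's actual schema; the sketch assumes audit events carry only the remote address while state snapshots carry both addresses.

```python
def merge_audit_and_state(audit_events, state_snapshot):
    """Merge audit events with a polled socket table.

    Sockets are keyed by (pid, fd). Audit events supply completeness
    (short-lived sockets); the snapshot supplies the local address.
    """
    state = {(row["pid"], row["fd"]): row for row in state_snapshot}
    sockets = {}
    for ev in audit_events:
        key = (ev["pid"], ev["fd"])
        row = state.get(key)
        # Trust the snapshot row only if it describes the same connection.
        local = row["local"] if row and row["remote"] == ev["remote"] else None
        sockets[key] = {
            "remote": ev["remote"],
            "local": local,
            "seen_by": "audit+state" if local else "audit",
        }
    for key, row in state.items():
        # Sockets the audit stream missed are still taken from the state.
        sockets.setdefault(
            key, {"remote": row["remote"], "local": row["local"], "seen_by": "state"}
        )
    return sockets

AUDIT = [
    {"pid": 10, "fd": 3, "remote": ("10.0.0.2", 22)},
    {"pid": 11, "fd": 4, "remote": ("10.0.0.3", 80)},  # closed before the next poll
]
STATE = [
    {"pid": 10, "fd": 3, "local": ("10.0.0.1", 50000), "remote": ("10.0.0.2", 22)},
    {"pid": 12, "fd": 5, "local": ("10.0.0.1", 50002), "remote": ("10.0.0.4", 443)},
]
SOCKETS = merge_audit_and_state(AUDIT, STATE)
```

The short-lived socket of PID 11 survives only because the audit stream recorded it, and its local address stays unknown, which is the source of the ambiguity that the association procedure has to handle.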

Figure 5 Network and Endpoint Data Correlation

The initiator and receiver of a network flow can be identified through their sockets. Identifying the socket is assumed to be sufficient, because the socket is the piece of data missing from host-side associations between processes and files. The socket of a network flow must match the flow’s 5-tuple. For the initiator, this socket carries an outgoing flow; for the receiver, an incoming flow. Figure 5 shows the model: the initiator and its outgoing socket, the receiver and its incoming socket, and the complete 5-tuple of the network flow.

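1 Use the IP addresses in the network flow to identify the initiator and receiver hosts, maintaining a list of hosts and their network IPs;

2 On the initiator and the receiver, identify the sockets whose remote address (on the initiator) or local address (on the receiver) equals the flow’s destination address;

3 Additionally check whether the flow’s source address equals the socket’s local address (on the initiator) or remote address (on the receiver).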

If step 3 is satisfied, the association between host and network data is unambiguous; otherwise ambiguity may exist. If two hosts connect to the same port on the same target host through connect syscalls, step 1 can still make the association unambiguous. However, if a single initiator opens multiple flows to the same port on the same target, the association may be unclear, and all possible correlations have to be enumerated.
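The three steps and the ambiguity case can be sketched in Python for the initiator side only. The function name, the record fields, and the sample data are hypothetical; a socket whose `local` field is `None` stands for one that was captured by auditing alone.

```python
def associate_initiator(flow, ip_to_host, sockets_by_host):
    """Return (host, matching sockets, ambiguous flag) for a flow's initiator."""
    # Step 1: map the flow's source IP to a known host.
    host = ip_to_host.get(flow["src"][0])
    # Step 2: keep sockets whose remote address equals the flow destination.
    candidates = [
        s for s in sockets_by_host.get(host, []) if s["remote"] == flow["dst"]
    ]
    # Step 3: prefer sockets whose local address equals the flow source.
    exact = [s for s in candidates if s["local"] == flow["src"]]
    if exact:
        return host, exact, False
    # Without step 3, every remaining candidate has to be enumerated.
    return host, candidates, len(candidates) > 1

IP_TO_HOST = {"10.0.0.1": "hostA"}
SOCKETS_BY_HOST = {
    "hostA": [
        {"pid": 10, "fd": 3, "local": None, "remote": ("10.0.0.2", 22)},
        {"pid": 11, "fd": 4, "local": None, "remote": ("10.0.0.2", 22)},
        {"pid": 12, "fd": 5, "local": ("10.0.0.1", 50002), "remote": ("10.0.0.4", 443)},
    ]
}
FLOW_SSH = {"src": ("10.0.0.1", 50000), "dst": ("10.0.0.2", 22)}
FLOW_TLS = {"src": ("10.0.0.1", 50002), "dst": ("10.0.0.4", 443)}
SSH_MATCH = associate_initiator(FLOW_SSH, IP_TO_HOST, SOCKETS_BY_HOST)
TLS_MATCH = associate_initiator(FLOW_TLS, IP_TO_HOST, SOCKETS_BY_HOST)
```

The TLS flow resolves to a single socket because its local address is known, whereas the SSH flow matches two processes that connected to the same target port and is reported as ambiguous.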

Nevertheless, this kind of cross-host attack attribution remains prone to many erroneous associations because of socket uncertainty.

Literature [9] combines several techniques into RTAG, an effective cross-host tracking and attribution method that alleviates the current problems of network and endpoint data correlation to some extent. RTAG is an efficient data flow tagging and tracking mechanism that enables cross-host attack investigation. It relies on three innovative techniques:

1 Record and replay, which decouples the dependencies among different data flow tags from the analysis and allows delayed synchronization among independent, parallel DIFT instances on different hosts;

2 Optimal tag mapping based on system-call-level attribution information, taking memory consumption and allocation persistence into account;

3 Tag information embedded in network packets, enabling cross-host data flow tracing with minimal network overhead.

To overcome the challenges of cross-host attack attribution, RTAG separates tag dependencies (i.e., inter-host information flows) from the analysis through tag overlay and tag exchange techniques, so that DIFT no longer depends on the ordering imposed by communication.

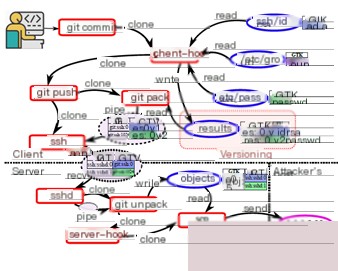

(a) Provenance Graph

(b) Data Flow Overlay

Figure 6 RTAG Tagging System

To track data flows between files and network flows between hosts, RTAG builds a tag model as an overlay graph on top of the existing provenance graph. In the overlay graph, RTAG achieves byte-granularity tracking by assigning globally unique tags to the files of interest. Following the tags reveals both the origin of a file and its downstream impact, and this capability is what enables cross-host attack investigation. The provenance graph remains essential to attack attribution: it has to track data flows from process to file, process to process, and file to process, with edges representing the events (e.g., system calls) between nodes.

In the overlay tag graph, each byte of a file is assigned a unique tag key that identifies it. Each tag key is linked to a vector of its origin data. Retrieving key values recursively reveals all upstream sources of a byte, extending to remote hosts if necessary. Conversely, recursive tag retrieval in the other direction lets analysts discover the downstream data flows as a tree.
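The retrieval described above can be sketched in Python with a plain dictionary as the tag map. The key layout `(host, path, offset)` and the sample entries are assumptions made for illustration and do not reflect RTAG's actual data structures; the traversal uses an explicit worklist, which is equivalent to the recursive lookup.

```python
def upstream(tag_map, key):
    """Collect every origin reachable from `key` by following origin vectors."""
    found, stack = set(), [key]
    while stack:
        for origin in tag_map.get(stack.pop(), ()):
            if origin not in found:
                found.add(origin)
                stack.append(origin)
    return found

def downstream(tag_map, key):
    """Collect every byte that was derived, directly or not, from `key`."""
    reverse = {}
    for derived, origins in tag_map.items():
        for origin in origins:
            reverse.setdefault(origin, []).append(derived)
    return upstream(reverse, key)

# Hypothetical tag map: tag key -> origin vector (list of tag keys).
SECRET = ("hostA", "/home/u/secret", 4)
REPORT = ("hostB", "/tmp/report", 0)
UPLOAD = ("hostC", "/srv/upload", 0)
TAGS = {
    REPORT: [SECRET],   # byte copied from hostA to hostB
    UPLOAD: [REPORT],   # then forwarded from hostB to hostC
}
SOURCES = upstream(TAGS, UPLOAD)
IMPACT = downstream(TAGS, SECRET)
```

Starting from the uploaded byte on `hostC`, the lookup walks back through `hostB` to the original file on `hostA`; starting from the original byte, the reverse lookup lists every place it ended up.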

**V. Conclusion**

Attack techniques are evolving rapidly, shifting from single-step attacks to advanced, covert, long-dormant campaigns, and defensive measures often lag behind. Attack attribution offers new ideas for achieving proactive defense.

Although 16 years have passed since BackTracker was introduced, attack attribution is still an emerging field. Provenance graph construction relies on causal relationships in network and endpoint data, and research on fine-grained causal relationship mining is urgently needed to build accurate provenance graphs. Relying solely on graph analysis algorithms to identify complex attacks has its limits. Introducing external knowledge is an effective approach, but the external knowledge used today merely abstracts known attack subgraphs based on ATT&CK tactics and does not fully integrate the relevant contextual knowledge. Green Alliance has been researching security knowledge graphs, whose powerful association capabilities and rich semantics make them a key next step for improving attack attribution.

References

1 King S T, Chen P M. Backtracking Intrusions[J]. Operating Systems Review, 2003, 37(5): 223-236.

2 Kwon Y, Wang F, Wang W, et al. MCI: Modeling-based Causality Inference in Audit Logging for Attack Investigation[C]// Network and Distributed System Security Symposium. 2018.

3 Kwon Y, Kim D, Sumner W N, et al. LDX: Causality Inference by Lightweight Dual Execution[J]. ACM SIGARCH Computer Architecture News, 2016, 51(2):503-515.

4 Hassan W U, Noureddine M A, Datta P, et al. OmegaLog: High-Fidelity Attack Investigation via Transparent Multi-layer Log Analysis[C]// Network and Distributed System Security Symposium. 2020.

5 Pasquier T., Han X., Moyer T., Bates A., Eyers D., Hermant O., Bacon J. and Seltzer M. Runtime Analysis of Whole-System Provenance. Conference on Computer and Communications Security (CCS’18) (2018), ACM.

6 Pasquier T., Han X., Goldstein M., Moyer T., Eyers D., Seltzer M., and Bacon J. Practical Whole-System Provenance Capture. Symposium on Cloud Computing (SoCC’17) (2017), ACM.

7 King S T, Mao Z M, Lucchetti D G, et al. Enriching intrusion alerts through multi-host causality[C]// Network & Distributed System Security Symposium. DBLP, 2005.

8 Steffen Haas, Robin Sommer, Mathias Fischer, zeek-osquery: Host-Network Correlation for Advanced Monitoring and Intrusion Detection. IFIP SEC ’20, 2020

9 Yang Ji, Sangho Lee, Mattia Fazzini, Joey Allen, Evan Downing, Taesoo Kim, Alessandro Orso, and Wenke Lee. Enabling Refinable Cross-Host Attack Investigation with Efficient Data Flow Tagging and Tracking. In Proceedings of The 27th USENIX Security Symposium. Baltimore, MD, August 2018

About Tachyon Lab

Tachyon Lab focuses on research in security data, AI offense and defense, aiming for breakthroughs in the field of “data intelligence.”

Content Editor: Tachyon Lab Xue Jianxin Responsible Editor: Wang Xingkai
