Recent Linux kernels ship with eBPF, a powerful framework for kernel monitoring. It grew out of what was historically known as BPF.

What is BPF?

BPF (Berkeley Packet Filter) is a highly efficient network packet filtering mechanism designed to avoid needlessly copying packets to user-space applications. It filters network packets directly in kernel space. The best-known application of BPF is the filter expressions used by the tcpdump tool: tcpdump compiles the expression into BPF bytecode, the kernel loads this bytecode and applies it to the raw packet stream, and only the packets that match the filter are passed on to user space.

What is eBPF?

eBPF (extended BPF) is an extended and enhanced version of BPF, geared toward Linux observability. With eBPF, you can run custom bytecode in a sandbox that the kernel provides and attach it to almost any function exported in the kernel symbol table, without putting kernel integrity at risk.

eBPF also puts a strong emphasis on safety. The kernel's verifier refuses to load bytecode that dereferences invalid pointers or exceeds the maximum stack size. Loops are generally not allowed unless they have a constant upper bound known at compile time. The bytecode may call only a small set of designated eBPF helper functions. As a result, eBPF programs are guaranteed to terminate in a timely manner and cannot exhaust system resources, unlike kernel modules, which can destabilize or crash the kernel.

On the other hand, eBPF is quite restrictive compared to the freedom kernel modules offer. Overall, though, the trade-off favors eBPF over module code, mainly because a verified eBPF program cannot harm the kernel. And safety isn't its only advantage.

Why use eBPF for Linux monitoring?

As part of the core Linux kernel, eBPF does not rely on any third-party modules or extensions. It has a stable ABI (Application Binary Interface), so programs compiled on older kernels can run on newer ones. The performance overhead introduced by eBPF is usually negligible, which makes it well suited to application monitoring and to tracing heavily loaded systems. Windows users don't have eBPF, but they can use Event Tracing for Windows (ETW).

eBPF is extremely flexible and can trace almost all major kernel subsystems, including CPU scheduling, memory management, networking, system calls, and block device requests, and the list keeps growing.

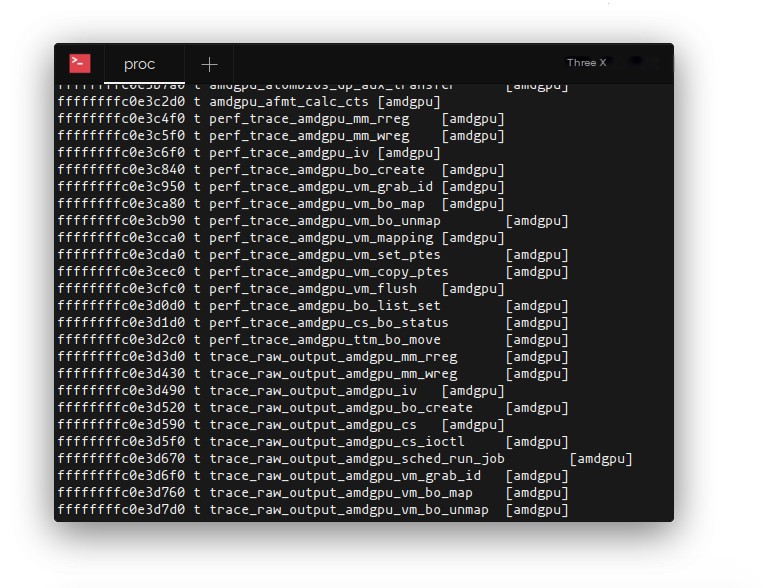

You can run the following command in a terminal to see a list of all the kernel symbols that eBPF can trace:

```shell
$ cat /proc/kallsyms
```

Symbols that can be tracked

The above command produces a huge amount of output. If we are only interested in instrumenting the system call interface, a bit of grep magic filters out the unwanted symbol names:

```shell
$ cat /proc/kallsyms | grep -w -E "sys.*"
ffffffffb502dd20 T sys_arch_prctl
ffffffffb502e660 T sys_rt_sigreturn
ffffffffb5031100 T sys_ioperm
ffffffffb50313b0 T sys_iopl
ffffffffb50329b0 T sys_modify_ldt
ffffffffb5033850 T sys_mmap
ffffffffb503d6e0 T sys_set_thread_area
ffffffffb503d7a0 T sys_get_thread_area
ffffffffb5080670 T sys_set_tid_address
ffffffffb5080b70 T sys_fork
ffffffffb5080ba0 T sys_vfork
ffffffffb5080bd0 T sys_clone
```

Different types of probes respond to events from different kernel subsystems. Kernel functions reside at specific memory addresses (the first column of the output above), and eBPF programs can be attached at these locations; incoming network packets and calls made by user-space code can be traced with eBPF programs as well. By attaching eBPF programs to kprobes or XDP, you can trace incoming network packets; by attaching them to uprobes, you can trace function calls in user-space programs.

At Sematext, the team is obsessed with eBPF and explores every possible way to use it for service monitoring and container visibility. They are also hiring in this area, so if you are interested, consider applying.

Let’s delve further into how eBPF programs are constructed and loaded into the kernel.

Analyzing Linux eBPF programs

Before diving deeper into the structure of eBPF programs, it's worth mentioning BCC (BPF Compiler Collection), a toolkit for compiling programs into eBPF bytecode. It provides Python and Lua bindings for loading that code into the kernel and interacting with the underlying eBPF facilities, and it includes many useful tools that show what can be achieved with eBPF.

In the past, BPF programs were written by manually assembling raw BPF instructions. Fortunately, clang (the C frontend of LLVM) can compile C code into eBPF bytecode, sparing us from writing BPF instructions by hand. LLVM is the main compiler backend for eBPF today; Rust, which is also built on LLVM, can generate eBPF bytecode as well.

Once the eBPF program has been compiled into an object file, we can inject it into the kernel. For this purpose, the kernel provides a dedicated bpf system call. Besides loading eBPF bytecode, this seemingly simple system call does a lot of other work: it also creates and manages maps in the kernel, an essential eBPF data structure (more on them later) and one of eBPF's more advanced features. You can learn more from the bpf man page (man 2 bpf).

When a user-space process submits eBPF bytecode through the bpf system call, the kernel first verifies it and then JIT (just-in-time) compiles it, translating the bytecode instructions into the native instruction set of the current CPU. The resulting code runs very fast. If the JIT compiler is unavailable for any reason, the kernel falls back to an interpreter, without the performance benefits described above.

Linux eBPF Example

Now, let's look at an example of a Linux eBPF program. The goal is to capture callers of the setns system call. setns lets a process join an existing namespace, identified by a file descriptor that refers to it. Namespaces are typically created when a child process is spawned with clone: a bitmask of CLONE_NEW* flags passed to clone detaches the child from its parent's namespaces. This mechanism is used to give a process isolated resources such as its own TCP/IP stack, mount points, or PID number space.

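```c
#include <linux/kconfig.h>
#include <linux/sched.h>
#include <linux/version.h>
#include <linux/bpf.h>

/* SEC places the following symbol in a named ELF section, which the
   ELF BPF loader later uses to find programs, maps and metadata. */
#ifndef SEC
#define SEC(NAME) __attribute__((section(NAME), used))
#endif

/* Attach this program as a kprobe on sys_setns; it runs on every call. */
SEC("kprobe/sys_setns")
int kprobe__sys_setns(struct pt_regs *ctx) {
    return 0;
}

/* Some helpers are only available to programs with a GPL-compatible license. */
char _license[] SEC("license") = "GPL";

/* gobpf's loader replaces 0xFFFFFFFE with the running kernel's version. */
__u32 _version SEC("version") = 0xFFFFFFFE;
```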

The above is a very simple eBPF program. It consists of several parts. The first part includes various kernel header files that define the data types we need. Next comes the declaration of the SEC macro, which places symbols into named sections of the object file so that the ELF BPF loader can interpret them later. The license and version sections are defined as well, because the loader reports an error if they are missing.

Next, let's look at the most interesting part of the eBPF program: the actual hook for the setns system call. The SEC("kprobe/sys_setns") annotation, together with the conventional kprobe__ function prefix, tells the loader to attach the program to the sys_setns symbol, so the program body executes every time the system call is made. Every eBPF program has a context. For a kernel probe, the context is the current state of the processor registers (the pt_regs structure), which hold the function arguments that libc prepared for the transition from user space to kernel space. To compile the program (llvm and clang must be installed), you can use the following command. The LINUX_HEADERS environment variable must point to the kernel headers; clang emits the program's LLVM intermediate representation, which llc then compiles into the final eBPF bytecode:

```shell
$ clang -D__KERNEL__ -D__ASM_SYSREG_H \
    -Wunused \
    -Wall \
    -Wno-compare-distinct-pointer-types \
    -Wno-pointer-sign \
    -O2 -S -emit-llvm ns.c \
    -I $LINUX_HEADERS/source/include \
    -I $LINUX_HEADERS/source/include/generated/uapi \
    -I $LINUX_HEADERS/source/arch/x86/include \
    -I $LINUX_HEADERS/build/include \
    -I $LINUX_HEADERS/build/arch/x86/include \
    -I $LINUX_HEADERS/build/include/uapi \
    -I $LINUX_HEADERS/build/include/generated/uapi \
    -I $LINUX_HEADERS/build/arch/x86/include/generated \
    -o - | llc -march=bpf -filetype=obj -o ns.o
```

You can use the readelf tool to view the section headers and the symbol table of the object file:

```shell
$ readelf -a -S ns.o
…
2: 0000000000000000 0 NOTYPE GLOBAL DEFAULT 4 _license
3: 0000000000000000 0 NOTYPE GLOBAL DEFAULT 5 _version
4: 0000000000000000 0 NOTYPE GLOBAL DEFAULT 3 kprobe__sys_setns
```

The above output confirms that the symbols were compiled into the object file. Now that we have a valid object file, we can load it into the kernel and see what happens.
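If readelf is not at hand, Go's standard debug/elf package can perform a similar check. The sketch below is an illustration, not part of the original tooling: it assumes the object file is named ns.o (or passed as the first argument), checks for the EM_BPF machine number (247), and treats sections prefixed with kprobe/ or kretprobe/ as probe programs, following the naming used in this article.

```go
package main

import (
	"debug/elf"
	"fmt"
	"os"
	"strings"
)

// emBPF is the ELF machine number for eBPF objects (EM_BPF = 247).
const emBPF = elf.Machine(247)

// isProbeSection reports whether a section name follows the
// "kprobe/<symbol>" or "kretprobe/<symbol>" convention.
func isProbeSection(name string) bool {
	return strings.HasPrefix(name, "kprobe/") || strings.HasPrefix(name, "kretprobe/")
}

// inspect prints probe sections and named symbols of an eBPF object file,
// roughly a tiny subset of what `readelf -a -S` shows.
func inspect(path string) error {
	f, err := elf.Open(path)
	if err != nil {
		return err
	}
	defer f.Close()
	if f.Machine != emBPF {
		return fmt.Errorf("%s: machine %v is not eBPF", path, f.Machine)
	}
	for _, s := range f.Sections {
		if isProbeSection(s.Name) {
			fmt.Println("probe section:", s.Name)
		}
	}
	syms, err := f.Symbols()
	if err != nil {
		return err
	}
	for _, sym := range syms {
		if sym.Name == "" {
			continue // skip unnamed section symbols
		}
		sec := "?" // special indices such as SHN_ABS have no section
		if int(sym.Section) < len(f.Sections) {
			sec = f.Sections[sym.Section].Name
		}
		fmt.Printf("symbol %-20s section %s\n", sym.Name, sec)
	}
	return nil
}

func main() {
	path := "ns.o"
	if len(os.Args) > 1 {
		path = os.Args[1]
	}
	if err := inspect(path); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```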

Deploying eBPF programs to the kernel using Go

Earlier, we mentioned BCC, which provides convenient interfaces for compiling eBPF programs and hooking them into the kernel. However, BCC compiles and runs eBPF programs on the target machine, so it needs LLVM and the kernel headers installed there, which isn't always feasible when no build environment is available. In scenarios like this, it's ideal to embed the compiled ELF object in the binary's data segment, maximizing portability across machines.

Besides providing bindings for libbcc, the gobpf package can load eBPF programs from compiled bytecode. By combining it with a tool such as packr, which embeds blobs into Go applications, we have all the tools necessary for distributing binary files without runtime dependencies.

We slightly modify our eBPF program so that it prints a message to the kernel trace pipe whenever the kprobe fires. For brevity, we omit the printt macro and other eBPF helper definitions, but you can find the relevant definitions in this header file.

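```c
SEC("kprobe/sys_setns")
int kprobe__sys_setns(struct pt_regs *ctx) {
    /* First argument of setns(): the namespace file descriptor.
       On newer x86-64 kernels the syscall entry is wrapped (e.g. __x64_sys_setns),
       so the probed symbol and argument access may need adjusting. */
    int fd = (int)PT_REGS_PARM1(ctx);
    /* Upper 32 bits of pid_tgid hold the thread group ID, i.e. the user-space PID. */
    int pid = bpf_get_current_pid_tgid() >> 32;
    /* printt is the tracing macro whose definition is omitted above. */
    printt("process with pid %d joined ns through fd %d", pid, fd);
    return 0;
}
```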
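The shift by 32 in the program above works because bpf_get_current_pid_tgid() packs two IDs into one 64-bit value: the thread group ID (what user space calls the PID) in the upper half and the kernel thread ID in the lower half. The Go sketch below shows the same split; splitPidTgid is a hypothetical helper, and the sample value is made up.

```go
package main

import "fmt"

// splitPidTgid splits a value as returned by bpf_get_current_pid_tgid():
// upper 32 bits = thread group ID (user-space PID), lower 32 bits = thread ID.
func splitPidTgid(v uint64) (tgid, tid uint32) {
	return uint32(v >> 32), uint32(v)
}

func main() {
	v := uint64(1234)<<32 | 1240 // hypothetical: thread 1240 of process 1234
	tgid, tid := splitPidTgid(v)
	fmt.Println(tgid, tid) // 1234 1240
}
```

For a single-threaded process both halves are equal, which is why the lower half alone is not a reliable process identifier.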

We can now start writing Go code to load the eBPF bytecode. We'll implement a small abstraction called KprobeTracer on top of gobpf:

```go
package main

import (
	"bytes"
	"errors"
	"fmt"

	bpflib "github.com/iovisor/gobpf/elf"
)

// KprobeTracer is a thin wrapper around a gobpf ELF module.
type KprobeTracer struct {
	// bytecode is the byte stream with the embedded eBPF program
	bytecode []byte
	// mod is the eBPF module associated with this tracer. The module is a
	// collection of maps, probes, etc.
	mod *bpflib.Module
}

// NewKprobeTracer parses the ELF object held in bytecode.
func NewKprobeTracer(bytecode []byte) (*KprobeTracer, error) {
	mod := bpflib.NewModuleFromReader(bytes.NewReader(bytecode))
	if mod == nil {
		return nil, errors.New("ebpf is not supported")
	}
	return &KprobeTracer{mod: mod, bytecode: bytecode}, nil
}

// EnableAllKprobes enables all kprobes/kretprobes in the module. The argument
// determines the maximum number of instances of the probed functions that can
// be handled simultaneously.
func (t *KprobeTracer) EnableAllKprobes(maxActive int) error {
	// Load programs and maps into the kernel; no per-section parameters.
	params := make(map[string]bpflib.SectionParams)
	if err := t.mod.Load(params); err != nil {
		return fmt.Errorf("unable to load module: %v", err)
	}
	if err := t.mod.EnableKprobes(maxActive); err != nil {
		return fmt.Errorf("cannot initialize kprobes: %v", err)
	}
	return nil
}
```

Now we are ready to start tracing with the kernel probe:

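```go
package main

import (
	"log"
	"os"
	"os/signal"
	"syscall"

	"github.com/gobuffalo/packr"
)

func main() {
	// The box holds the compiled eBPF object files embedded in the binary.
	box := packr.NewBox("/directory/to/your/object/files")
	bytecode, err := box.Find("ns.o")
	if err != nil {
		log.Fatal(err)
	}

	ktracer, err := NewKprobeTracer(bytecode)
	if err != nil {
		log.Fatal(err)
	}

	if err := ktracer.EnableAllKprobes(10); err != nil {
		log.Fatal(err)
	}

	// Block until interrupted: once the process exits, its file descriptors
	// are closed and the eBPF program stops running.
	sig := make(chan os.Signal, 1)
	signal.Notify(sig, os.Interrupt, syscall.SIGTERM)
	<-sig
}
```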

While the program is running, use sudo cat /sys/kernel/debug/tracing/trace_pipe to read the debug messages written to the trace pipe. The easiest way to test the eBPF program is to exec into a running Docker container:

```shell
$ docker exec -it nginx /bin/bash
```

Behind the scenes, the container runtime moves the bash process into the namespaces of the nginx container by calling setns. The first argument, obtained through the PT_REGS_PARM1 macro, is the file descriptor referring to the namespace, which corresponds to one of the symbolic links in the /proc//ns directory. This lets us monitor when a process joins that namespace. The feature may not be very useful by itself, but it illustrates how to trace system call executions and obtain their arguments.
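To relate the traced file descriptor to a concrete namespace, it helps to know that each namespace link resolves to a target such as net:[4026531992], where the number is the namespace's inode. Two processes are in the same namespace exactly when these inode numbers match. The Go sketch below (Go 1.18+ for strings.Cut) reads the links of the current process from /proc/self/ns; parseNsLink and listNamespaces are hypothetical helpers, not part of gobpf.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strconv"
	"strings"
)

// parseNsLink parses a namespace link target such as "net:[4026531992]"
// into its namespace type and inode number.
func parseNsLink(target string) (string, uint64, error) {
	typ, rest, ok := strings.Cut(target, ":[")
	if !ok || !strings.HasSuffix(rest, "]") {
		return "", 0, fmt.Errorf("unexpected namespace link %q", target)
	}
	ino, err := strconv.ParseUint(strings.TrimSuffix(rest, "]"), 10, 64)
	if err != nil {
		return "", 0, err
	}
	return typ, ino, nil
}

// listNamespaces maps each link name in dir (e.g. /proc/self/ns) to the
// inode number of the namespace it points to.
func listNamespaces(dir string) (map[string]uint64, error) {
	entries, err := os.ReadDir(dir)
	if err != nil {
		return nil, err
	}
	out := make(map[string]uint64, len(entries))
	for _, e := range entries {
		target, err := os.Readlink(filepath.Join(dir, e.Name()))
		if err != nil {
			return nil, err
		}
		_, ino, err := parseNsLink(target)
		if err != nil {
			return nil, err
		}
		out[e.Name()] = ino
	}
	return out, nil
}

func main() {
	ns, err := listNamespaces("/proc/self/ns")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	for name, ino := range ns {
		fmt.Printf("%-18s %d\n", name, ino)
	}
}
```

Running it on the host and inside the nginx container should show different inode numbers for the namespaces the container isolates.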

Using eBPF Maps

Writing results to the trace pipe is fine for debugging, but in a production environment we need a more advanced mechanism for sharing state between user space and kernel space. This is where eBPF maps come in. Maps are efficient in-kernel key/value stores designed for data aggregation, which user space can access asynchronously. There are many map types, but for our example we will use a BPF_MAP_TYPE_PERF_EVENT_ARRAY map. It can carry custom data structures and push them to user-space processes through perf event ring buffers.

The gobpf library can create perf maps and deliver their event streams to Go channels. We can add the following code to receive the C structures in our program:

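```go
// This fragment lives inside a KprobeTracer method returning (quit, error).
// It assumes t.maps is a map[string]*bpflib.PerfMap field, mapName is the
// name of the perf map, and lost is an optional func(uint64) callback.
rxChan := make(chan []byte)
lostChan := make(chan uint64)

pmap, err := bpflib.InitPerfMap(
	t.mod,
	mapName,
	rxChan,
	lostChan,
)
if err != nil {
	return quit, err
}

if _, found := t.maps[mapName]; !found {
	t.maps[mapName] = pmap
}

go func() {
	for {
		select {
		case pe := <-rxChan:
			if len(pe) == 0 {
				continue
			}
			// Reinterpret the raw sample as the C struct; this requires cgo
			// and a matching ns_evt_t definition in the cgo preamble.
			nsJoin := (*C.struct_ns_evt_t)(unsafe.Pointer(&pe[0]))
			log.Printf("%+v", *nsJoin)
		case l := <-lostChan:
			// l is the number of events lost because the ring buffer was full.
			if lost != nil {
				lost(l)
			}
		}
	}
}()

pmap.PollStart()
```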

We create the Rx and lost channels and pass them to the InitPerfMap function, together with a reference to the module and the name of the perf map whose events we want to consume. Each time a new event arrives on the Rx channel, we cast the raw bytes to a pointer to the C structure (ns_evt_t) defined in the eBPF program. On the eBPF side, we also need to declare the perf map and send the data structure with the bpf_perf_event_output helper function:

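```c
/* Perf event array map; gobpf exposes it under the name "ns". */
struct bpf_map_def SEC("maps/ns") ns_map = {
    .type = BPF_MAP_TYPE_PERF_EVENT_ARRAY,
    .key_size = sizeof(int),
    .value_size = sizeof(u32),
    .max_entries = 1024,
    .pinning = 0,
    .namespace = "",
};

/* Inside kprobe__sys_setns: */
struct ns_evt_t evt = {};
/* Initialize structure fields. */

/* Send evt to user space through the current CPU's perf ring buffer. */
u32 cpu = bpf_get_smp_processor_id();
bpf_perf_event_output(ctx, &ns_map,
                      cpu,
                      &evt, sizeof(evt));
```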
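Casting to C.struct_ns_evt_t requires cgo. A cgo-free alternative is to decode the raw sample with encoding/binary. The article does not show the fields of ns_evt_t, so the sketch below assumes a hypothetical layout of struct ns_evt_t { __u32 pid; __s32 fd; } on a little-endian host; the Go struct must mirror the real C definition field for field, including padding. The nsEvent type and decodeNsEvent helper are illustrative names.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"fmt"
)

// nsEvent mirrors a HYPOTHETICAL C layout:
//   struct ns_evt_t { __u32 pid; __s32 fd; };
// Adjust it to match the real ns_evt_t exactly.
type nsEvent struct {
	Pid uint32
	Fd  int32
}

// decodeNsEvent decodes one perf sample. Trailing padding added to the
// raw sample is ignored because binary.Read consumes only 8 bytes.
func decodeNsEvent(raw []byte) (nsEvent, error) {
	var evt nsEvent
	if err := binary.Read(bytes.NewReader(raw), binary.LittleEndian, &evt); err != nil {
		return nsEvent{}, fmt.Errorf("decode ns_evt_t: %w", err)
	}
	return evt, nil
}

func main() {
	sample := []byte{0xd2, 0x04, 0, 0, 3, 0, 0, 0} // pid 1234, fd 3
	evt, err := decodeNsEvent(sample)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("pid %d joined ns through fd %d\n", evt.Pid, evt.Fd)
}
```

In the goroutine shown earlier, the cgo cast could then be replaced by a call to decodeNsEvent(pe).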

Conclusion

eBPF continues to evolve and find broader application, and each kernel release brings new eBPF features and improvements. Its low overhead and in-kernel programmability make it very attractive for a wide range of use cases. For instance, the Suricata intrusion detection system uses it to implement advanced socket load-balancing policies and to filter packets early in the Linux network stack. Cilium relies heavily on eBPF to enforce complex security policies for containers. The Sematext Agent uses eBPF to pinpoint events that require attention, such as broadcast kill signals or OOM notifications, for Docker and Kubernetes monitoring as well as general service monitoring. It also captures TCP/UDP traffic statistics with eBPF, providing a low-overhead option for network monitoring. eBPF appears well on its way to becoming the de facto standard for Linux kernel monitoring.