1 -> DeepSeek AI Overview

DeepSeek AI is a series of artificial intelligence models developed by the Chinese company DeepSeek, known for their efficient performance and low training cost. A brief introduction follows:

1.1 -> Technical Features of DeepSeek AI

- Mixture of Experts (MoE) Architecture: DeepSeek-V3 uses an MoE architecture with 671 billion total parameters, of which only 37 billion are activated per token. A dynamic redundancy strategy keeps the load across experts balanced during inference and training, which significantly reduces computational cost while maintaining high performance.

- Multi-Head Latent Attention (MLA): Uses low-rank joint compression to compress the attention keys and values into low-dimensional latent vectors, significantly reducing the memory used by the key-value (KV) cache.

- Load Balancing Without Auxiliary Loss: Balances the load across experts without an auxiliary loss term, minimizing the performance degradation that encouraging load balance would otherwise cause.

- Multi-Token Prediction (MTP): Trains with a multi-token prediction objective, which has been shown to benefit model performance and can also be used to accelerate inference.

- FP8 Mixed Precision Training: Introduces an FP8 mixed precision training framework and verifies, for the first time, the feasibility and effectiveness of FP8 training on an extremely large-scale model.

- Knowledge Distillation: Reasoning capability is distilled from a long chain-of-thought (CoT) model in the DeepSeek-R1 series into a standard LLM, significantly improving that model's reasoning performance.
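To make the MoE and auxiliary-loss-free load balancing ideas above more concrete, here is a minimal toy sketch in plain Python. It is not DeepSeek's implementation: the function names `route_tokens` and `update_bias`, the step size, and the random scores are all assumptions made for illustration. The idea it tries to show is that a per-expert bias is used only when choosing which experts a token goes to, and that the bias is nudged down for overloaded experts and up for underloaded ones.

```python
import random


def route_tokens(scores, bias, top_k):
    """Pick top_k experts per token (toy sketch, hypothetical helper).

    The bias only affects which experts are selected; the gate weights
    are computed from the raw scores of the selected experts.
    """
    assignments = []
    for token_scores in scores:
        ranked = sorted(
            range(len(token_scores)),
            key=lambda e: token_scores[e] + bias[e],
            reverse=True,
        )
        chosen = ranked[:top_k]
        total = sum(token_scores[e] for e in chosen)
        assignments.append({e: token_scores[e] / total for e in chosen})
    return assignments


def update_bias(bias, assignments, step=0.01):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    load = [0] * len(bias)
    for gates in assignments:
        for expert in gates:
            load[expert] += 1
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean_load:
            new_bias.append(b - step)
        elif l < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


random.seed(0)
NUM_EXPERTS, TOP_K = 8, 2  # toy sizes, far smaller than a real model
bias = [0.0] * NUM_EXPERTS
for _ in range(200):
    # Expert 0 gets artificially high scores so that it starts out overloaded.
    scores = [
        [random.random() + (0.5 if e == 0 else 0.0) for e in range(NUM_EXPERTS)]
        for _ in range(64)
    ]
    assignments = route_tokens(scores, bias, TOP_K)
    bias = update_bias(bias, assignments)

print("learned bias per expert:", [round(b, 2) for b in bias])
```

Only `TOP_K` of the `NUM_EXPERTS` experts run for each token, which is the same reason only a fraction of DeepSeek-V3's parameters are active at a time. In this toy run the bias of the favored expert drifts negative, which steers tokens toward the other experts without adding any extra loss term.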

1.2 -> DeepSeek AI Model Release

- DeepSeek-V3: Released in December 2024 with 671 billion total parameters, an innovative MoE architecture, and FP8 mixed precision training. Its reported training cost is only $5.576 million. It ranked seventh on Chatbot Arena and first among open-source models, making it the most cost-effective of the global top ten models.

- DeepSeek-R1: Released in January 2025 and open-sourced, with performance comparable to the official release of OpenAI’s o1. It ranked third on the overall Chatbot Arena leaderboard, tied with OpenAI’s o1, and performs especially well on high-difficulty tasks.

- Janus-Pro: Released on January 28, 2025, in 7B (7 billion) and 1.5B (1.5 billion) parameter versions, both open-source. It brings significant advances in multimodal understanding and text-to-image instruction following, generates images from text more stably, and outperforms OpenAI’s DALL-E 3 and Stable Diffusion on several benchmarks.
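The $5.576 million training cost quoted for DeepSeek-V3 can be reproduced with simple arithmetic. The sketch below uses the GPU-hour breakdown and the $2 per GPU hour rental price as given in the DeepSeek-V3 technical report; these inputs are not stated in this article, so treat them as an outside assumption. The figure covers GPU rental for the final training run only.

```python
# Thousands of H800 GPU hours per training stage, as reported in the
# DeepSeek-V3 technical report (assumption: not stated in this article).
GPU_HOURS_K = {
    "pre-training": 2664,
    "context extension": 119,
    "post-training": 5,
}
PRICE_PER_GPU_HOUR_USD = 2.0  # rental price assumed in the report


def training_cost_usd(gpu_hours_k, price_per_hour):
    """Total rental cost in US dollars for the given GPU-hour breakdown."""
    return sum(gpu_hours_k.values()) * 1_000 * price_per_hour


total = training_cost_usd(GPU_HOURS_K, PRICE_PER_GPU_HOUR_USD)
print(f"${total / 1e6:.3f} million")  # prints "$5.576 million"
```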

1.3 -> Application Domains

- Natural Language Processing: Understands and answers user questions and performs text generation, translation, summarization, and similar tasks, with applications in smart customer service, content creation, information retrieval, and other fields.

- Code Generation and Debugging: Supports code generation, debugging, and data analysis tasks in various programming languages, helping programmers improve efficiency.

- Multimodal Tasks: Models such as Janus-Pro can perform tasks such as text-to-image and image-to-text, with potential applications in image generation and image understanding.

1.4 -> Advantages and Impact

- Cost-Effectiveness: Algorithmic optimization and architectural innovation significantly reduce training and inference costs without sacrificing performance, making AI technology more accessible and easier to apply.

- Open Source Strategy: Adopts a fully open-source strategy, attracting attention from many developers and researchers, fostering collaboration within the AI community, and accelerating technological progress.

- Driving Industry Change: The success of DeepSeek challenges the traditional “bigger is better” AI development model, providing new development ideas and directions for the industry, inspiring more innovation and exploration.

2 -> Local Deployment of DeepSeek

2.1 -> Install Ollama

- Go to the Ollama official website and click Download

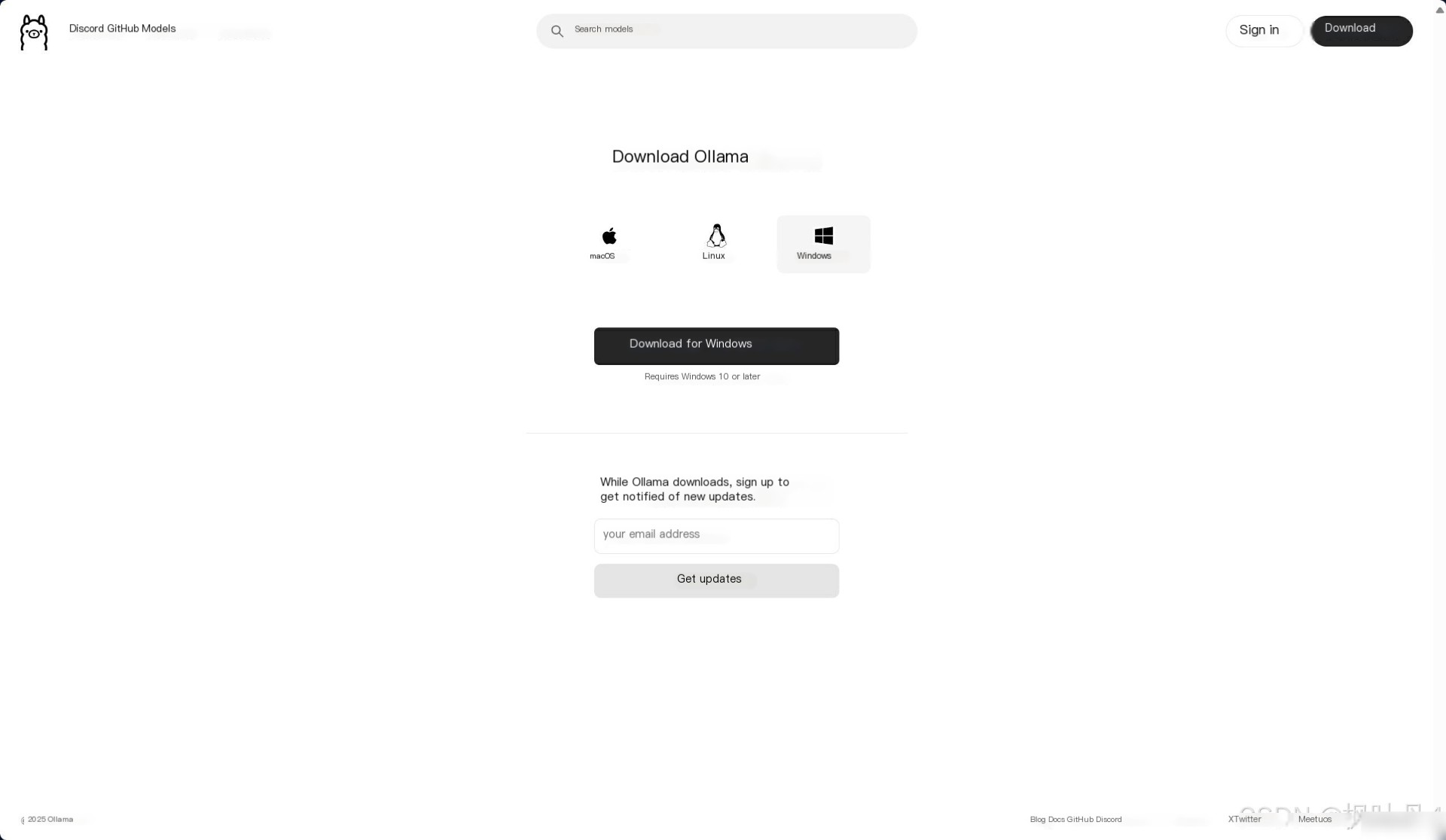

- Select your operating system; Windows is used as the example here

Click Download for Windows to start the download.

- After the download finishes, open the OllamaSetup file to start the installation

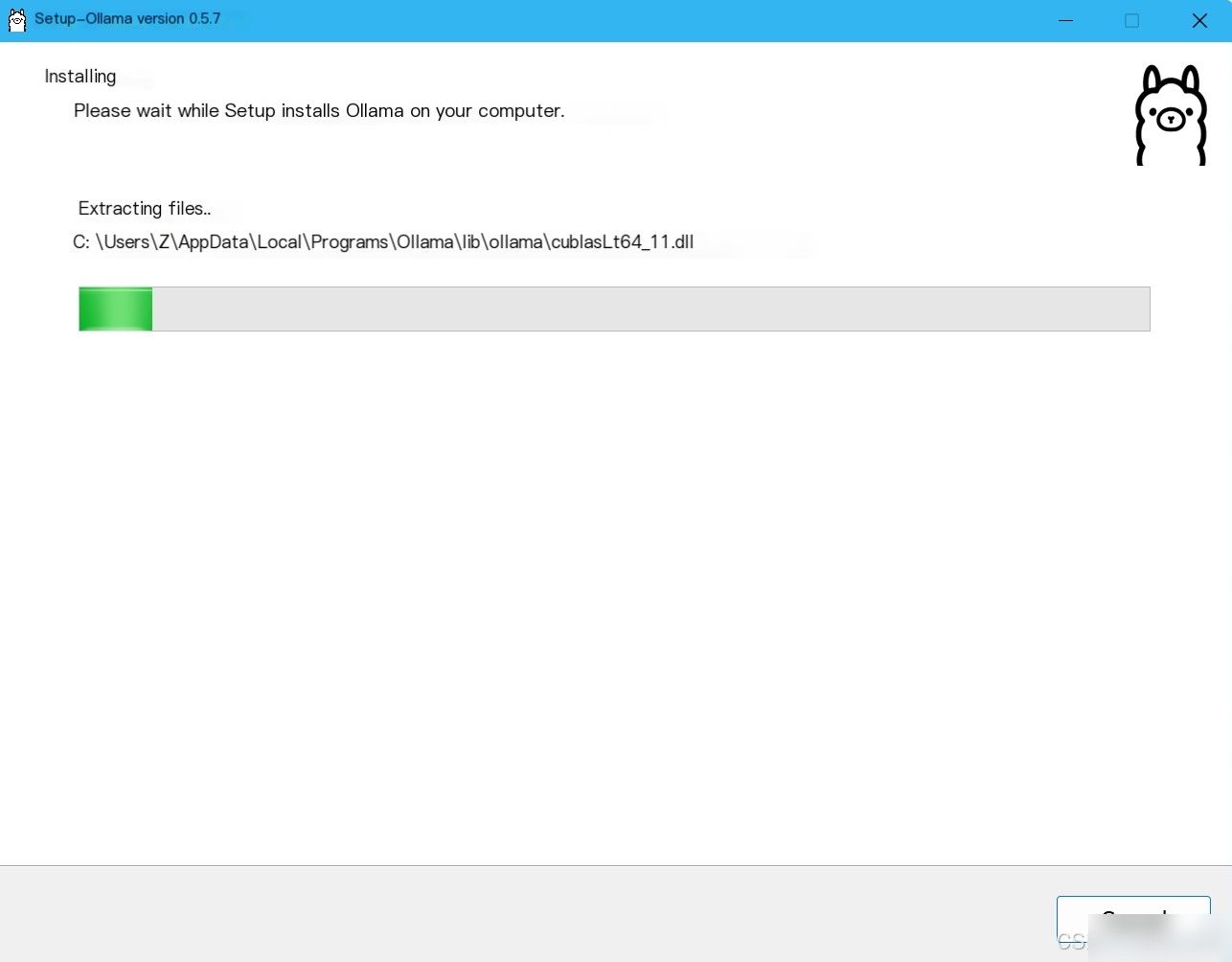

- Click Install and wait for the installation to complete

- Check if the installation was successful

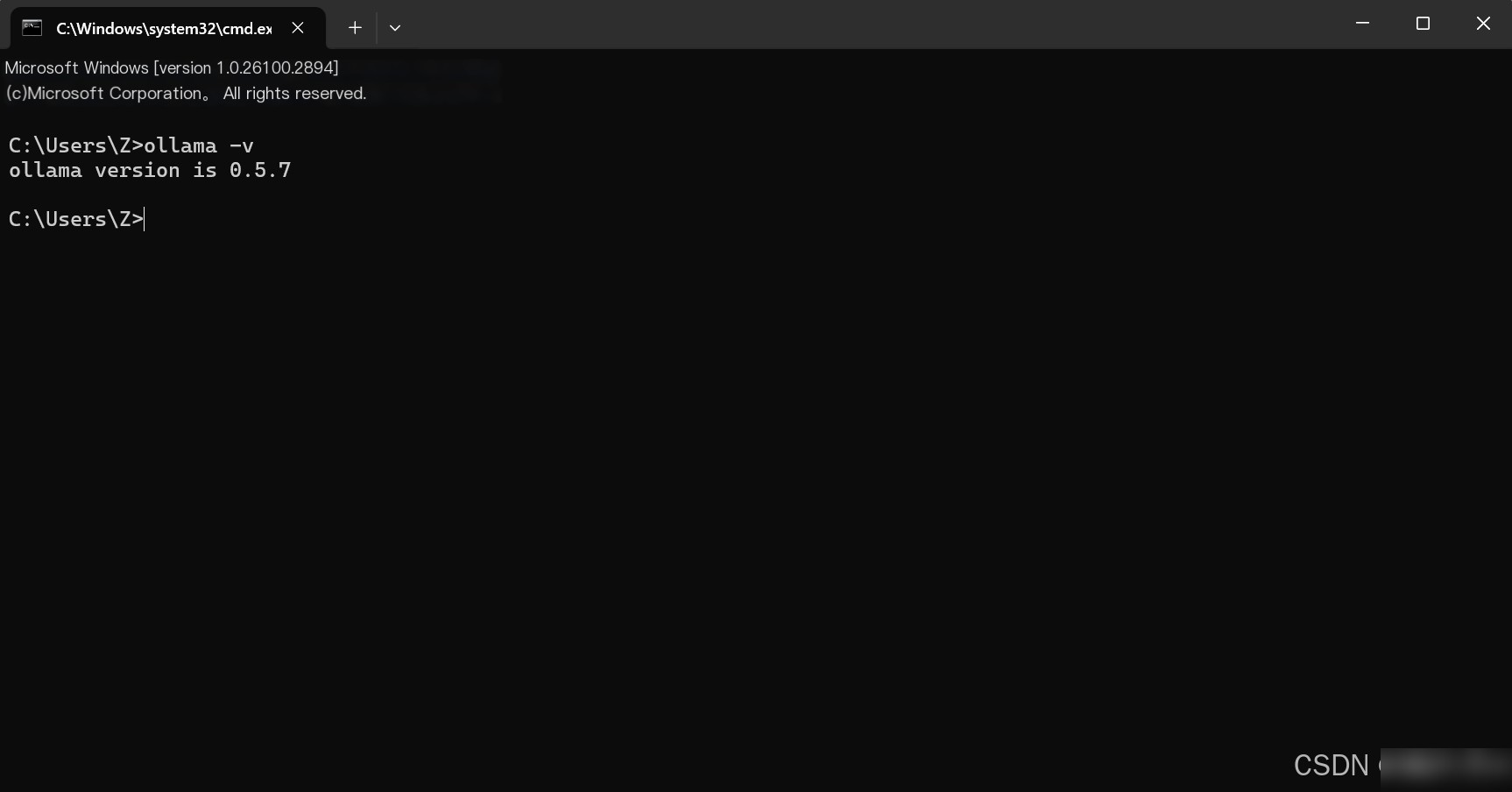

Press Win+R, type cmd, and press Enter to open the command prompt. Enter the command ollama -v. If a version number is printed, the installation was successful.
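The same check can be scripted. The following is a minimal sketch that runs `ollama -v` from Python and extracts a version number from whatever the command prints. The helper names `parse_version` and `ollama_version` are hypothetical, and the sketch deliberately makes no assumption about the exact wording of the output beyond it containing a dotted version number.

```python
import re
import shutil
import subprocess


def parse_version(output):
    """Return the first dotted version number found in the text, or None."""
    match = re.search(r"\d+\.\d+\.\d+", output)
    return match.group(0) if match else None


def ollama_version():
    """Run `ollama -v` and return its version string, or None if unavailable."""
    if shutil.which("ollama") is None:
        return None  # the executable is not on PATH
    result = subprocess.run(
        ["ollama", "-v"], capture_output=True, text=True, timeout=10
    )
    return parse_version(result.stdout + result.stderr)


if __name__ == "__main__":
    version = ollama_version()
    if version:
        print(f"Ollama {version} is installed")
    else:
        print("Ollama was not found")
```

If the script reports that Ollama was not found right after installing, opening a new command prompt so that the updated PATH is picked up is worth trying first.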

2.2 -> Deploy DeepSeek-R1 Model

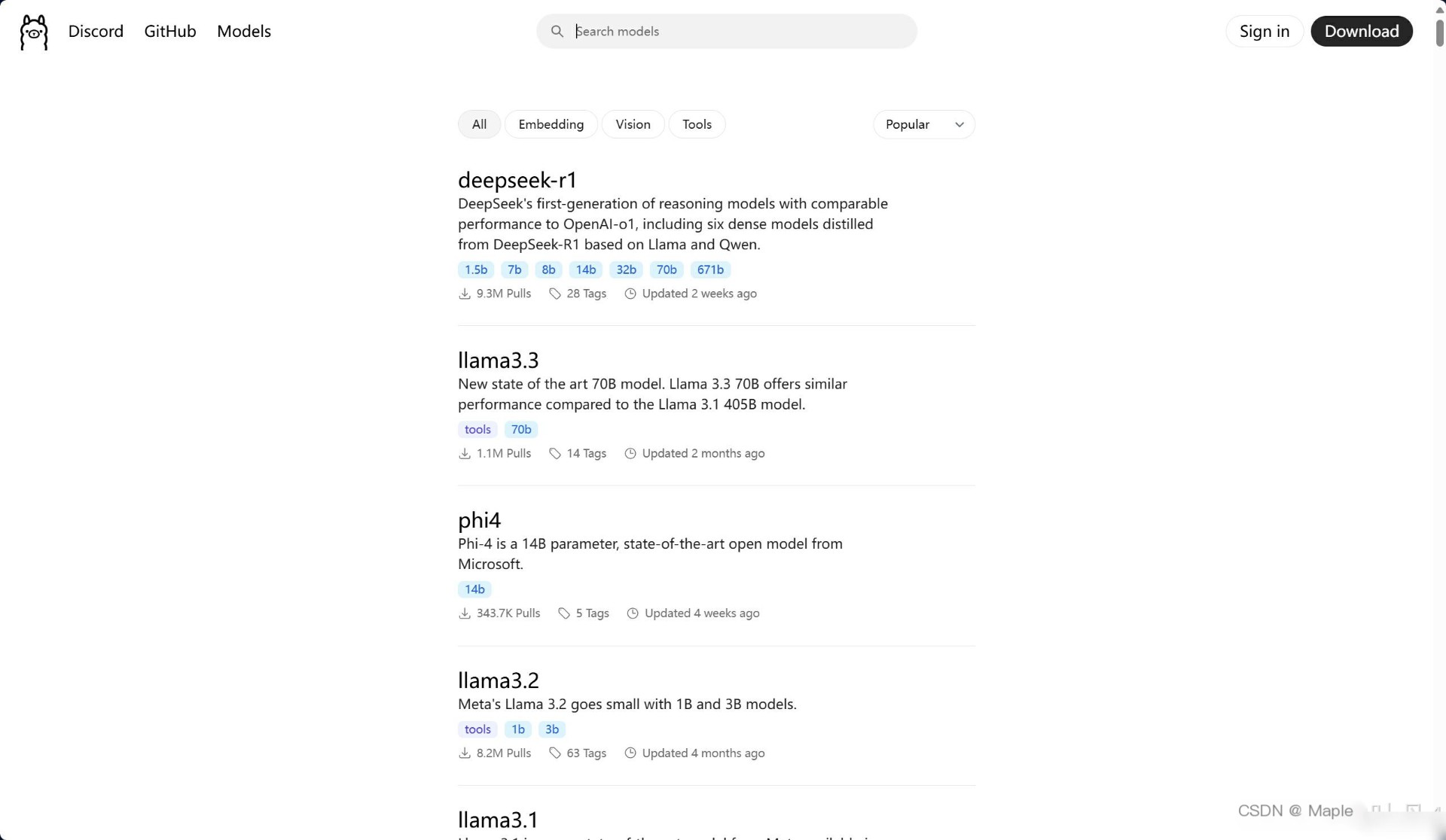

- Return to the Ollama official website and click Models in the top-left corner to open the model list shown below. Select the first entry, DeepSeek-R1, to open its page

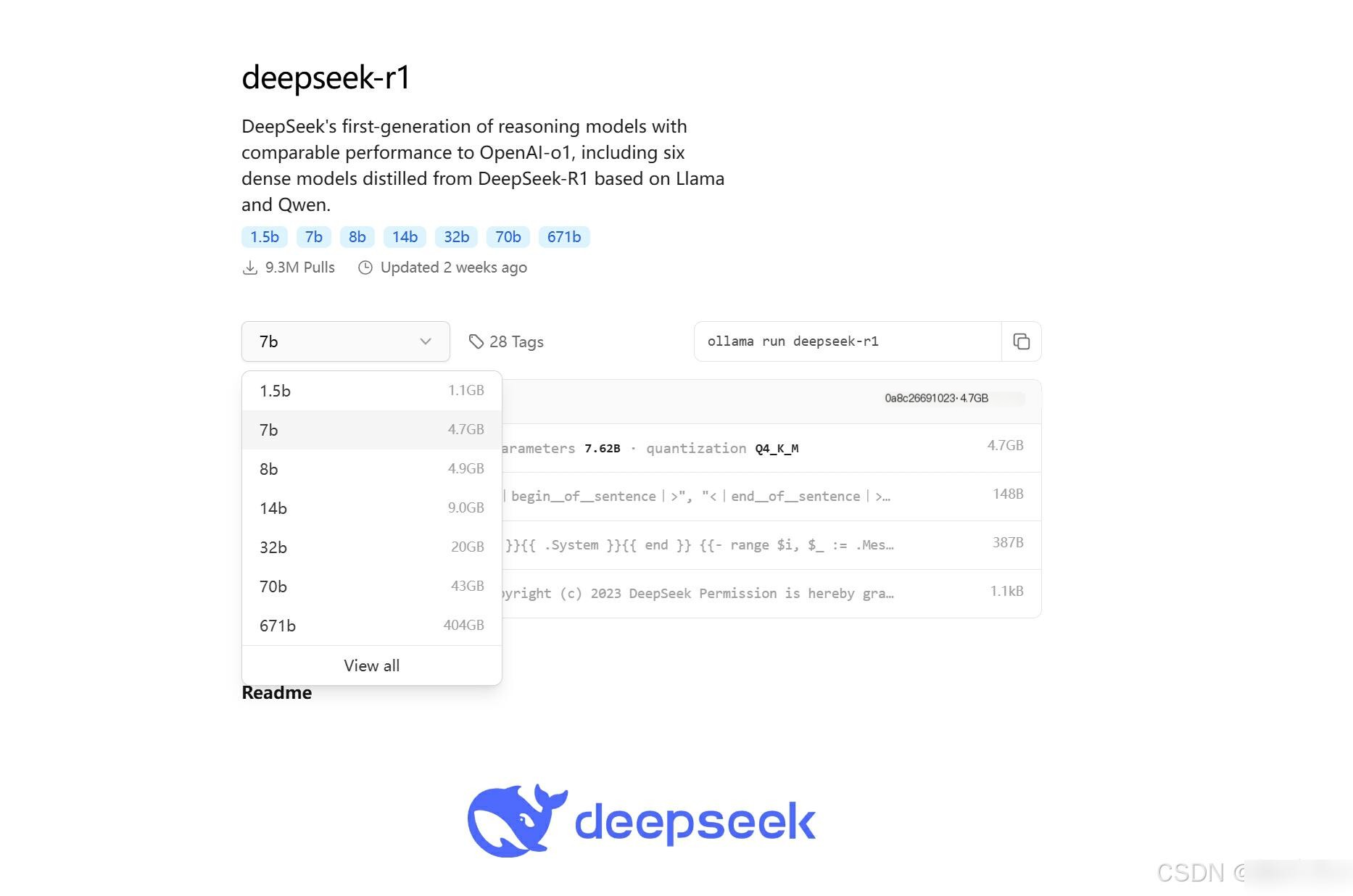

- Choose a version that suits your computer configuration

- After choosing, copy the command corresponding to the version

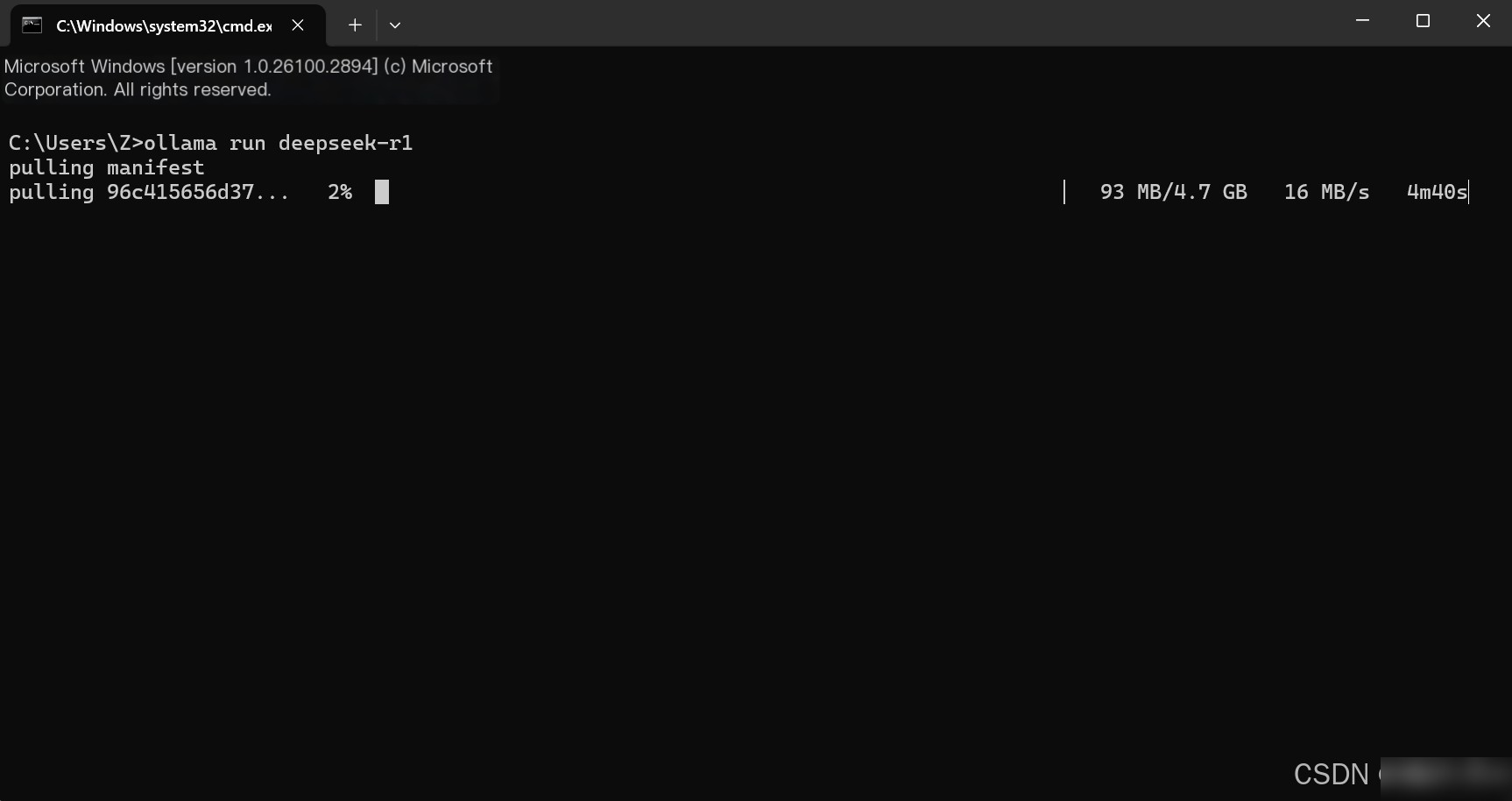

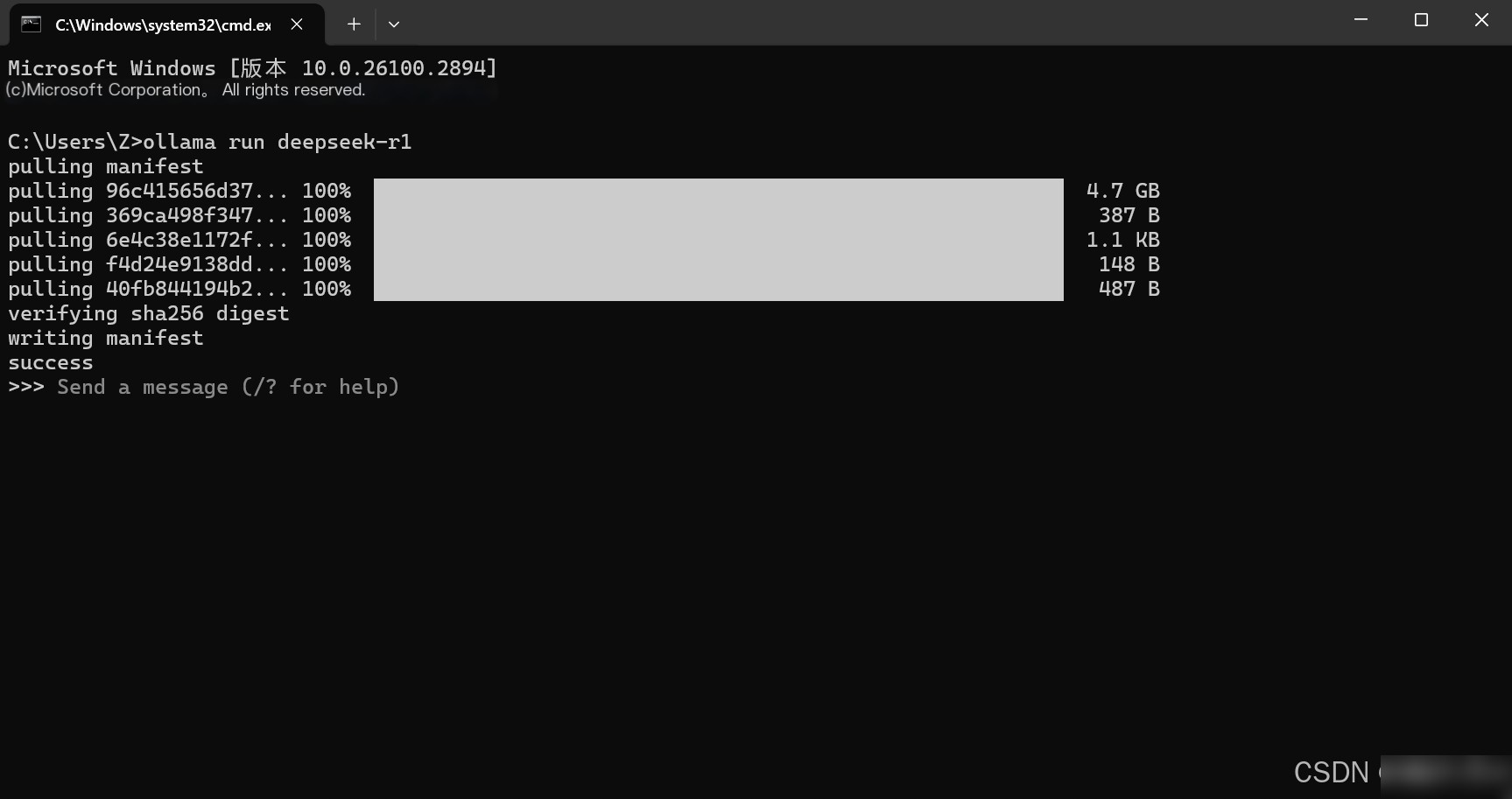

- Press Win+R, type cmd to open the command prompt, and paste the command you copied

If the download is slow, you can press Ctrl+C to stop the command and then run it again. The download resumes from where it left off and may run somewhat faster.
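The stop-and-retry approach described above can also be automated. Here is a minimal sketch that calls `ollama pull` repeatedly until it succeeds. The function name `pull_with_retry`, the number of attempts, and the wait time are assumptions made for illustration. It relies on the resume behavior described above, so a failed attempt should not throw away progress.

```python
import subprocess
import time


def pull_with_retry(model, attempts=3, wait_seconds=5, runner=subprocess.run):
    """Run `ollama pull <model>` until it succeeds or attempts run out.

    Returns the number of the successful attempt, or None if all failed.
    `runner` is injectable so the logic can be tried without Ollama.
    Raises FileNotFoundError if the ollama executable is not installed.
    """
    for attempt in range(1, attempts + 1):
        result = runner(["ollama", "pull", model])
        if result.returncode == 0:
            return attempt
        time.sleep(wait_seconds)  # brief pause before trying again
    return None
```

For example, `pull_with_retry("deepseek-r1:latest")` would download the same model that the later steps of this guide start with ollama run.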

- When the download finishes, a success message appears and you can start a conversation

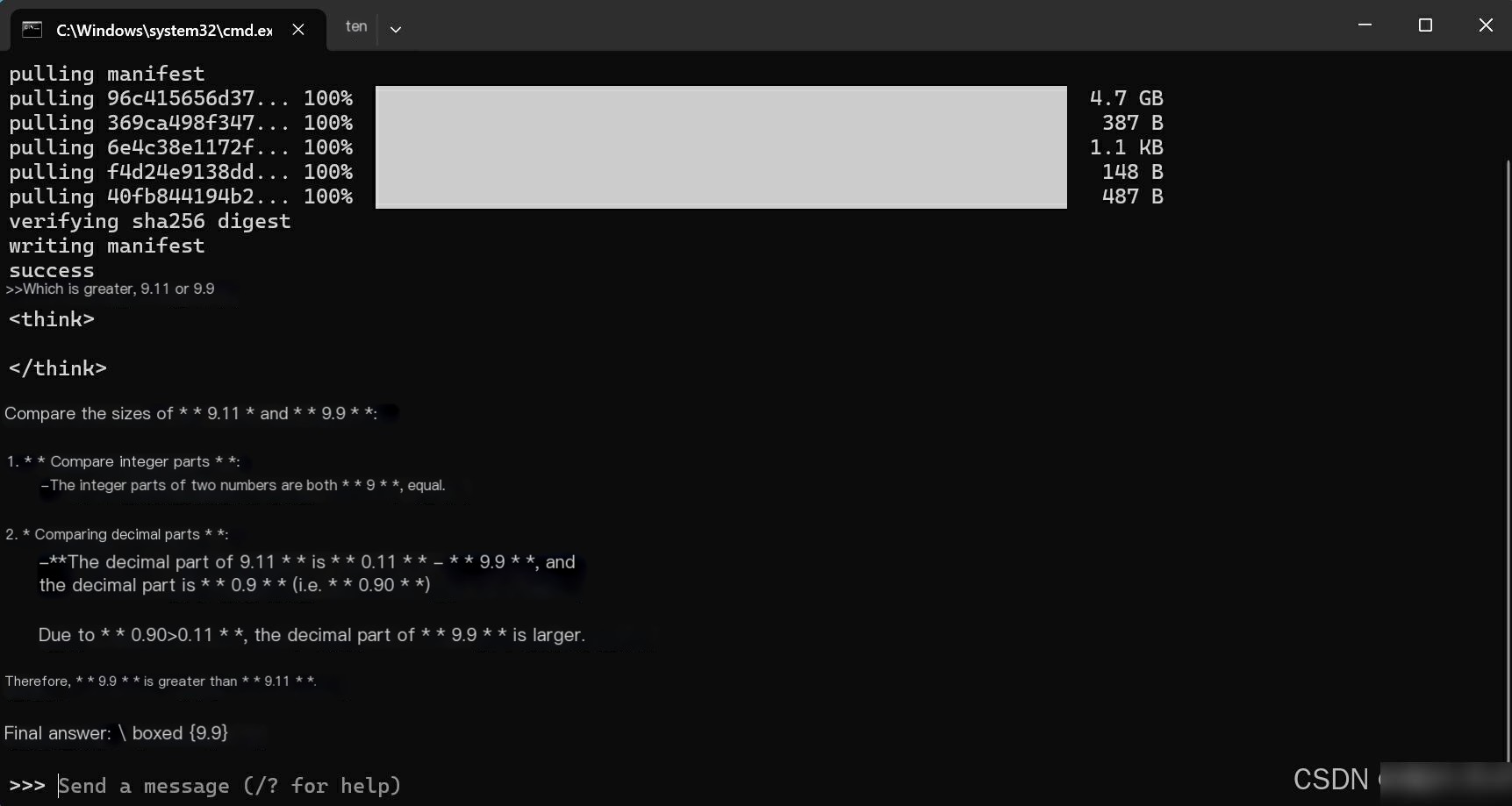

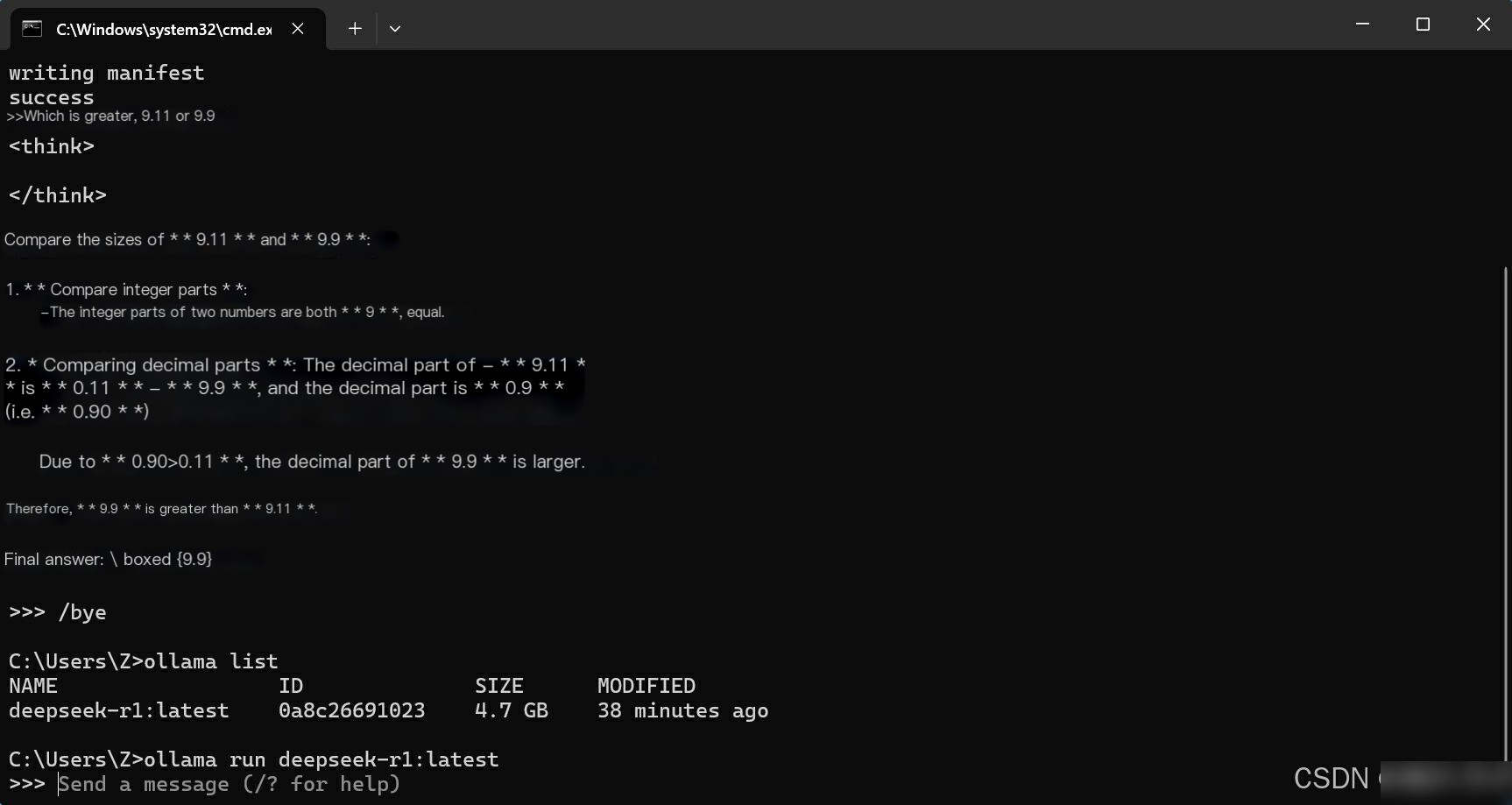

- Ask a question that AI models often get wrong: which is greater, 9.11 or 9.9?

The model shows its thought process followed by its final conclusion.
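If you save the model's reply and want to separate the thought process from the final conclusion, a small parser is enough. The sketch below assumes the reasoning is wrapped in `<think>` and `</think>` tags, which is how DeepSeek-R1 replies typically appear in the Ollama terminal. The function name `split_reasoning` and the sample reply are made up for illustration.

```python
import re

# Assumption: the reasoning part is wrapped in <think>...</think> tags.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text):
    """Return (reasoning, answer) from a reply that may contain <think> tags."""
    match = THINK_PATTERN.search(text)
    if match is None:
        return "", text.strip()  # no reasoning block found
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


# A made-up reply, used only to show the expected shape of the input.
sample = "<think>\n9.9 is 9.90, and 9.90 > 9.11.\n</think>\n\n9.9 is greater than 9.11."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```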

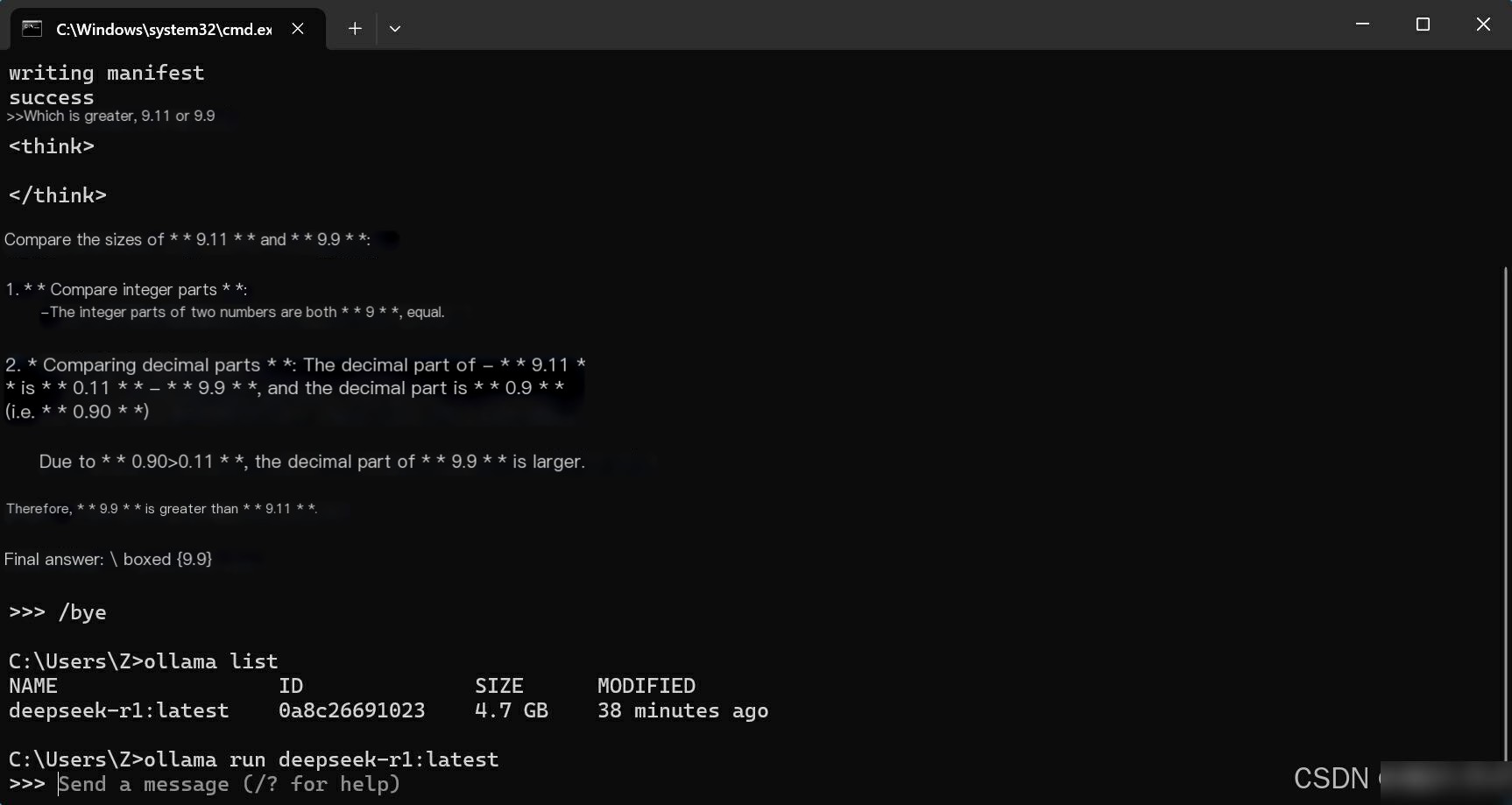

- Enter the command /bye to exit the conversation

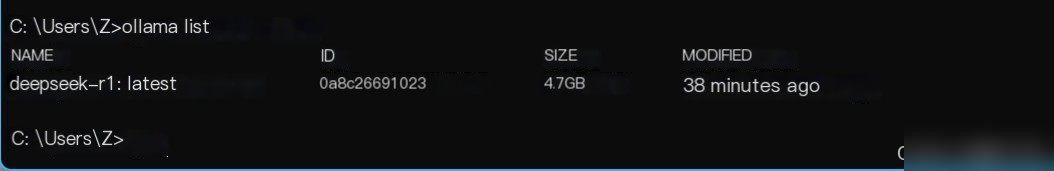

- Enter the command ollama list to view downloaded models

- Enter ollama run followed by the model name to start a conversation

For example, enter ollama run deepseek-r1:latest to re-enter the conversation.
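Besides the interactive terminal, a locally running Ollama also exposes an HTTP API, by default at `http://localhost:11434`. The following is a minimal sketch of sending one prompt to the `/api/generate` endpoint using only the Python standard library. The helper names `build_request` and `ask` are hypothetical, and the sketch assumes Ollama is running with its default address and that the deepseek-r1:latest model has already been downloaded.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="deepseek-r1:latest", url=OLLAMA_URL):
    """Build a POST request asking for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="deepseek-r1:latest", timeout=300):
    """Send the prompt to the local model and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.loads(reply.read())["response"]
```

Calling `ask("Which is greater, 9.11 or 9.9?")` should return the same kind of reply you see in the terminal, provided the Ollama service is running. Larger models can take a while to answer, which is why the timeout here is generous.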

And that’s how you complete the local deployment of DeepSeek.