1. Introduction

Netty is a widely popular asynchronous event-driven Java open-source framework for network applications, ideal for the rapid development of maintainable and high-performance protocol servers and clients. For more details, visit the [Netty framework](https://netty.io/) website.

This article is based on Netty 4.1. It introduces the relevant theoretical models, usage scenarios, basic components, and overall architecture, so that we understand not only how things work but also why they work that way. I hope it provides guidance for practical development and for studying open-source projects.

Issues with Native JDK NIO Programs

The JDK natively provides a set of network programming APIs (NIO), but using them directly has a series of problems, mainly the following:

1) The NIO libraries and APIs are complex and cumbersome to use: you need a solid command of Selector, ServerSocketChannel, SocketChannel, ByteBuffer, and more.

2) Additional foundational skills are required: for instance, familiarity with Java multithreaded programming, because NIO programming involves the Reactor pattern. You must be very familiar with both multithreading and network programming to write high-quality NIO programs.

3) Making NIO reliable takes significant development effort and adds complexity: for example, the client side must handle reconnecting after disconnection, transient network failures, half-packet reads and writes, failure caching, network congestion, and abnormal byte streams. With NIO, the functional part is relatively easy to develop, but the workload and complexity of making it reliable are very large.

4) Bugs in JDK NIO: for example, the notorious epoll bug, which can cause the Selector to spin in an empty loop and eventually drive CPU utilization to 100%. It was officially reported as fixed in JDK 1.6 update 18, yet the problem persisted into JDK 1.7; it occurred less often but was never fundamentally resolved.

4. Characteristics of Netty

Netty wraps the native NIO API provided by the JDK to address the aforementioned issues.

The main features of Netty include:

1) Elegant Design: a unified API for various transport types, including blocking and non-blocking sockets; a flexible, extensible event model with a clear separation of concerns; a highly customizable threading model (a single thread, or one or more thread pools); and true support for connectionless datagram sockets (since 3.1).

2) Easy to Use: Comprehensive Javadoc documentation, user guides, and examples; no additional dependencies needed, only JDK 5 (Netty 3.x) or 6 (Netty 4.x) is sufficient.

3) High Performance: higher throughput and lower latency; lower resource consumption; minimized unnecessary memory copying.

4) Security: Full SSL/TLS and StartTLS support.

5) Active Community and Continuous Updates: the community is active and release cycles are short; discovered bugs are fixed promptly, and new features keep being added.

5. Common Use Cases for Netty

Common use cases for Netty are as follows:

1) Internet Industry: In distributed systems, nodes need to make remote service calls to one another, so a high-performance RPC framework is essential. As an asynchronous, high-performance communication framework, Netty is often used by these RPC frameworks as their fundamental communication component. A typical example is Alibaba's distributed service framework Dubbo, whose RPC layer uses the Dubbo protocol for inter-node communication; by default, the Dubbo protocol uses Netty as the underlying component for communication between process nodes.

2) Gaming Industry: Java is increasingly used for both mobile game servers and large-scale online games. As a high-performance foundational communication component, Netty provides TCP/UDP and HTTP protocol stacks and makes it easy to customize and develop private protocol stacks, so that account login servers, map servers, and similar components can communicate with one another efficiently.

3) Big Data: In Hadoop, the RPC framework of Avro, the classic high-performance communication and serialization component, uses Netty for inter-node communication by default; its Netty Service is a secondary wrapper around the Netty framework.

Interested readers can consult the “Related Projects” list on the Netty website to see which open-source projects currently use it.

6. Netty High-Performance Design

Netty, as an asynchronous event-driven network framework, derives its high performance primarily from its I/O model and threading model. The former determines how data is transmitted and received, while the latter dictates how data is processed.

6.1 I/O Model

What kind of channel is used to send data to the other party: BIO, NIO, or AIO? The I/O model largely determines the performance of the framework.

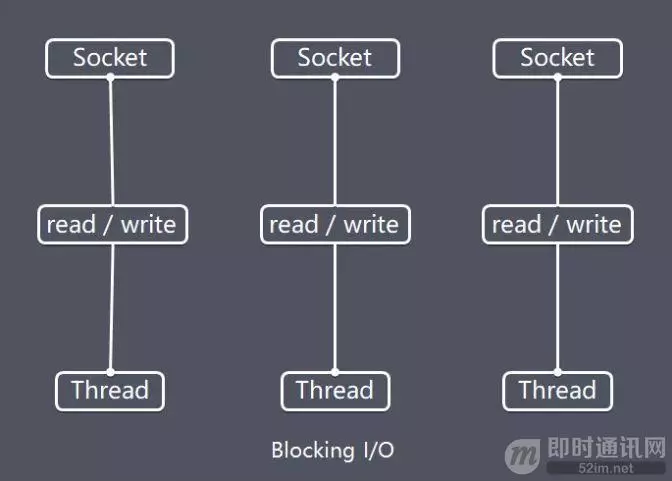

【Blocking I/O】:

Traditional blocking I/O (BIO) can be represented by the diagram below:

Its characteristics are as follows:

Each connection requires a dedicated thread that performs the entire sequence of reading data, business processing, and writing data.

When the concurrency is high, a large number of threads need to be created to handle connections, which results in significant system resource consumption.

Once the connection is established, if the current thread temporarily has no data to read, the thread becomes blocked on the Read operation, resulting in wasted thread resources.
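To make the thread-per-connection model concrete, here is a minimal BIO echo server written against the plain JDK `java.net` API (not Netty). The class name `BioEchoServer`, the upper-casing stand-in for business logic, and the use of an ephemeral loopback port are assumptions for illustration. Every accepted connection gets its own thread, which then sits blocked in `readLine()` whenever the client is idle.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

/** Thread-per-connection (BIO) echo server: a sketch of the model, not Netty code. */
final class BioEchoServer implements AutoCloseable {
    private final ServerSocket serverSocket;

    BioEchoServer() throws IOException {
        // Port 0 lets the OS pick a free port on the loopback interface.
        serverSocket = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        startDaemon(this::acceptLoop, "bio-acceptor");
    }

    int port() {
        return serverSocket.getLocalPort();
    }

    private void acceptLoop() {
        while (!serverSocket.isClosed()) {
            try {
                Socket socket = serverSocket.accept(); // blocks until a client connects
                // One dedicated thread per connection handles read -> process -> write.
                startDaemon(() -> handle(socket), "bio-conn-" + socket.getPort());
            } catch (IOException e) {
                return; // server socket was closed
            }
        }
    }

    private static void handle(Socket socket) {
        try (Socket s = socket;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String line;
            // readLine() parks this thread whenever the client has nothing to send.
            while ((line = in.readLine()) != null) {
                out.println(line.toUpperCase(Locale.ROOT)); // stand-in "business processing"
            }
        } catch (IOException ignored) {
            // connection closed or reset: the thread simply ends
        }
    }

    private static void startDaemon(Runnable task, String name) {
        Thread t = new Thread(task, name);
        t.setDaemon(true);
        t.start();
    }

    @Override
    public void close() throws IOException {
        serverSocket.close();
    }

    /** Starts a server, sends one line from a client, and returns the server's reply. */
    static String roundTrip(String message) {
        try (BioEchoServer server = new BioEchoServer();
             Socket client = new Socket(InetAddress.getLoopbackAddress(), server.port());
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(
                     new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8), true)) {
            out.println(message);
            return in.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

With 10,000 idle clients this design needs 10,000 threads, which is exactly the resource problem described above.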

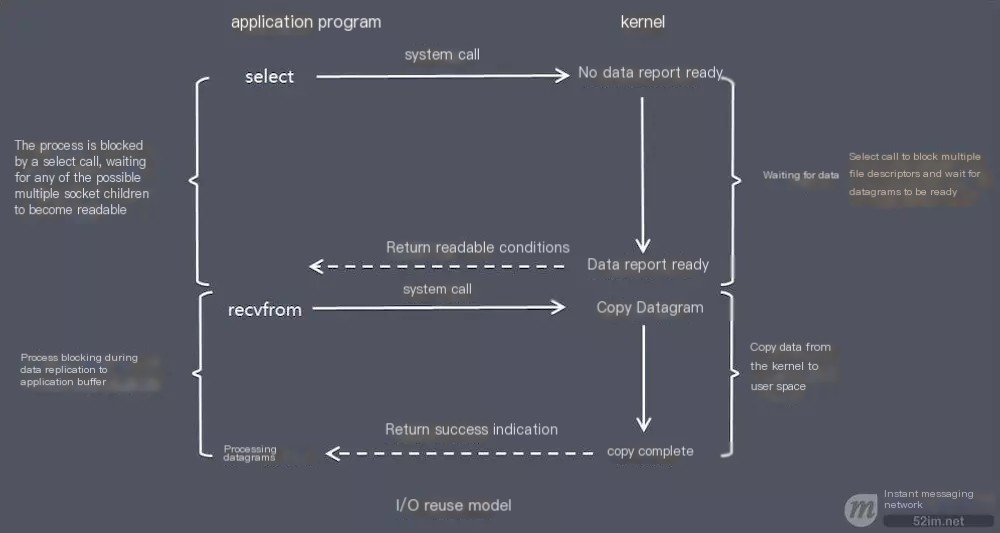

【I/O Multiplexing Model】:

In the I/O multiplexing model, the select (or poll) function is used, and it also blocks the process. Unlike blocking I/O, however, select and poll can block on multiple I/O operations at the same time: they monitor many read and write descriptors simultaneously, and the actual I/O operation is invoked only once data is ready to be read or written.

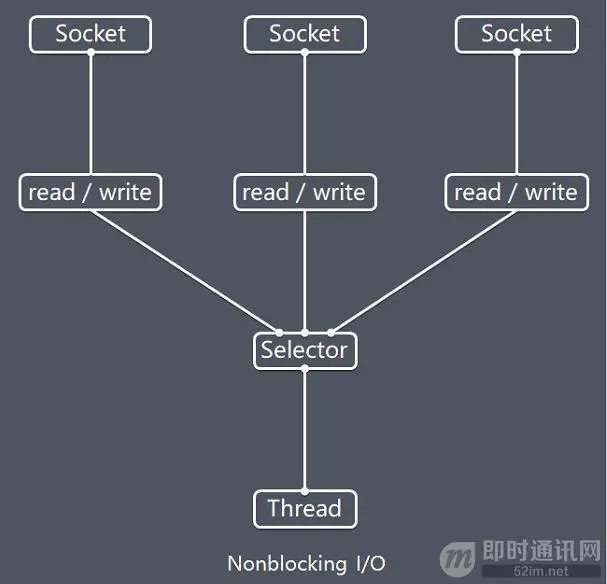

Netty’s non-blocking I/O is built on the I/O multiplexing model, represented here by the Selector object:

Netty’s I/O thread, NioEventLoop, aggregates a multiplexer (Selector), so it can handle hundreds or thousands of client connections concurrently.

When a thread reads or writes data from a client socket channel, if no data is available, the thread can perform other tasks.

Threads typically use the idle time of non-blocking I/O to perform I/O operations on other channels, allowing a single thread to manage multiple input and output channels.

Due to the non-blocking nature of read and write operations, the efficiency of IO threads can be significantly enhanced, preventing thread suspension caused by frequent I/O blocking.

A single I/O thread can concurrently handle N client connections and their read/write operations. This fundamentally solves the one-connection-per-thread problem of traditional synchronous blocking I/O and significantly improves performance, scalability, and reliability.
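The multiplexing idea can be sketched with the JDK’s own `Selector`, the building block that Netty’s `NioEventLoop` wraps. To stay self-contained, the sketch uses `Pipe` channels as stand-ins for client sockets (an assumption for illustration); `MultiplexingDemo` and `readAll` are hypothetical names. One thread and one `Selector` service every channel, and each `read()` returns immediately with whatever bytes are available.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

final class MultiplexingDemo {
    /** Sends one message through each of several pipes, then reads them all on one thread. */
    static List<String> readAll(List<String> messages) {
        List<Pipe> pipes = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            for (String msg : messages) {
                Pipe pipe = Pipe.open();
                pipes.add(pipe);
                pipe.source().configureBlocking(false);                 // must be non-blocking to register
                pipe.source().register(selector, SelectionKey.OP_READ); // watch it for readability
                pipe.sink().write(ByteBuffer.wrap(msg.getBytes(StandardCharsets.UTF_8)));
            }
            List<String> received = new ArrayList<>();
            ByteBuffer buf = ByteBuffer.allocate(256);
            while (received.size() < messages.size()) {
                selector.select(); // blocks until at least one registered channel is ready
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove(); // the selected-key set is not cleared automatically
                    buf.clear();
                    int n = ((Pipe.SourceChannel) key.channel()).read(buf); // never blocks
                    if (n > 0) {
                        received.add(new String(buf.array(), 0, n, StandardCharsets.UTF_8));
                    }
                }
            }
            return received;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe p : pipes) {
                try {
                    p.sink().close();
                    p.source().close();
                } catch (IOException ignored) {
                    // best-effort cleanup
                }
            }
        }
    }
}
```

The order of the returned messages depends on which channels the Selector reports first, so callers should not rely on it.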

【Based on Buffer】:

Traditional I/O is stream-oriented (byte or character streams): it reads one or more bytes sequentially from a stream, so the read position cannot be moved arbitrarily.

NIO replaces traditional I/O streams with the concepts of Channel and Buffer: data can only be read from a Channel into a Buffer, or written from a Buffer into a Channel.

Because it operates on Buffers, NIO, unlike sequential stream-based I/O, allows random access to any position within the data.
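A small sketch of Buffer-oriented access using `java.nio.ByteBuffer` directly: after `flip()`, data can be read sequentially, read or overwritten at an absolute index, and the position can be moved back, none of which a stream allows. The `BufferDemo` class is hypothetical and uses only the JDK class, not Netty’s `ByteBuf`.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

/** Buffer-oriented access with java.nio.ByteBuffer (illustrative). */
final class BufferDemo {
    static String demo() {
        ByteBuffer buf = ByteBuffer.allocate(16);
        buf.put("Netty".getBytes(StandardCharsets.US_ASCII)); // write mode: position = 5
        buf.flip();                                           // read mode: position = 0, limit = 5
        char first = (char) buf.get();                        // relative read: position -> 1
        char last = (char) buf.get(buf.limit() - 1);          // absolute read: position unchanged
        buf.put(0, (byte) 'n');                               // absolute write at index 0
        buf.position(0);                                      // move the read pointer back at will
        byte[] all = new byte[buf.remaining()];
        buf.get(all);
        return first + "," + last + "," + new String(all, StandardCharsets.US_ASCII);
    }
}
```

`BufferDemo.demo()` returns `"N,y,netty"`: the first byte was read, the last byte was peeked without moving the position, and index 0 was overwritten before re-reading from the start.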

6.2 Thread Model

How is a datagram read, on which thread are encoding and decoding performed after reading, and how is the decoded message dispatched? Different threading models answer these questions differently, and the choice has a significant impact on performance.

【Event-Driven Model】:

Typically, we have two approaches to designing a program with an event-driven model:

1) Polling approach: a thread continuously polls the relevant event sources to check whether any event has occurred and, if one has, invokes the event-handling logic.

2) Event-driven approach: when an event occurs, the main thread places it into an event queue, while another thread continuously loops over the queue, consuming events and calling the corresponding processing logic. The event-driven approach is also called the message-notification approach; it is essentially the Observer pattern.

Taking GUI logic as an example, the two approaches differ as follows:

1) Polling approach: the thread continuously checks whether a button-click event has occurred and, if so, invokes the handling logic.

2) Event-driven approach: when a click event occurs, it is placed into an event queue; another thread consumes the events in the queue and invokes the relevant handling logic based on each event’s type.
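The event-driven approach can be sketched with a `BlockingQueue`: the producer only enqueues events, and a separate consumer thread blocks on `take()` (rather than busy-polling) and dispatches each event to the handler registered for its type, observer-style. The `Event` type, the `click`/`key` event names, and the sentinel used to stop the consumer are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

/** Event-driven dispatch through a queue: a sketch, not a GUI or Netty API. */
final class EventDrivenDemo {
    /** Hypothetical event: a type used for dispatch plus a payload. */
    static final class Event {
        final String type;
        final String payload;

        Event(String type, String payload) {
            this.type = type;
            this.payload = payload;
        }
    }

    private static final Event STOP = new Event("stop", ""); // sentinel that ends the consumer

    static List<String> dispatchAll(List<Event> events) {
        BlockingQueue<Event> queue = new LinkedBlockingQueue<>();
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        // Handlers registered per event type (observer style).
        Map<String, Consumer<Event>> handlers = Map.of(
                "click", e -> log.add("clicked " + e.payload),
                "key", e -> log.add("typed " + e.payload));
        Consumer<Event> fallback = e -> log.add("unhandled " + e.type);

        // Consumer thread: blocks in take() instead of busy-polling an event source.
        Thread consumer = new Thread(() -> {
            try {
                for (Event e = queue.take(); e != STOP; e = queue.take()) {
                    handlers.getOrDefault(e.type, fallback).accept(e);
                }
            } catch (InterruptedException ie) {
                Thread.currentThread().interrupt();
            }
        }, "event-consumer");
        consumer.start();

        // Producer: when an event "occurs", it is only enqueued.
        events.forEach(queue::add);
        queue.add(STOP);
        try {
            consumer.join();
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(log);
    }
}
```

Because there is a single consumer reading a FIFO queue, events are handled in the order they were produced.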

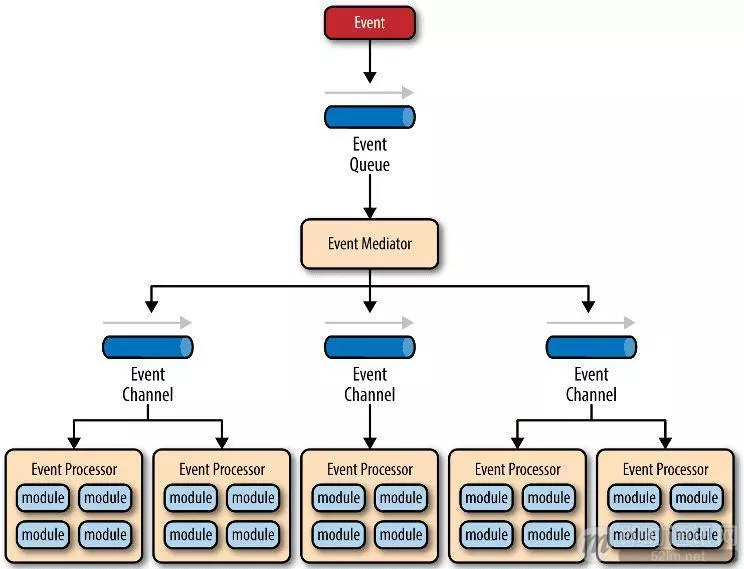

Here we reference O’Reilly’s illustration of the event-driven model, which consists of 4 basic components:

1) Event Queue: The entry point for receiving events, storing events that are awaiting processing;

2) Event Mediator: Distributes various events to different business logic units.

3) Event Channel: A communication channel between the dispatcher and the handler;

4) Event Processor: Implements business logic, and upon completion, emits an event to trigger the next step in the process.

It can be observed that, compared to the traditional polling model, event-driven architecture offers the following advantages:

1) Good scalability: With a distributed asynchronous architecture, the event processors are highly decoupled, allowing easy expansion of event processing logic;

2) High Performance: Events can be temporarily stored in a queue, enabling convenient parallel asynchronous processing of events.

【Reactor Thread Model】:

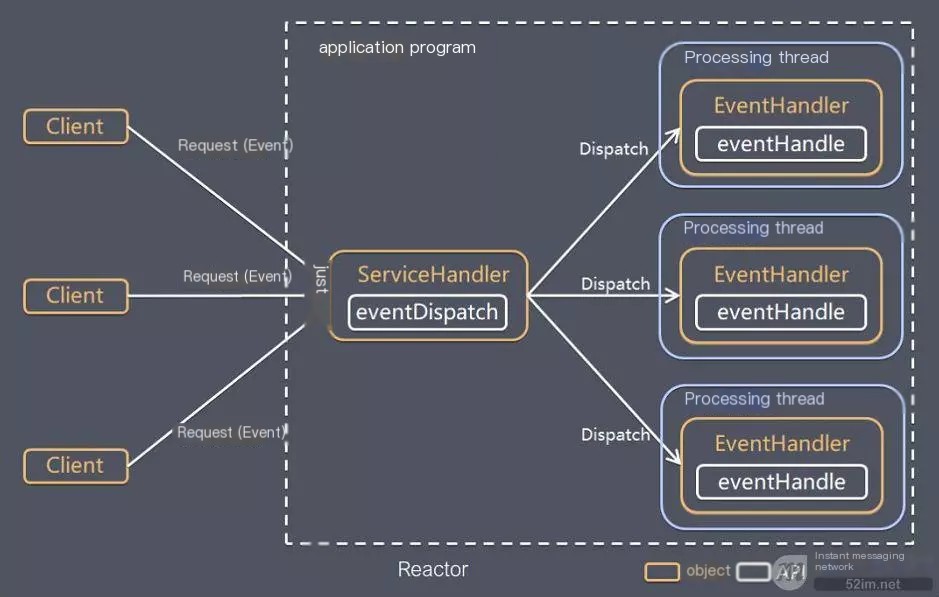

The Reactor pattern is an event-driven processing model for handling service requests that one or more inputs deliver concurrently to a service handler.

The server application demultiplexes the incoming requests and dispatches them synchronously to the corresponding handler threads. For this reason the Reactor pattern is also called the Dispatcher pattern: it uses I/O multiplexing to listen for events in one place and, upon receiving an event, dispatches it to a process or thread. It is one of the essential techniques for writing high-performance network servers.

In the Reactor model, there are two key components:

1) Reactor: The Reactor operates within a single thread, responsible for listening to and dispatching events, directing them to the appropriate handlers to react to IO events. It’s akin to a company switchboard operator who answers calls from clients and transfers the line to the appropriate contact.

2) Handlers: these perform the actual work of an I/O event, like the officials in the company whom the client actually wants to talk to. The Reactor responds to an I/O event by dispatching the appropriate handler, and the handlers perform non-blocking operations.

Depending on the number of Reactors and Handler threads, the Reactor model has three variants:

1) Single Reactor Single-threaded;

2) Single Reactor Multithreading;

3) Master-Slave Reactor Multithreading.

Put simply, a Reactor is a thread that runs a loop like `while (true) { selector.select(); …}` and keeps producing new events, which makes the name “reactor” quite fitting.
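Building on the JDK `Selector` API, the sketch below is a single-threaded Reactor in the style of Doug Lea’s “Scalable IO in Java”: each channel is registered together with its handler (stored as the `SelectionKey` attachment), and each loop iteration waits in `select()` and then dispatches every ready key to its handler. `MiniReactor` and its `Handler` interface are hypothetical names, and a `Pipe` stands in for a client socket.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectableChannel;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

/** Hypothetical single-threaded Reactor: one Selector loop that dispatches ready keys to handlers. */
final class MiniReactor implements AutoCloseable {
    /** Handler invoked when its channel is ready (hypothetical interface). */
    interface Handler {
        void handle(SelectionKey key) throws IOException;
    }

    private final Selector selector;

    MiniReactor() throws IOException {
        selector = Selector.open();
    }

    void register(SelectableChannel channel, int ops, Handler handler) throws IOException {
        channel.configureBlocking(false);
        channel.register(selector, ops, handler); // the handler rides along as the key's attachment
    }

    /** One turn of the reactor loop: wait for readiness, then dispatch. */
    void runOnce(long timeoutMillis) throws IOException {
        selector.select(timeoutMillis);
        Iterator<SelectionKey> it = selector.selectedKeys().iterator();
        while (it.hasNext()) {
            SelectionKey key = it.next();
            it.remove();
            ((Handler) key.attachment()).handle(key); // dispatch to the registered handler
        }
    }

    @Override
    public void close() throws IOException {
        selector.close();
    }

    /** Demo: a pipe stands in for a socket; the handler collects whatever arrives. */
    static String demo(String message) {
        StringBuilder received = new StringBuilder();
        try (MiniReactor reactor = new MiniReactor()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink(); Pipe.SourceChannel source = pipe.source()) {
                ByteBuffer buf = ByteBuffer.allocate(64);
                reactor.register(source, SelectionKey.OP_READ, key -> {
                    buf.clear();
                    int n = ((Pipe.SourceChannel) key.channel()).read(buf);
                    if (n > 0) {
                        received.append(new String(buf.array(), 0, n, StandardCharsets.UTF_8));
                    }
                });
                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));
                for (int i = 0; i < 100 && received.length() < message.length(); i++) {
                    reactor.runOnce(50);
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return received.toString();
    }
}
```

In the single-Reactor single-thread variant, this one loop both accepts and handles everything; the multithreaded variants move the handler work or the sub-Reactors onto other threads.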

Due to space constraints, the characteristics, advantages, and disadvantages of each Reactor variant are not elaborated here. Interested readers can refer to two articles I wrote previously: “High-Performance Network Programming (Part 5): Understanding I/O Models in High-Performance Network Programming” and “High-Performance Network Programming (Part 6): Understanding Thread Models in High-Performance Network Programming”.

【Netty Thread Model】:

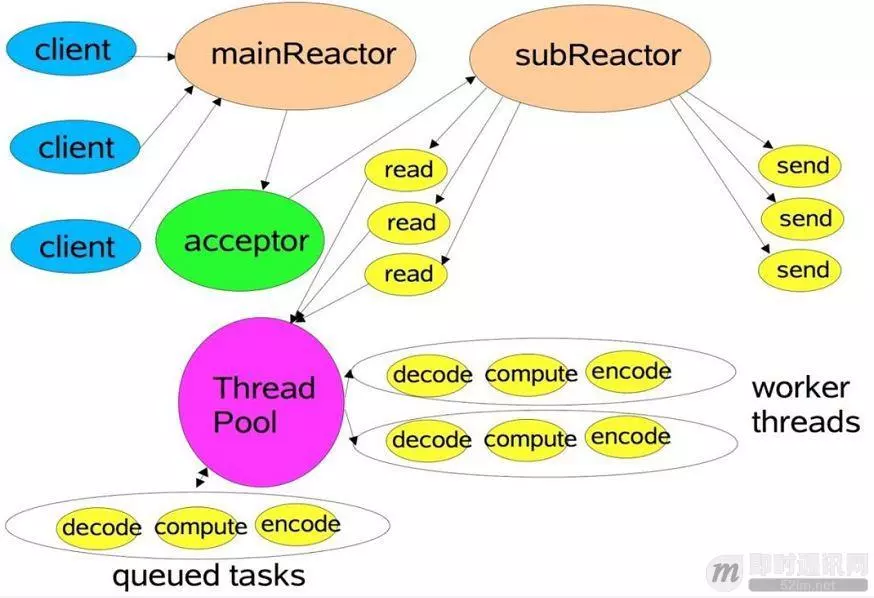

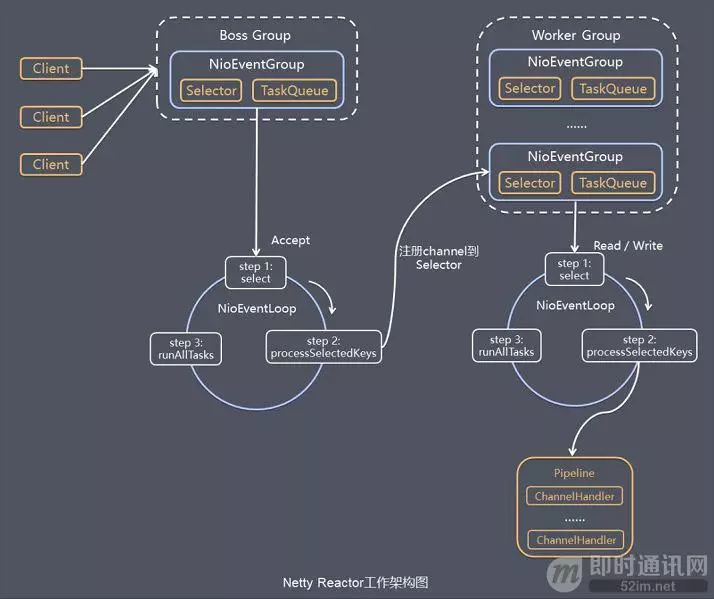

Netty’s threading model is essentially a modified version of the master-slave Reactor multithreading model (see the diagram), in which multiple Reactors cooperate:

1) MainReactor is responsible for handling client connection requests and delegates these requests to the SubReactor;

2) SubReactor is responsible for the I/O read and write requests of the corresponding channel;

3) Non-I/O requests (specific logic processing) will be directly written into the queue, waiting to be handled by the worker threads.

The master-slave Reactor multithreading diagram is taken from Doug Lea’s introduction to the Reactor pattern, “Scalable IO in Java”.

Special Note: Although Netty’s threading model is based on master-slave Reactor multithreading and borrows its MainReactor/SubReactor structure, in the actual implementation the SubReactor and the Worker threads live in the same thread pool:

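```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;

// bossGroup: MainReactor thread(s) that accept connections
EventLoopGroup bossGroup = new NioEventLoopGroup();
// workerGroup: SubReactors that also act as worker threads
EventLoopGroup workerGroup = new NioEventLoopGroup();
ServerBootstrap server = new ServerBootstrap();
server.group(bossGroup, workerGroup)
      .channel(NioServerSocketChannel.class);
```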

In the code above, `bossGroup` and `workerGroup` are the two objects passed to the `group()` method of `ServerBootstrap`. Both are thread pools:

1) bossGroup: after a port is bound, only one of its threads is selected as the MainReactor, dedicated to handling Accept events on that port. Each bound port corresponds to exactly one Boss thread.

2) workerGroup: all of its threads are used, each acting as both a SubReactor and a Worker thread.

【Asynchronous Processing】:

Asynchronous is the counterpart of synchronous: after an asynchronous call is issued, the caller does not receive the result immediately. Instead, the component that actually handles the call notifies the caller on completion through status, notifications, or callbacks.

I/O operations in Netty, including Bind, Write, and Connect, are asynchronous and simply return a ChannelFuture immediately.

The caller cannot obtain the result right away; through the Future-Listener mechanism, however, the user can conveniently either query the result actively or be notified of it.

When a Future object is initially created, it is in a non-completed state. The caller can obtain the execution status of the operation through the returned ChannelFuture and register listeners to perform operations after completion.

Common operations include:

1) Use the isDone method to determine if the current operation is complete;

2) Use the isSuccess method to determine if the completed operation was successful.

3) Use the cause method to obtain the reason the current completed operation failed;

4) Use the isCancelled method to determine if the current completed operation has been canceled;

5) Use the `addListener` method to register a listener, so that once the operation completes (when `isDone` returns true), the specified listener is notified; if the `Future` object is already complete when the listener is added, the listener is notified immediately.
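These Future-Listener semantics, in particular that a listener added to an already completed future is notified immediately, can be illustrated with a tiny hand-written promise. This `SimplePromise` is a hypothetical teaching sketch, not Netty’s `DefaultPromise`; it models `isDone`, `isSuccess`, `cause`, and `addListener` but leaves out cancellation and blocking waits.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Consumer;

/** Hypothetical minimal promise illustrating Future-Listener semantics (not Netty's DefaultPromise). */
final class SimplePromise<T> {
    private final List<Consumer<SimplePromise<T>>> listeners = new ArrayList<>();
    private boolean done;
    private T result;
    private Throwable cause;

    synchronized boolean isDone() { return done; }
    synchronized boolean isSuccess() { return done && cause == null; }
    synchronized Throwable cause() { return cause; }  // null unless the operation failed
    synchronized T getNow() { return result; }        // null until completed successfully

    boolean setSuccess(T value) { return complete(value, null); }
    boolean setFailure(Throwable t) { return complete(null, Objects.requireNonNull(t)); }

    private boolean complete(T value, Throwable t) {
        List<Consumer<SimplePromise<T>>> toNotify;
        synchronized (this) {
            if (done) {
                return false; // a future completes at most once
            }
            done = true;
            result = value;
            cause = t;
            toNotify = new ArrayList<>(listeners);
            listeners.clear();
        }
        toNotify.forEach(l -> l.accept(this)); // notify outside the lock
        return true;
    }

    /** Registers a listener; if already complete, the listener is notified immediately. */
    SimplePromise<T> addListener(Consumer<SimplePromise<T>> listener) {
        synchronized (this) {
            if (!done) {
                listeners.add(listener);
                return this;
            }
        }
        listener.accept(this);
        return this;
    }
}
```

Notifying listeners outside the lock is a deliberate choice: a listener that calls back into the promise cannot deadlock on it.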

In the following code, binding the port is an asynchronous operation. When the binding process is complete, the appropriate listener will handle the logic:

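```java
import java.util.Date;

// bind() returns a ChannelFuture immediately; the listener runs once binding finishes
serverBootstrap.bind(port).addListener(future -> {
    if (future.isSuccess()) {
        System.out.println(new Date() + ": Port [" + port + "] binding successful!");
    } else {
        System.err.println("Port [" + port + "] binding failed!");
    }
});
```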

Unlike traditional blocking I/O, where the thread blocks after issuing an I/O operation until the operation completes, asynchronous processing does not block the calling thread: it can execute other work while the I/O is in progress, which yields more stability and higher throughput under high concurrency.

7. Architecture Design of the Netty Framework

Having covered the theory behind Netty, we now turn to its architectural design: its functional features, module components, and operating process.

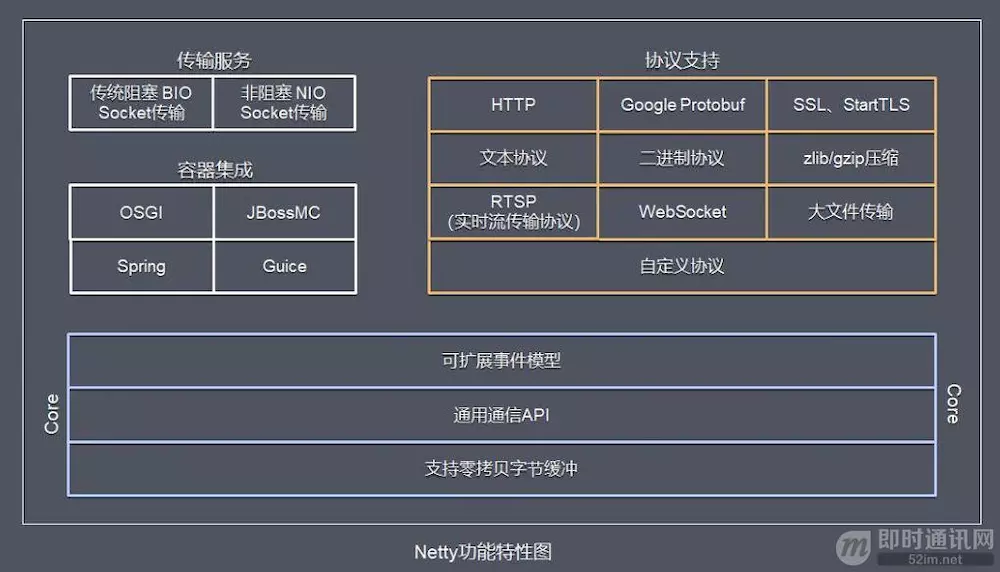

7.1 Functional Features

The features of Netty are as follows:

1) Transport Services: Supports BIO and NIO;

2) Container Integration: Supports OSGI, JBossMC, Spring, Guice containers;

3) Protocol Support: A series of common protocols such as HTTP, Protobuf, binary, text, and WebSocket are all supported. Additionally, custom protocols can be implemented through encoding and decoding logic.

4) Core: Scalable event model, universal communication API, ByteBuf buffer objects supporting zero-copy.
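To illustrate custom protocols built from encoding and decoding logic, and the half-packet problem mentioned earlier, here is a sketch of a length-prefixed frame codec: each message is a 4-byte big-endian length followed by a UTF-8 payload, and the decoder accumulates bytes until a whole frame has arrived. The wire format and the `LengthPrefixedCodec` name are assumptions; Netty ships ready-made decoders for this job (for example `LengthFieldBasedFrameDecoder`), but this sketch uses only `java.nio.ByteBuffer` and copies bytes on every call for clarity rather than speed.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical length-prefixed codec: [4-byte big-endian length][UTF-8 payload]. */
final class LengthPrefixedCodec {
    // Bytes received but not yet decoded, kept in read mode (position..limit).
    private ByteBuffer cumulation = ByteBuffer.allocate(0);

    static ByteBuffer encode(String msg) {
        byte[] body = msg.getBytes(StandardCharsets.UTF_8);
        ByteBuffer out = ByteBuffer.allocate(4 + body.length);
        out.putInt(body.length).put(body);
        out.flip();
        return out;
    }

    /** Appends newly read bytes and returns every complete frame; a partial frame waits for more data. */
    List<String> decode(ByteBuffer incoming) {
        ByteBuffer merged = ByteBuffer.allocate(cumulation.remaining() + incoming.remaining());
        merged.put(cumulation).put(incoming);
        merged.flip();
        List<String> frames = new ArrayList<>();
        while (merged.remaining() >= 4) {
            int length = merged.getInt(merged.position()); // peek at the header without consuming it
            if (length < 0) {
                throw new IllegalStateException("negative frame length: " + length);
            }
            if (merged.remaining() < 4 + length) {
                break; // half packet: wait for the rest
            }
            merged.position(merged.position() + 4);
            byte[] body = new byte[length];
            merged.get(body);
            frames.add(new String(body, StandardCharsets.UTF_8));
        }
        cumulation = merged; // keep the unread tail for the next call
        return frames;
    }
}
```

Feeding the decoder arbitrary slices of the byte stream, for instance 3 bytes, then 8, then the rest, still yields exactly the original messages, which is the point of framing.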

7.2 Module Components

【Bootstrap, ServerBootstrap】:

The term “Bootstrap” refers to initialization. A Netty application typically starts with a Bootstrap, which primarily serves to configure the entire Netty program and connect various components. In Netty, the Bootstrap class is the bootstrap class for client-side programs, while ServerBootstrap is the bootstrap class for server-side programs.

【Future, ChannelFuture】:

As previously introduced, in Netty, all IO operations are asynchronous, and you cannot immediately know whether the message has been correctly processed.

You can either wait for it to complete or register a listener directly. This is done with Future and ChannelFuture: you register a listener, and it is triggered automatically when the operation succeeds or fails.

【Channel】:

Channel is Netty’s network communication component and is used to perform network I/O operations. Channel provides the user with:

1) The current status of the network connection channel (e.g., is it open? Is it connected?)

2) Configuration parameters for network connections (e.g., receive buffer size)

3) Asynchronous network I/O operations (such as establishing connections, reading, writing, and binding ports). Asynchronous invocation means that any I/O call returns immediately, with no guarantee that the requested I/O operation has completed by the time the call returns.

4) A ChannelFuture instance returned immediately by each call; by registering listeners on the ChannelFuture, the caller is notified via callbacks when the I/O operation succeeds, fails, or is canceled.

5) Association of I/O operations with their corresponding handlers.

Different protocols and blocking types have their own Channel types.

Below are some common types of Channels:

NioSocketChannel, asynchronous client-side TCP Socket connection.

NioServerSocketChannel, asynchronous server-side TCP Socket connection.

NioDatagramChannel, asynchronous UDP connection.

NioSctpChannel, asynchronous client-side SCTP connection.

NioSctpServerChannel, asynchronous server-side SCTP connection.

These channels cover UDP and TCP network IO as well as file IO.

【Selector】:

Netty implements I/O multiplexing based on the Selector object, allowing a single thread to monitor events from multiple connected Channels through the Selector.

Once a Channel is registered with a Selector, the internal mechanism of the Selector can automatically and continuously query (select) these registered Channels to check for ready I/O events (such as readable, writable, network connection completion, etc.). This allows the program to efficiently manage multiple Channels using a single thread.

【NioEventLoop】:

The `NioEventLoop` maintains a thread and a task queue, supporting asynchronous task submission. When the thread starts, it invokes the `run` method of `NioEventLoop`, executing both I/O and non-I/O tasks:

I/O tasks, specifically events that are ready in the selectionKey such as accept, connect, read, write, etc., are triggered by the processSelectedKeys method.

Non-I/O tasks, such as tasks added to the taskQueue like register0 and bind0, are triggered by the runAllTasks method.

The execution time of the two types of tasks is controlled by the variable `ioRatio`, which defaults to 50, indicating that the time allowed for executing non-I/O tasks is equal to the execution time of I/O tasks.
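The `ioRatio` split can be expressed as a time budget: after the I/O phase, queued tasks may run for `ioTime * (100 - ioRatio) / ioRatio`, so 50 gives tasks the same time the I/O phase took. The sketch below shows that arithmetic and a task runner that stops once the budget is spent. It is modeled on, not copied from, Netty’s `NioEventLoop`, which checks the deadline periodically rather than after every task and treats `ioRatio = 100` specially (run all tasks); `IoRatioBudget` and its methods are hypothetical.

```java
import java.util.Queue;

/** Hypothetical sketch of an ioRatio-style time split; not Netty's NioEventLoop. */
final class IoRatioBudget {
    /** Time allowed for queued tasks after an I/O phase that took ioTimeNanos. */
    static long taskBudgetNanos(long ioTimeNanos, int ioRatio) {
        if (ioRatio <= 0 || ioRatio >= 100) {
            // Netty treats 100 as "run all tasks"; this sketch only handles 1..99.
            throw new IllegalArgumentException("ioRatio must be in [1, 99]: " + ioRatio);
        }
        return ioTimeNanos * (100 - ioRatio) / ioRatio;
    }

    /** Runs queued tasks until the queue is empty or the budget is spent; always runs at least one. */
    static int runTasks(Queue<Runnable> tasks, long budgetNanos) {
        final long deadline = System.nanoTime() + budgetNanos;
        int ran = 0;
        Runnable task;
        while ((task = tasks.poll()) != null) {
            task.run();
            ran++;
            if (System.nanoTime() - deadline >= 0) {
                break; // budget exhausted: go back to the I/O phase
            }
        }
        return ran;
    }

    /** One loop iteration: a simulated I/O phase, then tasks within the derived budget. */
    static int iteration(Runnable ioPhase, Queue<Runnable> tasks, int ioRatio) {
        long ioStart = System.nanoTime();
        ioPhase.run(); // stands in for select() + processSelectedKeys()
        long ioTime = System.nanoTime() - ioStart;
        return runTasks(tasks, taskBudgetNanos(ioTime, ioRatio));
    }
}
```

For example, with 1,000 ns of I/O, an `ioRatio` of 80 leaves tasks 250 ns, while 20 leaves them 4,000 ns.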

【NioEventLoopGroup】:

NioEventLoopGroup primarily manages the lifecycle of its EventLoops and can be thought of as a thread pool. It maintains a group of threads internally, with each thread (NioEventLoop) handling events for multiple Channels, while each Channel is bound to exactly one thread.
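A sketch of the “group of loops, one loop per Channel” relationship: the group owns N single-threaded executors and hands them out round-robin, and whoever receives a loop (a Channel, in Netty) keeps using that same thread. `MiniEventLoopGroup` and its methods are hypothetical; in Netty the equivalent selection happens in the group’s `next()` method.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical mini event-loop group: N single-threaded loops handed out round-robin. */
final class MiniEventLoopGroup implements AutoCloseable {
    private final List<ExecutorService> loops = new ArrayList<>();
    private final AtomicInteger counter = new AtomicInteger();

    MiniEventLoopGroup(int nThreads) {
        for (int i = 0; i < nThreads; i++) {
            final String name = "loop-" + i;
            loops.add(Executors.newSingleThreadExecutor(r -> {
                Thread t = new Thread(r, name); // exactly one thread per loop
                t.setDaemon(true);
                return t;
            }));
        }
    }

    /** Round-robin choice of a loop for a newly registered channel, which then keeps it. */
    int nextIndex() {
        return Math.floorMod(counter.getAndIncrement(), loops.size());
    }

    /** Runs a task on the given loop and reports which thread executed it. */
    String threadNameOf(int index) {
        Callable<String> whoAmI = () -> Thread.currentThread().getName();
        try {
            return loops.get(index).submit(whoAmI).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        }
    }

    @Override
    public void close() {
        loops.forEach(ExecutorService::shutdownNow);
    }
}
```

Because a channel always goes back to the same single-threaded loop, its events are processed serially and its handlers need no locking.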

【ChannelHandler】:

The `ChannelHandler` is an interface that processes I/O events or intercepts I/O operations and forwards them to the next handler in its `ChannelPipeline` (business processing chain).

The ChannelHandler interface itself declares only a few methods; the event-handling methods you actually need to implement are declared in its sub-interfaces, so for convenience you can implement one of those:

`ChannelInboundHandler` is used to handle inbound I/O events.

`ChannelOutboundHandler` is used to handle outbound I/O operations.

Or extend one of the following adapter classes:

`ChannelInboundHandlerAdapter` is used for handling inbound I/O events.

`ChannelOutboundHandlerAdapter` is used for handling outbound I/O operations.

`ChannelDuplexHandler` is used for handling both inbound and outbound events.

【ChannelHandlerContext】:

Stores all the context information related to a Channel and is associated with one ChannelHandler object.

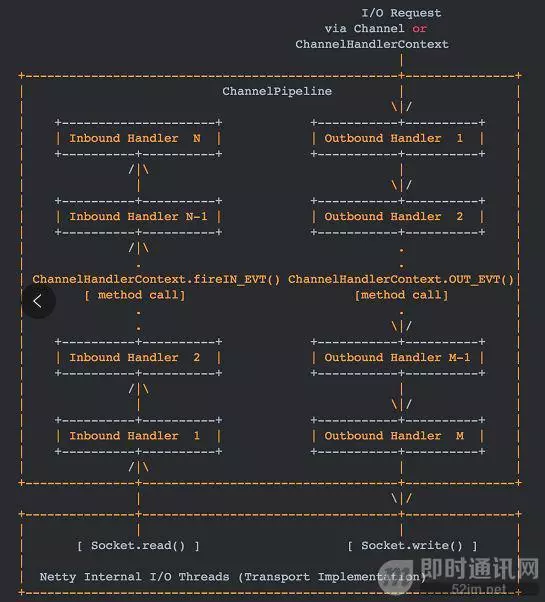

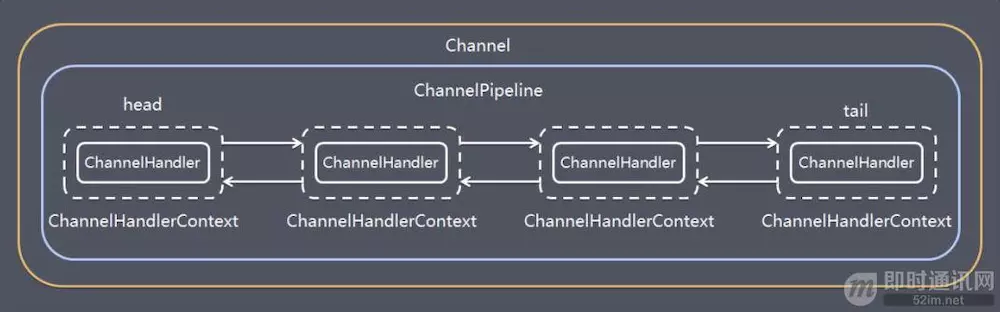

【ChannelPipeline】:

Holds a list of ChannelHandlers that handle or intercept the inbound events and outbound operations of a Channel.

ChannelPipeline implements an advanced form of the interceptor filter pattern, allowing users full control over how events are processed and how various ChannelHandlers within a Channel interact with each other.

The diagram quoted from the Netty 4.1 Javadoc for ChannelPipeline shows how ChannelHandlers typically handle I/O events within a ChannelPipeline.

I/O events are handled by ChannelInboundHandler or ChannelOutboundHandler and are propagated through methods defined in ChannelHandlerContext.

For example, `ChannelHandlerContext.fireChannelRead(Object)` and `ChannelOutboundInvoker.write(Object)` forward to their nearest handlers.

Inbound events are processed by inbound handlers in a bottom-up direction, as illustrated on the left side of the diagram. Inbound handlers typically handle the inbound data generated by the I/O threads at the bottom of the diagram.

Inbound data is typically read from a remote source through actual input operations, such as `SocketChannel.read(ByteBuffer)`.

Outbound events are processed by outbound handlers in a top-down direction, as shown on the right side of the diagram. Outbound handlers typically generate or transform outbound traffic, such as write requests.

I/O threads typically perform the actual output operations, such as SocketChannel.write(ByteBuffer).

In Netty, each Channel has one and only one ChannelPipeline associated with it. Their composition relationship is as follows:

A Channel contains a ChannelPipeline, and the ChannelPipeline maintains a bidirectional linked list composed of ChannelHandlerContext instances, with each ChannelHandlerContext associated with a ChannelHandler.

Within this doubly linked list, inbound events travel from the head toward the tail, passing through each inbound handler in turn, while outbound events travel from the tail toward the head, passing through each outbound handler. The two kinds of handlers do not interfere with each other.
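The propagation rules can be sketched as a doubly linked list of contexts between head and tail sentinels. In this hypothetical `MiniPipeline`, an inbound event skips outbound handlers on its way to the tail, and an outbound write skips inbound handlers on its way to the head, where a real pipeline would hand the bytes to the socket. The handler interfaces are simplified stand-ins for `ChannelInboundHandler` and `ChannelOutboundHandler`, not Netty’s API.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical mini pipeline: a doubly linked list of contexts between head and tail sentinels. */
final class MiniPipeline {
    /** Simplified stand-in for an inbound handler. */
    interface InboundHandler {
        void channelRead(Context ctx, Object msg);
    }

    /** Simplified stand-in for an outbound handler. */
    interface OutboundHandler {
        void write(Context ctx, Object msg);
    }

    final List<String> trace = new ArrayList<>();
    private final Context head = new Context(null);
    private final Context tail = new Context(null);

    MiniPipeline() {
        head.next = tail;
        tail.prev = head;
    }

    /** One node of the list; wraps exactly one handler. */
    final class Context {
        final Object handler;
        Context prev;
        Context next;

        Context(Object handler) {
            this.handler = handler;
        }

        /** Inbound: hand the event to the next inbound handler toward the tail. */
        void fireChannelRead(Object msg) {
            Context c = next;
            while (c != tail && !(c.handler instanceof InboundHandler)) {
                c = c.next; // skip outbound-only handlers
            }
            if (c == tail) {
                trace.add("tail got " + msg); // reached the end of the pipeline
            } else {
                ((InboundHandler) c.handler).channelRead(c, msg);
            }
        }

        /** Outbound: hand the operation to the next outbound handler toward the head. */
        void write(Object msg) {
            Context c = prev;
            while (c != head && !(c.handler instanceof OutboundHandler)) {
                c = c.prev; // skip inbound-only handlers
            }
            if (c == head) {
                trace.add("socket <- " + msg); // a real head would write to the channel here
            } else {
                ((OutboundHandler) c.handler).write(c, msg);
            }
        }
    }

    MiniPipeline addLast(Object handler) {
        Context ctx = new Context(handler);
        ctx.prev = tail.prev;
        ctx.next = tail;
        tail.prev.next = ctx;
        tail.prev = ctx;
        return this;
    }

    void fireChannelRead(Object msg) { head.fireChannelRead(msg); } // inbound enters at the head
    void write(Object msg) { tail.write(msg); }                     // outbound enters at the tail
}
```

With handlers added in the order decoder (inbound), encoder (outbound), business (inbound), a read visits decoder then business, and a write visits only the encoder before reaching the head.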

8. Working Principle of the Netty Framework

A typical procedure to initialize and start a Netty server is demonstrated in the following code:


```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import java.util.Date;

public class NettyServer {
    public static void main(String[] args) {
        // Create the mainReactor (boss thread group)
        NioEventLoopGroup bossGroup = new NioEventLoopGroup();
        // Create the worker thread group
        NioEventLoopGroup workerGroup = new NioEventLoopGroup();
        final ServerBootstrap serverBootstrap = new ServerBootstrap();
        serverBootstrap
                // Assemble the NioEventLoopGroups
                .group(bossGroup, workerGroup)
                // Set the channel type to NIO
                .channel(NioServerSocketChannel.class)
                // Set connection configuration parameters
                .option(ChannelOption.SO_BACKLOG, 1024)
                .childOption(ChannelOption.SO_KEEPALIVE, true)
                .childOption(ChannelOption.TCP_NODELAY, true)
                // Configure the inbound and outbound event handlers
                .childHandler(new ChannelInitializer<NioSocketChannel>() {
                    @Override
                    protected void initChannel(NioSocketChannel ch) {
                        // Configure inbound and outbound channel handlers
                        ch.pipeline().addLast(…);
                        ch.pipeline().addLast(…);
                    }
                });
        // Bind the port
        int port = 8080;
        serverBootstrap.bind(port).addListener(future -> {
            if (future.isSuccess()) {
                System.out.println(new Date() + ": Port [" + port + "] bound successfully!");
            } else {
                System.err.println("Port [" + port + "] binding failed!");
            }
        });
    }
}
```

The basic process is described as follows:

1) Initialize by creating 2 NioEventLoopGroups: the bossGroup is used for handling Accept connection establishment events and distributing requests, while the workerGroup is responsible for processing I/O read and write events and business logic.

2) Configure the ServerBootstrap (the server-side bootstrap class): set the EventLoopGroups, Channel type, and connection parameters, and set up the inbound and outbound event handlers.

3) Bind the port and start working.

Based on the Netty Reactor model described above, Netty’s server-side architecture can be summarized as follows:

The server side includes 1 Boss NioEventLoopGroup and 1 Worker NioEventLoopGroup.

The `NioEventLoopGroup` is equivalent to one event loop group, which contains multiple event loops (`NioEventLoop`). Each `NioEventLoop` includes one `Selector` and one event loop thread.

Each iteration of a Boss NioEventLoop’s loop consists of 3 steps:

1) Poll for Accept events;

2) Handle Accept I/O events: establish a connection with the client, create a NioSocketChannel, and register it with the Selector of a Worker NioEventLoop;

3) Process the tasks in the task queue (runAllTasks). These include tasks submitted by user calls to eventloop.execute or schedule, as well as tasks submitted to that eventloop by other threads.

Each iteration of a Worker NioEventLoop’s loop consists of 3 steps:

1) Poll for Read and Write events;

2) Handle I/O events, i.e., Read and Write events, which are processed when a NioSocketChannel becomes readable or writable;

3) Process the tasks in the task queue (runAllTasks).

There are three typical use cases for Tasks in the task queue:

① User-defined ordinary tasks:

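```java
// Runs on the channel's own event loop thread as soon as it reaches the task queue
ctx.channel().eventLoop().execute(new Runnable() {
    @Override
    public void run() {
        // …
    }
});
```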

② Non-current Reactor thread invoking various methods of the Channel:

For example, in a push system, a business thread looks up the Channel reference that corresponds to a user’s ID and calls its write method to push a message to that user. Because the calling thread is not the Channel’s Reactor thread, the write is submitted to the task queue and consumed asynchronously.

③ User-Customized Scheduled Tasks:

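```java
import java.util.concurrent.TimeUnit;

// Runs on the channel's event loop thread after a 60-second delay
ctx.channel().eventLoop().schedule(new Runnable() {
    @Override
    public void run() {}
}, 60, TimeUnit.SECONDS);
```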
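All three cases rely on the same rule: state belonging to a Channel is touched only by its event loop thread, and calls from other threads become tasks on that loop’s queue. The sketch below shows the check-and-submit pattern with a single-threaded JDK executor standing in for the event loop; `MiniChannel`, `inEventLoop()`, `write()`, and `snapshot()` are hypothetical names that echo, but do not reproduce, Netty’s.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical channel bound to one single-threaded "event loop". */
final class MiniChannel implements AutoCloseable {
    private volatile Thread loopThread;
    private final List<String> written = new ArrayList<>(); // touched only on the loop thread
    private final ExecutorService loop;

    MiniChannel() {
        loop = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r, "mini-loop");
            t.setDaemon(true);
            loopThread = t; // remember which thread owns this channel
            return t;
        });
    }

    boolean inEventLoop() {
        return Thread.currentThread() == loopThread;
    }

    /** Writes directly on the loop thread; from any other thread, enqueues a task instead. */
    void write(String msg) {
        if (inEventLoop()) {
            written.add(msg + "@" + Thread.currentThread().getName());
        } else {
            loop.execute(() -> write(msg)); // re-enters write() on the loop thread
        }
    }

    /** Reads the written messages on the loop thread, after all previously queued tasks. */
    List<String> snapshot() {
        Callable<List<String>> copy = () -> new ArrayList<>(written);
        try {
            return loop.submit(copy).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        }
    }

    @Override
    public void close() {
        loop.shutdownNow();
    }
}
```

Writes issued from a business thread are recorded as having run on `mini-loop`, which is the hand-off described in case ② above.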

9. Summary of This Article

Netty 4 is still the recommended mainstream stable version. Netty 5 used ForkJoinPool, which increased code complexity without significantly improving performance, so that version is not recommended, and the official website does not provide a download link for it.

Netty seems to have a relatively high barrier to entry mainly because learning resources are scarce, not because it is especially difficult. In fact, anyone can understand Netty thoroughly, just as they can Spring.

Before learning, it’s advisable to thoroughly understand the entire framework’s principles, structure, and operation process, which can help you avoid many detours.